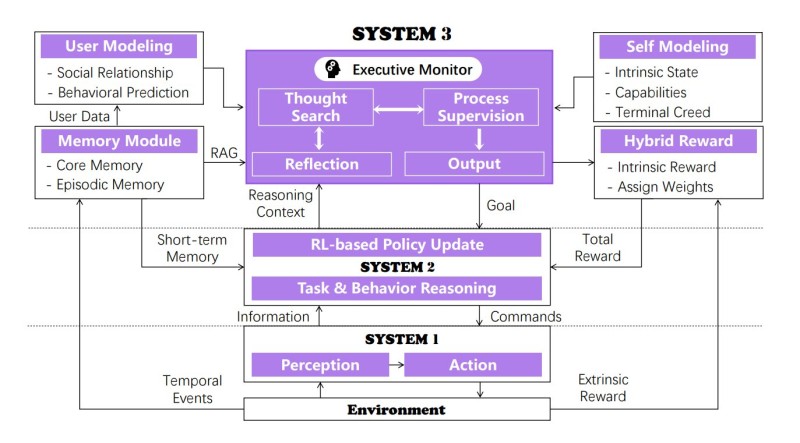

⬤ Researchers from the Shanghai Innovation Institute and Project Cuddlepark have proposed a new approach to AI agent design called "System 3." Most AI models today are described in terms of two layers: System 1 handles fast, automatic reactions, while System 2 handles slower, deliberate reasoning. The problem? According to the researchers, these two layers leave AI agents largely static, unable to grow or learn meaningfully from what they have already done.

⬤ System 3 sits on top of these existing layers and focuses on the big picture: long-term behavior, a consistent sense of self, and steady improvement over time. It combines performance monitoring, models of both the agent itself and its users, reflective reasoning, more refined reward assignment, and persistent memory. Together, these components let an AI agent review what it has done, reconsider its goals, and adjust how it acts going forward. The team built a working implementation called Sophia that can be layered on top of existing language models to make them more autonomous and adaptive.

⬤ The architecture traces how information flows from the external environment through perception and reasoning up to a supervisory layer that oversees the agent over the long term. Reinforcement learning updates feed into System 3, which evaluates performance using a mix of reward signals and executive oversight, while memory links experiences together over time. The goal is simple: help AI agents not just respond and reason, but develop stable behavior patterns and a better understanding of themselves as they operate.
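To make the supervisory loop more concrete, here is a minimal Python sketch. It is not Sophia's code: the class and method names (`SupervisorySketch`, `mixed_reward`, `reflect`), the weighted blend of an external task reward with an internal self-assessment, the `alpha` weight, and the threshold-based "revise" rule are all hypothetical assumptions chosen for illustration. It only shows the general shape of the idea: score each episode with a mixed reward, keep it in memory, and look back over that memory to decide whether to keep or revise a strategy.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    task: str
    extrinsic_reward: float  # assumed: task success signal from the environment
    intrinsic_reward: float  # assumed: the agent's own self-assessment score


@dataclass
class SupervisorySketch:
    """Hypothetical System 3-style layer; names and weighting are illustrative assumptions."""
    alpha: float = 0.7            # assumed weight on the extrinsic reward
    goal_threshold: float = 0.5   # assumed: below this mean score, the strategy is revised
    memory: list = field(default_factory=list)  # in-process stand-in for persistent memory

    def mixed_reward(self, ep: Episode) -> float:
        # Convex combination of the external and internal reward signals
        return self.alpha * ep.extrinsic_reward + (1 - self.alpha) * ep.intrinsic_reward

    def record(self, ep: Episode) -> float:
        # Score the episode and store (task, score) so it can be reflected on later
        score = self.mixed_reward(ep)
        self.memory.append((ep.task, score))
        return score

    def reflect(self, task: str) -> str:
        # Look back over past scores for this task and decide what to do next
        scores = [s for t, s in self.memory if t == task]
        if not scores:
            return "explore"  # no experience with this task yet
        mean = sum(scores) / len(scores)
        return "keep" if mean >= self.goal_threshold else "revise"


sup = SupervisorySketch()
sup.record(Episode("summarize", 1.0, 0.2))
sup.record(Episode("summarize", 0.0, 0.4))
print(sup.reflect("summarize"))
```

Here the two `"summarize"` episodes score about 0.76 and 0.12, averaging 0.44, which falls below the assumed 0.5 threshold, so `reflect("summarize")` returns `"revise"`. A real agent would back the memory with durable storage rather than an in-memory list.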

⬤ This marks a notable shift in AI research, away from systems that are merely good at individual tasks and toward systems that keep their reasoning consistent over time. If more models adopt long-term identity, reflective thinking, and structured feedback loops, autonomous agents could become considerably more capable and change what we expect AI systems to do in everyday situations.

Eseandre Mordi