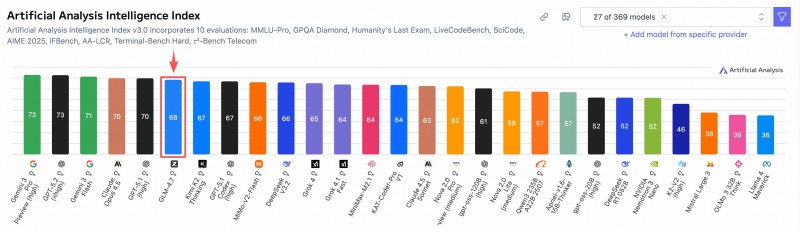

⬤ GLM-4.7 ranks 68th out of 369 AI models on the Artificial Analysis Intelligence Index, which places it in roughly the top fifth of the field and in the upper tier of open-source alternatives. The model posts strong results across multiple benchmarks, including MMLU-Pro and GPQA Diamond, the kind of tests that separate the real performers from the pretenders. What's really catching attention is how an open-source model is holding its own against the big-budget proprietary systems everyone's heard of.

⬤ Here's where it gets interesting: GLM-4.7's ranking puts it alongside (and sometimes ahead of) models from the usual suspects, such as OpenAI's GPT variants and Google's Gemini lineup. The Artificial Analysis Intelligence Index doesn't mess around with its evaluations either: it looks at everything from reasoning ability to practical performance. For an open-source model to place 68th out of 369 in that kind of company? That's saying something about where the industry's headed.

⬤ The whole open-source AI movement's been gaining serious momentum lately, and GLM-4.7's performance shows why developers are paying attention. You get flexibility, transparency, and—let's be honest—you're not locked into some corporate ecosystem. Models like this are proving you don't need a massive tech company's resources to build something competitive, which is opening doors for smaller teams and independent developers who want in on the AI revolution.

⬤ GLM-4.7's strong showing on the index isn't just a one-time win; it positions the model as a legitimate player for the long haul. As more developers look for alternatives to expensive proprietary solutions, rankings like this 68th-place finish make open-source options harder to ignore. The AI landscape's shifting, and models like GLM-4.7 are proving that open source isn't just keeping up anymore. It's actually competing where it counts.

Eseandre Mordi
