Large language models are making serious headway in healthcare, but diagnostic accuracy alone doesn't equal clinical safety. A new study finds that while AI can name diseases with near-physician accuracy, it struggles to judge whether a patient needs immediate care or can wait. This gap between diagnostic skill and medical judgment could determine whether AI becomes a trusted clinical assistant or remains a risky experiment.

The Study and Its Findings

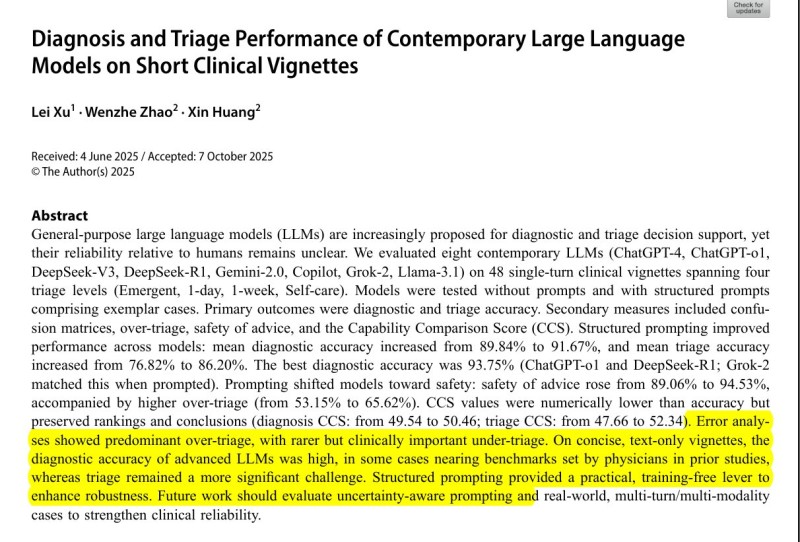

Ethan Mollick, professor at the Wharton School, highlighted findings from researchers Lei Xu, Wenzhe Zhao, and Xin Huang (2025).

They tested eight leading AI models—ChatGPT-4, ChatGPT-o1, DeepSeek-V3, DeepSeek-R1, Gemini-2.0, Copilot, Grok-2, and Llama-3.1—on 48 clinical scenarios. Each case fell into one of four urgency categories:

- Emergent (needs immediate care)

- 1-day (should see a doctor within 24 hours)

- 1-week (can wait up to a week)

- Self-care (manageable at home)

With structured prompting—giving AI worked examples beforehand—the best models hit 93.75% diagnostic accuracy, close to the 96% seen among primary care physicians. Safety scores jumped from 89% to 95%, showing real improvement.

However, triage remains problematic. As AI researcher Rohan Paul noted, "Naming a likely disease is easy for LLMs, but making safe urgency calls is not." Most models over-triaged, recommending more urgent care than a case required, which could overwhelm emergency departments. Worse, some under-triaged cases that needed immediate attention. The same structured prompting that improved diagnosis also raised the over-triage rate from 53% to 65%.
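To make the over-triage and under-triage terms concrete, here is a minimal Python sketch that treats the four urgency categories as an ordered scale and counts how often a model's recommendation lands above or below the reference label. The names `Urgency` and `triage_rates` are hypothetical, and dividing by all cases is an assumption for illustration; the study may define its rates differently.

```python
from enum import IntEnum


class Urgency(IntEnum):
    """The article's four urgency categories, ordered from least to most urgent."""
    SELF_CARE = 0
    ONE_WEEK = 1
    ONE_DAY = 2
    EMERGENT = 3


def triage_rates(pairs):
    """Return over-triage, under-triage, and exact-match rates.

    pairs: list of (reference, predicted) Urgency values.
    Assumption: every rate uses the total number of cases as its denominator.
    """
    total = len(pairs)
    if total == 0:
        raise ValueError("at least one case is required")
    # Over-triage: the model recommends more urgent care than the reference.
    over = sum(1 for ref, pred in pairs if pred > ref)
    # Under-triage: the model recommends less urgent care than the reference.
    under = sum(1 for ref, pred in pairs if pred < ref)
    return {
        "over_triage": over / total,
        "under_triage": under / total,
        "exact": (total - over - under) / total,
    }


# Made-up example cases, not data from the study.
cases = [
    (Urgency.EMERGENT, Urgency.EMERGENT),   # exact match
    (Urgency.SELF_CARE, Urgency.ONE_DAY),   # over-triage
    (Urgency.ONE_WEEK, Urgency.EMERGENT),   # over-triage
    (Urgency.EMERGENT, Urgency.ONE_DAY),    # under-triage
]
rates = triage_rates(cases)
```

The two error types are tracked separately because they carry different costs: over-triage consumes emergency resources, while under-triage can delay care for a patient who needs it now.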

Why This Matters

The gap points to a basic limitation: recognizing patterns in text is not the same as assessing risk under uncertainty. Real doctors weigh probabilities, consider patient history, and balance consequences. These are judgment calls that text-trained AI can't yet handle reliably.

Future work needs AI systems that express confidence levels, flag unclear cases for human review, and balance safety against resource constraints. Testing must move beyond single questions into conversations mirroring real consultations.
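One way to picture "flag unclear cases for human review" is a simple confidence threshold. The sketch below is purely illustrative: `TriageOutput`, `route`, the `"human-review"` label, and the 0.8 threshold are all hypothetical, and it assumes the model can supply a usable confidence score, which the study does not claim.

```python
from dataclasses import dataclass

# Urgency labels taken from the article's four categories.
URGENCY_LEVELS = ("self-care", "1-week", "1-day", "emergent")


@dataclass
class TriageOutput:
    urgency: str       # one of URGENCY_LEVELS
    confidence: float  # hypothetical self-reported score in [0, 1]


def route(output, threshold=0.8):
    """Return the model's urgency label, or defer to a human when unsure.

    Assumption: scores below `threshold` mark a case as unclear.
    """
    if output.urgency not in URGENCY_LEVELS:
        raise ValueError(f"unknown urgency level: {output.urgency!r}")
    if not 0.0 <= output.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if output.confidence < threshold:
        return "human-review"
    return output.urgency


confident = route(TriageOutput("1-day", 0.95))
unsure = route(TriageOutput("self-care", 0.40))
```

In practice the threshold would need to be tuned against the cost of each error type, since a low-confidence "self-care" call is riskier to accept than a low-confidence "emergent" one.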

Saad Ullah
