● In a recent tweet, Phil Beisel shared Tesla's AI chip development roadmap, and it's pretty wild. These chips are built specifically to run AI in real time, the kind that powers Full Self-Driving in Tesla's cars and the Optimus robot. They're also used in Tesla's data centers to run massive simulations and train better AI models.

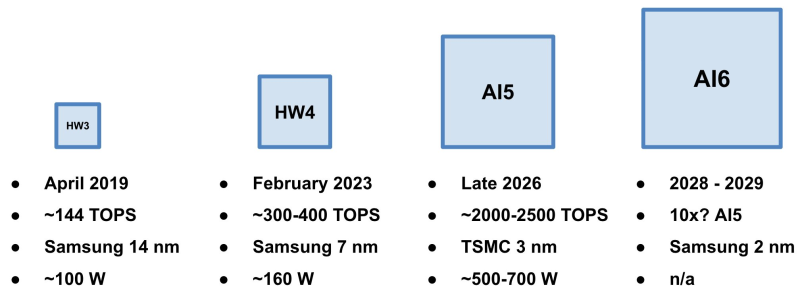

● Here's how the hardware has evolved: Tesla started with HW3 in April 2019 (~144 TOPS, Samsung 14nm), then moved to HW4 in early 2023 (~300–400 TOPS, Samsung 7nm). Next up is AI5 in late 2026 (~2,000–2,500 TOPS on TSMC 3nm, drawing an estimated 500–700 W), followed by AI6 around 2028–2029 (Samsung 2nm, estimated to be 10× faster than AI5).
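To put those jumps in perspective, here's a rough back-of-the-envelope calculation in Python. It's a sketch under stated assumptions, not an official spec: it takes the midpoint of each quoted range, treats peak TOPS as the only measure of speed, and the names (`CHIPS`, `midpoint`, `generation_ratios`) are purely illustrative.

```python
# Back-of-the-envelope generation-over-generation arithmetic using the
# figures quoted in the tweet. Assumptions: where a range is given, the
# midpoint is used; all numbers are approximate public estimates, not
# official Tesla specifications.

CHIPS = {
    # name: (low_tops, high_tops), in chronological order
    "HW3": (144, 144),
    "HW4": (300, 400),
    "AI5": (2000, 2500),
}

AI5_POWER_W = (500, 700)  # estimated AI5 power draw range, in watts


def midpoint(rng):
    """Return the midpoint of a (low, high) range."""
    low, high = rng
    return (low + high) / 2


def generation_ratios(chips):
    """Ratio of each chip's midpoint TOPS to the previous generation's."""
    names = list(chips)
    ratios = {}
    for prev, curr in zip(names, names[1:]):
        ratios[f"{curr}/{prev}"] = midpoint(chips[curr]) / midpoint(chips[prev])
    return ratios


if __name__ == "__main__":
    for pair, ratio in generation_ratios(CHIPS).items():
        print(f"{pair}: ~{ratio:.1f}x")
    # Peak TOPS divided by estimated power: a crude efficiency figure only.
    ai5_tops_per_watt = midpoint(CHIPS["AI5"]) / midpoint(AI5_POWER_W)
    print(f"AI5: ~{ai5_tops_per_watt:.2f} TOPS/W")
```

Running it prints roughly 2.4x for HW3 to HW4, 6.4x for HW4 to AI5, and about 3.75 TOPS/W for AI5. AI6 is left out because "10× faster" may not translate directly into a TOPS figure.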

● That performance boost is impressive, but it comes with trade-offs. These chips will draw far more power, run hotter, and depend heavily on Samsung and TSMC for manufacturing. A supply-chain disruption or geopolitical trouble could push Tesla's timeline back, especially for AI6, which relies on cutting-edge 2nm production.

● By designing its own chips, Tesla reduces its reliance on suppliers like Nvidia, potentially saving billions while building exactly the hardware it needs. AI5 and AI6 aren't just for cars, either: they're slated to handle cloud simulations, training workloads, and even edge computing for SpaceX's Starlink. That could turn Tesla into more than a car company; it could become a major AI hardware player that controls its own roadmap and costs.

● Tesla's roadmap shows it aims to become the world's biggest maker of AI-powered physical machines: robots and vehicles that think and act on their own. By combining robotics, cars, and distributed AI computing, Tesla is uniquely positioned as the race for efficient AI chips heats up. If it pulls this off, Tesla could shape the next generation of industrial AI.

Peter Smith
