⬤ A research team from Shanghai AI Laboratory, University of Science and Technology of China, and Peking University has unveiled UniPercept, a model meant to help AI see images the way people actually experience them. The question they're tackling: can AI move past the "what's in this picture" phase and start reasoning about how an image looks and feels visually?

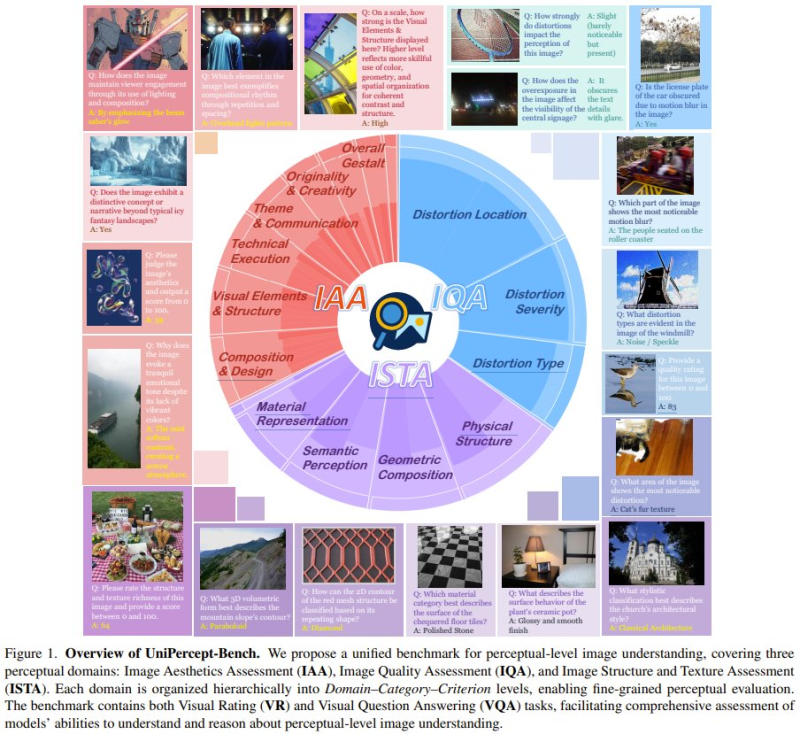

⬤ UniPercept is trained on four core perceptual angles: aesthetics, visual quality, structure, and texture. Instead of just attaching semantic labels to objects, it evaluates composition, visual balance, distortion levels, material appearance, and how space is organized in an image. To support this, the team built UniPercept-Bench, a unified benchmark designed specifically for perceptual-level image understanding.

⬤ UniPercept-Bench breaks down into three main domains: Image Aesthetics Assessment (IAA), Image Quality Assessment (IQA), and Image Structure and Texture Assessment (ISTA). Each domain is organized hierarchically into domain, category, and criterion levels, which allows fine-grained evaluation. The benchmark runs two types of tasks: Visual Rating (VR), where models score perceptual qualities, and Visual Question Answering (VQA), where they have to explain what they're seeing and why it looks that way.
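
To make that layout concrete, here is a minimal Python sketch of how a benchmark item could be represented. Only the three domain names and the two task types come from the description above; the class names, field names, `validate` helper, and the example category and criterion strings are hypothetical placeholders, not the team's actual schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Union


class Domain(Enum):
    """The three benchmark domains named in the article."""
    IAA = "Image Aesthetics Assessment"
    IQA = "Image Quality Assessment"
    ISTA = "Image Structure and Texture Assessment"


class Task(Enum):
    """The two task types named in the article."""
    VR = "Visual Rating"
    VQA = "Visual Question Answering"


@dataclass(frozen=True)
class Criterion:
    """One leaf of the domain -> category -> criterion hierarchy.

    The category and criterion strings are illustrative placeholders;
    the article does not list the real ones.
    """
    domain: Domain
    category: str
    criterion: str

    def path(self) -> str:
        return f"{self.domain.name}/{self.category}/{self.criterion}"


@dataclass
class BenchmarkItem:
    """A single hypothetical test item."""
    image_path: str
    task: Task
    criterion: Criterion
    prompt: str
    target: Union[float, str]  # numeric score for VR, text answer for VQA


def validate(item: BenchmarkItem) -> bool:
    """Check that the target type matches the task type."""
    if item.task is Task.VR:
        return isinstance(item.target, (int, float))
    return isinstance(item.target, str)


# Hypothetical example items (placeholder paths, names, and values).
balance = Criterion(Domain.IAA, "composition", "visual balance")
rating_item = BenchmarkItem("example.png", Task.VR, balance,
                            "Rate the visual balance of this image.", 3.5)
qa_item = BenchmarkItem("example.png", Task.VQA, balance,
                        "Why does this image feel balanced?",
                        "placeholder answer")
```

Keeping the criterion as its own object means the same hierarchy path can be shared by both a rating item and a question-answering item about the same image, which is one plausible way to compare the two task types per criterion.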

⬤ According to the team's results, UniPercept outperforms existing multimodal models on this benchmark, especially when it comes to explaining visual characteristics. The work points to where computer vision research is headed: less about what's in the frame, more about understanding how people actually perceive images.

Saad Ullah
