⬤ MAXS (Meta-Adaptive Exploration with LLM Agents) is a new framework for making AI agents plan ahead instead of reacting only to what's right in front of them. It gives agents foresight by simulating possible future actions and checking which paths stay stable before committing to a decision. That's a real shift from the usual "pick the best next step and hope it works out" method most agents use today.

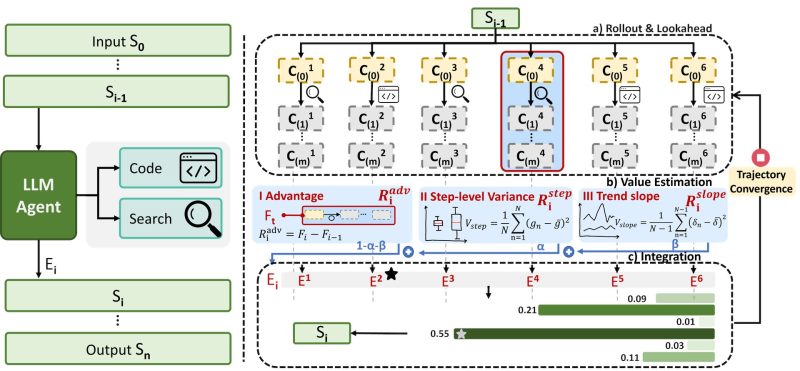

⬤ Here's how it works: at each decision point, the agent generates multiple possible action sequences, then plays each one out several steps into the future. The diagram shows each potential path being evaluated as it unfolds, letting the agent spot decisions that look good now but fall apart later. It's designed to stop that classic AI problem where grabbing the obvious choice early on paints you into a corner down the line.
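
To make the lookahead idea concrete, here is a minimal toy sketch, not the MAXS implementation. It assumes a tiny deterministic environment (positions on a number line) and enumerates every action sequence exhaustively, whereas a real agent would sample rollouts from an LLM. The names `STATE_VALUE`, `step`, `value`, `rollout_values`, and `best_first_action` are all hypothetical.

```python
from itertools import product

# Hypothetical toy world: the state is a position on a number line.
# Moving right looks better after one step, but leads to a dead end.
STATE_VALUE = {1: 3, 2: -5, 3: -5, -1: 1, -2: 4, -3: 10}
ACTIONS = (-1, 1)  # step left or step right


def step(state, action):
    """Deterministic transition: move one position left or right."""
    return state + action


def value(state):
    """Value of a state; positions not listed are worth 0."""
    return STATE_VALUE.get(state, 0)


def rollout_values(state, depth):
    """Play out every action sequence of length `depth` and record
    the value observed after each step along the way."""
    paths = {}
    for seq in product(ACTIONS, repeat=depth):
        current, trace = state, []
        for action in seq:
            current = step(current, action)
            trace.append(value(current))
        paths[seq] = trace
    return paths


def best_first_action(state, depth):
    """Return the first action of the sequence with the best final value."""
    paths = rollout_values(state, depth)
    best_seq = max(paths, key=lambda seq: paths[seq][-1])
    return best_seq[0]


greedy_choice = best_first_action(0, depth=1)     # looks one step ahead
lookahead_choice = best_first_action(0, depth=3)  # looks three steps ahead
```

With one step of lookahead the agent moves right, because that is the higher immediate value. With three steps it moves left, because the right-hand path ends in a low-value state. That is the "painted into a corner" problem in miniature.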

⬤ The framework judges these paths using a combination of three signals: advantage estimation, step-level variance, and trend slope. Basically, it's measuring whether a trajectory stays consistent and stable as it plays out. If a path shows wild swings or unstable patterns, MAXS filters it out before it becomes a problem. This matters most in scenarios where agents juggle multiple tools and need reasoning that holds up over time, not just for one quick move.
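
One way the three signals could be folded into a single score is sketched below. This is an illustrative assumption, not the formula MAXS uses: here advantage is the final value minus a baseline, variance is taken over step-to-step changes, slope is a least-squares fit of value against step index, and the weights `var_weight` and `slope_weight` are hypothetical.

```python
from statistics import pvariance


def trend_slope(values):
    """Least-squares slope of value against step index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def trajectory_score(values, baseline, var_weight=1.0, slope_weight=1.0):
    """Reward paths that end above the baseline and trend upward;
    penalize paths whose step-to-step changes swing widely."""
    advantage = values[-1] - baseline
    deltas = [b - a for a, b in zip(values, values[1:])]
    variance = pvariance(deltas) if deltas else 0.0
    return advantage - var_weight * variance + slope_weight * trend_slope(values)


# Two hypothetical paths that end at the same value.
steady = [1.0, 2.0, 3.0, 4.0]
jumpy = [1.0, 6.0, -2.0, 4.0]

steady_score = trajectory_score(steady, baseline=0.0)
jumpy_score = trajectory_score(jumpy, baseline=0.0)
```

Both paths finish at the same value, so a score based only on the endpoint would rank them equally. The variance penalty is what pushes the jumpy path far below the steady one, which is the kind of filtering described above.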

⬤ MAXS reflects where agent research is heading: less focus on nailing individual steps, more emphasis on whether the whole plan makes sense from start to finish. By prioritizing trajectory stability and convergence over instant correctness, it pushes toward AI agents that can handle complex environments requiring sustained reasoning and adaptive decision-making, without constantly second-guessing themselves or veering off track.

Peter Smith
