⬤ Google's AI hardware strategy is gaining serious traction as Tensor Processing Units see wider adoption across major cloud workloads. TPUs represent Google's most successful in-house chip development effort, with usage expanding rapidly through Google Cloud infrastructure. Speculation is also growing that Google could start selling TPUs directly to customers, a move that could shake up current market dynamics.

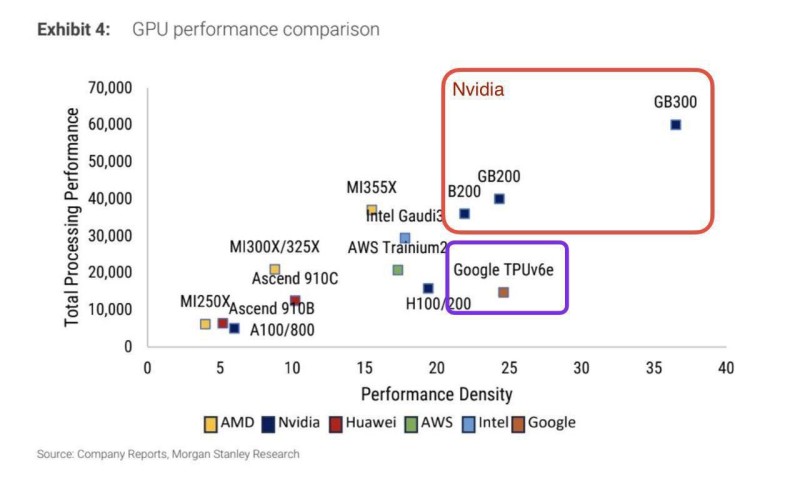

⬤ Newly released performance charts show Google's TPUv6e holding its own on efficiency. The comparison stacks chips from Nvidia, Google, AMD, Intel, Huawei, and AWS side by side. Nvidia's GB200 and GB300 still lead the raw performance rankings, but Google's TPUv6e lands in a sweet spot that balances strong efficiency with solid throughput. That positioning makes sense: Google isn't trying to outmuscle Nvidia's flagship processors. It is optimizing for large-scale training within its own ecosystem.

⬤ The scale involved is massive. In October, Anthropic and Google announced plans to deploy up to one million TPUs for training future Claude models. That is a clear signal that top-tier AI developers see real value in Google's hardware approach. As TPU integration deepens across Google Cloud, expect more organizations to fold Google's chips into their training infrastructure, especially for workloads that demand serious scale.

⬤ Competition in AI hardware is heating up fast, and demand for training capacity keeps climbing. Google's ability to push TPU adoption further could reshape cloud market dynamics, shift the performance-versus-efficiency conversation, and influence how companies think about long-term infrastructure strategy across the AI sector.

Usman Salis
