⬤ A gradient-free training method called EGGROLL is drawing attention for its potential to dramatically accelerate AI model development. Built on evolution strategies rather than backpropagation, the technique offers an alternative to the gradient-based training that dominates the field today. Performance data released alongside the announcement shows substantial gains that have researchers talking.

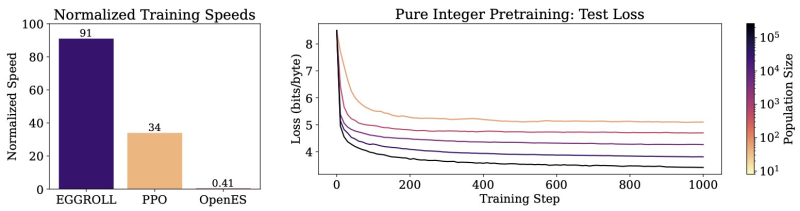

⬤ The numbers are striking. EGGROLL reaches a normalized training speed of 91, well ahead of PPO at 34 and far ahead of OpenES at just 0.41. The method delivers 100× higher training throughput than standard evolution strategies while also cutting memory requirements by 100×. It retains 91 percent of inference speed and supports pure integer training. Test results show that larger population sizes consistently reach lower loss over extended training runs, which supports claims of real-world usefulness.
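Because the results compare EGGROLL with standard evolution strategies such as OpenES, a small sketch of a plain evolution-strategies update may help show what "gradient-free" and "population size" mean here. The code below is a simplified OpenES-style step (antithetic Gaussian perturbations, no fitness shaping) written for illustration only. It is not EGGROLL's algorithm, and the helpers `es_step` and `perturbation_floats` are hypothetical names. Each update uses only fitness scores of perturbed parameter copies, and averaging over a larger population lowers the variance of the estimated search direction. The second helper illustrates, as an assumption not taken from the article, one way ES memory can shrink: storing each population member's weight perturbation as a rank-r factor pair instead of a full noise matrix.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def es_step(
    params: Vector,
    fitness: Callable[[Vector], float],
    rng: random.Random,
    pop_size: int = 64,
    sigma: float = 0.1,
    lr: float = 0.05,
) -> Vector:
    """One simplified OpenES-style update (hypothetical helper).

    No gradients are computed: the update uses only fitness scores of
    randomly perturbed copies of `params`.
    """
    if pop_size < 2 or pop_size % 2:
        raise ValueError("pop_size must be an even number >= 2")
    dim = len(params)
    grad_est = [0.0] * dim
    pairs = pop_size // 2
    for _ in range(pairs):
        # Antithetic sampling: evaluate params + sigma*eps and params - sigma*eps.
        eps = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        plus = [p + sigma * e for p, e in zip(params, eps)]
        minus = [p - sigma * e for p, e in zip(params, eps)]
        diff = fitness(plus) - fitness(minus)
        for i in range(dim):
            grad_est[i] += diff * eps[i]
    # Monte Carlo estimate of the gradient of the Gaussian-smoothed fitness.
    scale = 1.0 / (pop_size * sigma)
    # Gradient ascent on that estimate: move toward higher fitness.
    return [p + lr * scale * g for p, g in zip(params, grad_est)]


def perturbation_floats(rows: int, cols: int, rank: Optional[int] = None) -> int:
    """Floats stored per population member to perturb one rows x cols matrix.

    rank=None: a full Gaussian noise matrix (rows * cols values).
    rank=r:    an assumed low-rank factor pair A (rows x r) and B (cols x r),
               with perturbation A @ B.T, needing r * (rows + cols) values.
    """
    if rank is None:
        return rows * cols
    return rank * (rows + cols)


if __name__ == "__main__":
    rng = random.Random(0)
    target = [1.0, -2.0, 0.5]

    def fitness(x: Vector) -> float:
        # Higher is better; the maximum (0.0) is reached at `target`.
        return -sum((xi - ti) ** 2 for xi, ti in zip(x, target))

    params = [0.0, 0.0, 0.0]
    for _ in range(200):
        params = es_step(params, fitness, rng)
    print("final params:", [round(p, 3) for p in params])
    print("full-rank floats:", perturbation_floats(4096, 4096))
    print("rank-1 floats:", perturbation_floats(4096, 4096, rank=1))
```

For a 4096 × 4096 weight matrix, a full perturbation needs 16,777,216 floats per population member, while a rank-1 factor pair needs 8,192. This arithmetic only illustrates how the storage scales; it is not a reproduction of the reported 100× memory figure.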

⬤ This fits right into the current push for faster, leaner AI training as models grow larger and more demanding. NVIDIA remains at the center of these conversations because gains in training speed and memory use directly affect how developers use GPU infrastructure. The performance charts explain why EGGROLL quickly became a hot topic in research communities and technical forums.

⬤ EGGROLL matters because training efficiency is now one of the biggest bottlenecks in scaling AI systems. Methods that cut compute and memory demands can influence everything from hardware decisions to model architecture and AI economics overall. As training costs climb, approaches that work well with existing hardware or reduce compute pressure could shift expectations around development timelines, industry momentum, and market outlook.

Marina Lyubimova