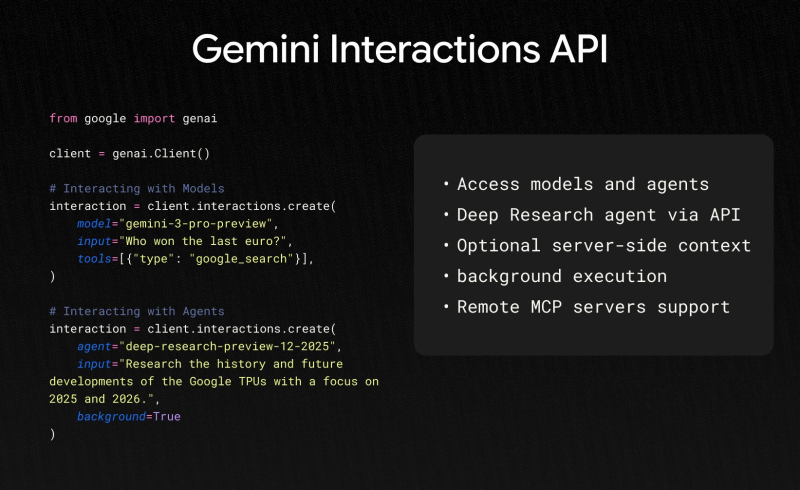

⬤ Google DeepMind has released its Gemini Interactions API in beta, positioning it as a single interface through which developers can work with both Gemini models and AI agents. The API is set to become the primary interface for agentic capabilities heading into 2026, giving developers one access point to Gemini models and the Deep Research agent. The goal is to make it simpler to build applications that combine model intelligence with autonomous research.

⬤ At launch, the API offers unified access to Gemini models and agents, optional server-side state management, background execution, and connectivity to remote Model Context Protocol (MCP) servers. Sample code from the announcement shows Gemini-3-Pro-Preview being queried with built-in tools such as Google Search, and a Deep Research agent running in the background while the server manages its context. Planned features include combining function calling, MCP and built-in tools (Google Search, File Search) in a single interaction, fine-grained streaming for function calls, multimodal function calling, and new Gemini-powered agents rolling out across the GOOGL ecosystem.

⬤ With server-side context handling, the state of long-running interactions is kept on the server rather than on the client, while background execution lets tasks keep running independently of the calling application. Remote MCP server support widens the range of tools and data sources agents can securely tap into. Together, these capabilities are meant to make Gemini more adaptable for research workflows, enterprise automation and knowledge-heavy applications running on GOOGL's AI infrastructure.
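Background execution is typically consumed with a start-then-poll pattern, and the Python sketch below assumes that pattern. `BackgroundJobServer`, `start`, `status`, `result` and `wait_for` are hypothetical names, not the real API, and a short sleep stands in for a long-running agent such as Deep Research. A real client would make network calls rather than read in-process state.

```python
import threading
import time
import uuid

# Hypothetical sketch of background execution: the server runs a long task
# on its own and the client polls by ID. Not the real Gemini API surface.


class BackgroundJobServer:
    def __init__(self) -> None:
        self._results: dict[str, str] = {}
        self._done: dict[str, threading.Event] = {}

    def start(self, query: str) -> str:
        job_id = uuid.uuid4().hex
        done = threading.Event()
        self._done[job_id] = done

        def run() -> None:
            time.sleep(0.2)  # stand-in for a long-running research agent
            self._results[job_id] = f"report on: {query}"
            done.set()  # signal completion only after the result is stored

        threading.Thread(target=run, daemon=True).start()
        return job_id  # returns immediately; work continues in the background

    def status(self, job_id: str) -> str:
        return "completed" if self._done[job_id].is_set() else "in_progress"

    def result(self, job_id: str) -> str:
        return self._results[job_id]


def wait_for(server: BackgroundJobServer, job_id: str,
             interval: float = 0.05, timeout: float = 5.0) -> str:
    # Poll until the job finishes or the timeout expires.
    deadline = time.monotonic() + timeout
    while server.status(job_id) != "completed":
        if time.monotonic() > deadline:
            raise TimeoutError(job_id)
        time.sleep(interval)
    return server.result(job_id)


server = BackgroundJobServer()
job = server.start("history of TPUs")
print(server.status(job))     # may still report "in_progress" at this point
print(wait_for(server, job))  # report on: history of TPUs
```

A fixed polling interval keeps the sketch simple; a production client might back off between polls or use streaming if the service offers it.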

⬤ This API expansion marks a strategic shift toward agent-centric platforms where models coordinate tools, search systems and structured workflows instead of just generating content. As AI adoption picks up globally, unified APIs like this could streamline integration, broaden developer reach and fuel innovation around the Gemini ecosystem and GOOGL's broader tech stack.

Eseandre Mordi