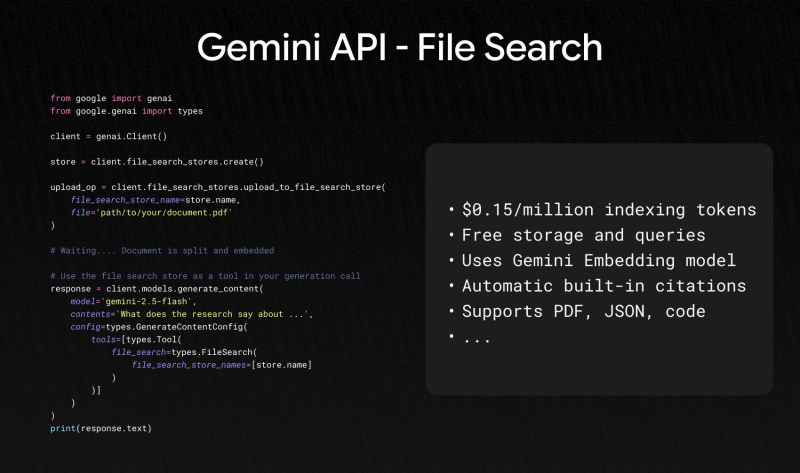

● Google just rolled out Gemini File Search, a fully managed RAG tool that lets developers ground Gemini models in their own private data. According to Philipp Schmid, the system makes it much easier to build accurate, verifiable AI responses by connecting models directly to user documents. Google AI Developers called it "simple, integrated and affordable," highlighting that it's baked right into the Gemini API without needing separate infrastructure.

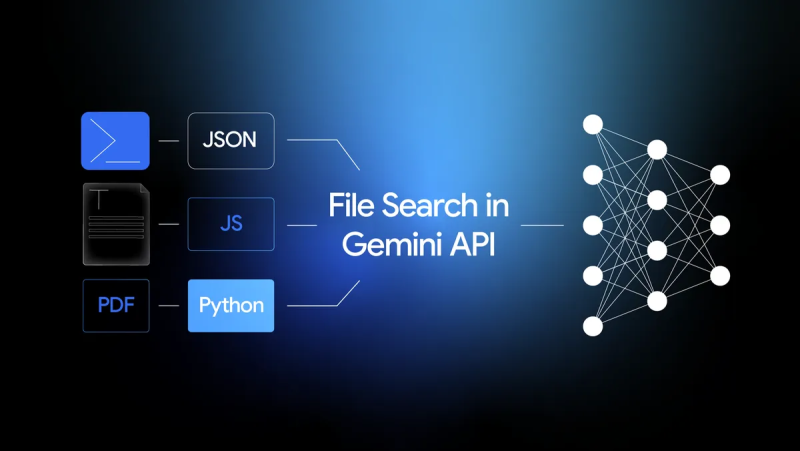

● The pricing is straightforward: indexing costs $0.15 per million tokens, while storage and query-time embedding are free. You get 1 GB of storage on the free tier, then scale up through 10 GB, 100 GB, or 1 TB tiers depending on your needs. File Search works with PDFs, DOCX, TXT, JSON, and most programming files, so you can ground responses across docs, research, or entire codebases. It uses Gemini's embedding model for vector search and automatically cites which documents it pulled from, which is crucial for teams that need transparent, auditable AI.
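To put the indexing price in perspective, here is a rough back-of-the-envelope estimate in Python. It uses only the $0.15 per million tokens figure quoted above; the function name and the 20-million-token corpus size are made up for illustration, and actual billing may differ.

```python
# Indexing price quoted in the article: $0.15 per one million tokens.
INDEXING_PRICE_PER_MILLION_TOKENS_USD = 0.15


def estimate_indexing_cost_usd(total_tokens: int) -> float:
    """Estimate the one-time indexing cost for a corpus of `total_tokens` tokens.

    Hypothetical helper for illustration only; storage and query-time
    embedding are described as free, so they are not included here.
    """
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * INDEXING_PRICE_PER_MILLION_TOKENS_USD


# Assumed example: a documentation set of roughly 20 million tokens.
corpus_tokens = 20_000_000
print(f"Estimated indexing cost: ${estimate_indexing_cost_usd(corpus_tokens):.2f}")
```

At that rate, even a fairly large corpus costs only a few dollars to index once.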

● What makes this launch significant is how much it simplifies the RAG pipeline. The system handles document splitting, embedding, indexing, and storage automatically. It can combine results from parallel queries in under two seconds, cutting out tons of engineering work. Once it's set up, File Search plugs straight into Gemini generation calls through a single API setting.
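To show what "splitting, embedding, indexing" means in practice, here is a deliberately tiny Python sketch of those steps. It is not Google's implementation and does not call the Gemini API: the word-count "embedding" is a toy stand-in for a real embedding model, and every function name and sample document here is hypothetical.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, max_words=40):
    """Split a document into chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy bag-of-words vector; a real system would use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents, max_words=40):
    """Chunk and embed every document, remembering which file each chunk came from."""
    index = []
    for name, text in documents.items():
        for chunk in split_into_chunks(text, max_words):
            index.append((name, chunk, embed(chunk)))
    return index


def search(index, query, top_k=2):
    """Return the top_k (source_name, chunk) pairs most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(query_vector, entry[2]), reverse=True)
    return [(name, chunk) for name, chunk, _ in ranked[:top_k]]


# Made-up sample documents for illustration.
docs = {
    "pricing.txt": "Indexing is billed per million tokens. Storage is free of charge.",
    "formats.txt": "Supported formats include PDF, DOCX, TXT and JSON files.",
}
index = build_index(docs)
results = search(index, "Which file formats are supported?", top_k=1)
for source, chunk in results:
    print(f"[{source}] {chunk}")
```

Keeping the source file name next to each chunk is what makes citations possible: whatever text gets passed to the model can be traced back to the document it came from. A managed tool takes this plumbing off the developer's plate.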

● This release signals where enterprise AI is headed. By making RAG cheaper, faster, and more transparent with built-in citations, Google is pushing Gemini as the go-to platform for trustworthy AI applications. As more companies demand systems that cite sources and work securely with private data, File Search could become a core part of how businesses use Gemini.

Usman Salis
