Leadership in AI video generation has long been seen as the preserve of trillion-dollar tech giants with seemingly unlimited resources. But a recently updated global leaderboard is challenging that assumption. A compact research team of roughly 50 people has climbed into the top ranks of the world's best AI video generators, going head-to-head with industry heavyweights and holding its ground.

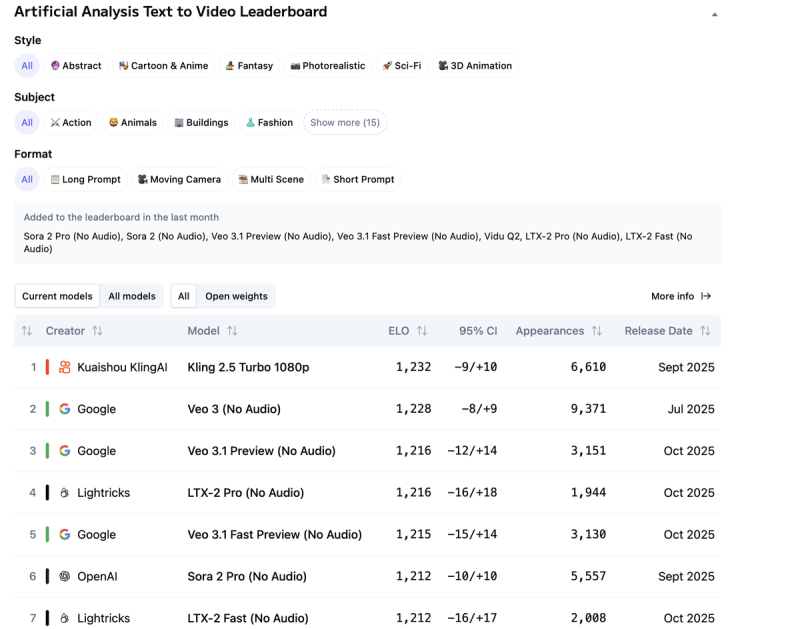

A recent update to the Artificial Analysis Text-to-Video Leaderboard has sparked debate about whether massive compute power is really the only route to building world-class AI models.

Small Team Breaks Into Elite Rankings

According to trader Chubby, the leaderboard reveals an unexpected shift in who's leading the text-to-video space. While Google, Kuaishou, and OpenAI still dominate, one standout model comes from Lightricks, whose team is dramatically smaller than that of a typical AI mega-lab.

What makes this particularly striking is that Lightricks competes against companies with orders of magnitude more computing resources, yet its LTX-2 Pro model ties Google's third-place Veo 3.1 Preview at an ELO score of 1,216.

The current top performers include:

- Kuaishou KlingAI – Kling 2.5 Turbo 1080p (ELO: 1,232 | Release: Sep 2025 | Appearances: 6,610)

- Google – Veo 3 No Audio (ELO: 1,228 | Release: Jul 2025 | Appearances: 9,371)

- Google – Veo 3.1 Preview No Audio (ELO: 1,216 | Release: Oct 2025 | Appearances: 3,151)

- Lightricks – LTX-2 Pro No Audio (ELO: 1,216 | Release: Oct 2025 | Appearances: 1,944)

- OpenAI – Sora 2 Pro No Audio (ELO: 1,212 | Release: Sep 2025 | Appearances: 5,557)

This is exactly the kind of performance most people assumed only hyperscale labs could pull off.

Why This Actually Matters

This isn't just about bragging rights on a leaderboard. It points to something bigger: elite performance in AI video generation is no longer locked behind the doors of trillion-dollar companies. For years, the industry narrative centered on billion-dollar GPU clusters, massive proprietary datasets, and ever-larger model architectures. But these rankings suggest that smart innovation and efficiency can go toe-to-toe with raw computing power.

What's becoming clear is that talent density now matters as much as compute density. Smaller teams can move faster, iterate more aggressively, and experiment with novel architectures, often achieving outsized results relative to their resources. Video generation tools and training strategies are improving so quickly that the barrier to entry for high-quality models keeps dropping. And the competition is heating up: OpenAI, Google, and Kuaishou no longer have the field to themselves, as smaller labs show up with comparable technical sophistication.

A Pattern Emerging Across AI

This trend isn't unique to video generation. Across the AI industry, startups are releasing efficient multimodal models that rival products from much larger labs. Open-weights video models are catching up to early proprietary systems, and small research teams are making breakthroughs in diffusion-based video generation without billion-dollar infrastructure behind them. As one researcher recently put it, "We're entering the era where smarter beats bigger."

Saad Ullah