⬤ Anthropic has published a guide to building more efficient AI agents, and it's getting a lot of attention in the developer community. It tackles three of the biggest pain points in agent development: massive token bills, sluggish performance, and tangled tool workflows. The timing is good, since many developers are struggling with bloated context windows and increasingly messy tool-calling setups.

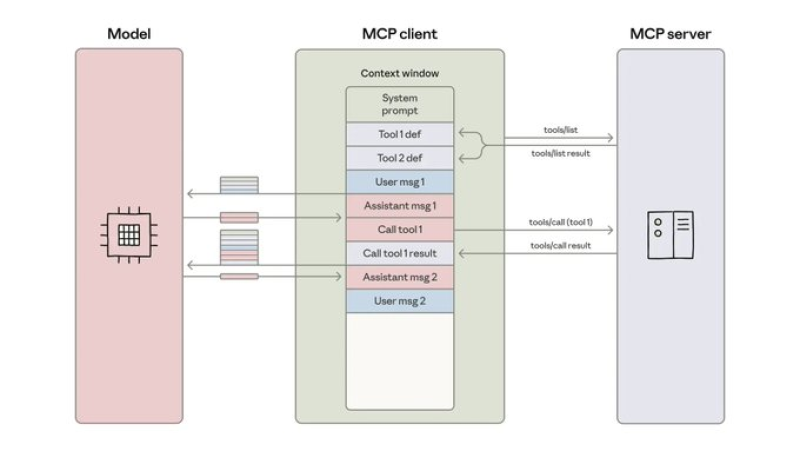

⬤ Here's the core problem they're solving: many current agent setups load every MCP (Model Context Protocol) tool definition into the model's context right from the start, which can burn through 150,000+ tokens before any real work gets done. Anthropic's solution? Treat MCP servers like code libraries. Instead of dumping everything into the model's context, agents import only the tools they need as TypeScript APIs and call them programmatically. The reported results are dramatic: token usage drops by over 98%, from roughly 150k down to around 2k per workflow.
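
To make the "tools as code" idea concrete, here is a minimal sketch of what one typed tool wrapper might look like. Every name in it (`ToolTransport`, `getDocument`, the `docs__get_document` tool ID) is a hypothetical stand-in rather than Anthropic's actual API, and the transport is a synchronous stub for brevity where a real one would be asynchronous. The helper at the end just checks the 150k-to-2k arithmetic.

```typescript
// Hypothetical names throughout: ToolTransport, getDocument, and the
// "docs__get_document" tool ID are illustrative assumptions, not a real API.

// Forwards one tool call to an MCP server. It is injected so the wrapper can
// run against a stub; a real transport would be asynchronous.
type ToolTransport = (toolName: string, input: object) => unknown;

interface GetDocumentInput {
  documentId: string;
}

interface GetDocumentResult {
  content: string;
}

// One small typed function per tool: the agent imports only the functions it
// needs rather than having every tool definition loaded into its context.
function getDocument(
  transport: ToolTransport,
  input: GetDocumentInput,
): GetDocumentResult {
  return transport("docs__get_document", input) as GetDocumentResult;
}

// Stub transport standing in for a real MCP client.
const stubTransport: ToolTransport = (toolName, input) => {
  const { documentId } = input as GetDocumentInput;
  return { content: `contents of ${documentId} via ${toolName}` };
};

// Percentage of tokens saved when context shrinks from `before` to `after`.
function tokenReduction(before: number, after: number): number {
  return ((before - after) / before) * 100;
}

const doc = getDocument(stubTransport, { documentId: "abc123" });
console.log(doc.content);
console.log(tokenReduction(150000, 2000).toFixed(1)); // "98.7"
```

Going from 150,000 to 2,000 tokens works out to a reduction of about 98.7%, which is consistent with the "over 98%" figure.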

⬤ Ignoring these optimizations isn't just wasteful, it's risky. Teams that stick with old patterns face mounting costs, slower agents, and workflows that break during complex tool chains. For startups and smaller teams, these issues can be deal-breakers that price them out of building agents at all. Anthropic recommends three things to keep agents lean: progressive tool discovery (loading tool definitions only when they're needed), filtering data in code before it reaches the model, and using native control flow such as loops and conditionals instead of chaining individual tool calls through the model.
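
The three recommendations can be sketched roughly as follows. This is an illustration under stated assumptions, not code from the guide: the in-memory `registry`, the tool names, and the `OrderRow` shape are all made up for the example.

```typescript
// Hypothetical sketch: the registry, tool names, and row shape are assumptions.

interface ToolSummary {
  name: string;
  description: string;
}

const registry: ToolSummary[] = [
  { name: "sheets__get_rows", description: "Read rows from a spreadsheet" },
  { name: "crm__update_record", description: "Update a customer record" },
  { name: "mail__send", description: "Send an email message" },
];

// Progressive discovery: return only the tools matching a query, so the agent
// loads a handful of definitions instead of the full catalogue.
function searchTools(query: string): ToolSummary[] {
  const q = query.toLowerCase();
  return registry.filter(
    (t) =>
      t.name.toLowerCase().includes(q) ||
      t.description.toLowerCase().includes(q),
  );
}

interface OrderRow {
  id: number;
  status: "pending" | "shipped";
}

// Stand-in for a large tool result that stays in the execution environment.
function fetchAllRows(): OrderRow[] {
  return Array.from(
    { length: 10000 },
    (_, i): OrderRow => ({ id: i, status: i % 1000 === 0 ? "pending" : "shipped" }),
  );
}

// Filter and summarise in code; only this small result would reach the model.
function pendingSummary(rows: OrderRow[]): { pendingCount: number; firstIds: number[] } {
  const pending = rows.filter((r) => r.status === "pending");
  return {
    pendingCount: pending.length,
    firstIds: pending.slice(0, 3).map((r) => r.id),
  };
}

const matches = searchTools("rows");
const summary = pendingSummary(fetchAllRows());

// Native control flow: an ordinary loop handles every pending row without a
// round trip through the model for each one.
const processed: number[] = [];
for (const row of fetchAllRows()) {
  if (row.status !== "pending") continue;
  processed.push(row.id); // a real agent might call another tool here
}

console.log(matches.map((t) => t.name), summary, processed.length);
```

In this sketch the model would see one matching tool and a three-field summary rather than three tool definitions and 10,000 rows.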

⬤ There's a privacy win here too. Since sensitive data can stay in the execution environment without entering the model's context, your secrets actually stay secret. The guide also shows how agents can save their state, pause and resume tasks, and build up libraries of reusable skills that grow over time.
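
One way to picture the privacy and state-saving points is the sketch below. It assumes a simple placeholder scheme (`[EMAIL_1]`, `[EMAIL_2]`, ...) and an in-memory checkpoint format; the `PiiVault` class, the `Checkpoint` shape, and the email regex are illustrative choices, not something taken from the guide.

```typescript
// Hypothetical sketch: the placeholder format, class name, and checkpoint
// shape are assumptions made for illustration.

class PiiVault {
  private readonly byToken = new Map<string, string>();
  private readonly byValue = new Map<string, string>();

  // Replace each email address with a stable placeholder such as [EMAIL_1],
  // so the model only ever sees the placeholder.
  tokenize(text: string): string {
    return text.replace(/[\w.+-]+@[\w-]+(\.[\w-]+)+/g, (email) => {
      let token = this.byValue.get(email);
      if (token === undefined) {
        token = `[EMAIL_${this.byValue.size + 1}]`;
        this.byValue.set(email, token);
        this.byToken.set(token, email);
      }
      return token;
    });
  }

  // Restore real values just before data is handed to another tool.
  detokenize(text: string): string {
    return text.replace(/\[EMAIL_\d+\]/g, (token) => this.byToken.get(token) ?? token);
  }
}

interface Checkpoint {
  step: number;
  completedIds: number[];
}

// Serialise progress so a paused task can resume where it stopped. A real
// agent would likely write this string to a file in its workspace.
function saveCheckpoint(cp: Checkpoint): string {
  return JSON.stringify(cp);
}

function resumeFrom(saved: string): Checkpoint {
  return JSON.parse(saved) as Checkpoint;
}

const vault = new PiiVault();
const raw = "Contact ana@example.com or bob@example.org, then ana@example.com again.";
const masked = vault.tokenize(raw);
const restored = vault.detokenize(masked);
const resumed = resumeFrom(saveCheckpoint({ step: 2, completedIds: [7, 9] }));

console.log(masked);
console.log(resumed.step, resumed.completedIds);
```

The mapping between placeholders and real addresses lives only inside `PiiVault`, which is the part that would stay in the execution environment.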

⬤ As AI agents get more sophisticated, Anthropic's blueprint offers a practical roadmap. By combining aggressive token optimization, smarter tool handling, better privacy, and reusable components, they're sketching out what the next wave of AI agents should look like—powerful but actually affordable to run.

Usman Salis
