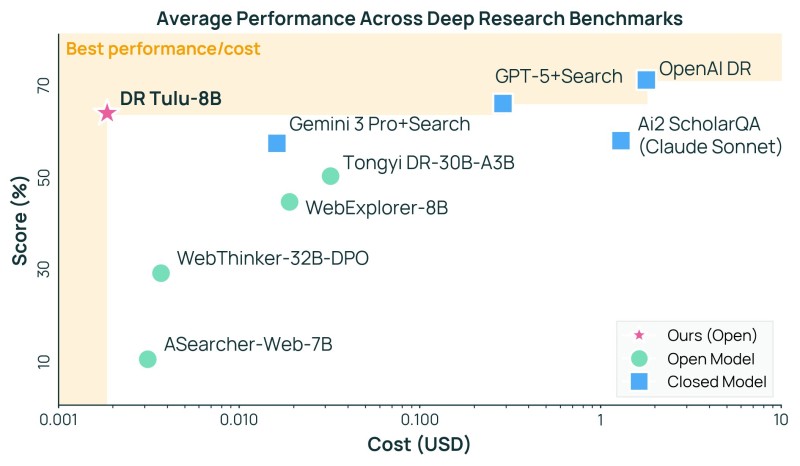

⬤ The Allen Institute for AI has released Deep Research Tulu, a fully open framework for long-form reasoning and structured research. Its headline model is DR Tulu-8B, a new reinforcement-learning checkpoint that sits at the sweet spot between performance and cost. Benchmark data shows DR Tulu-8B beating other open models, including the larger Tongyi DR-30B and the similarly sized WebExplorer-8B, while matching OpenAI DR and Gemini 3 Pro+Search at a much lower price point.

⬤ The DR Tulu-8B paper is now on arXiv, alongside open code and a lightweight CLI demo that is cheap to run. Deep Research Tulu gives users the tools to train agents that plan, search, synthesize, and cite across multiple sources, putting expert-level research workflows within wider reach. In the benchmark chart, DR Tulu-8B scores near 70% accuracy while its cost is among the lowest shown, which underlines the efficiency of the updated checkpoint.

⬤ The data also shows DR Tulu-8B outpacing several open models (WebThinker-32B-DPO, ASearcher-Web-7B, and Tongyi DR-30B-A3B), which either fall short on accuracy or cost more to run. Meanwhile, closed systems like OpenAI DR, GPT-5+Search, and Ai2 ScholarQA (Claude Sonnet) sit far to the right on the cost axis, which makes the open-weight model's affordability stand out. A star marker in the chart's "Best performance/cost" zone highlights DR Tulu-8B as an unusually efficient choice for deep-research work.

⬤ The strong showing of Deep Research Tulu and DR Tulu-8B signals a real shift toward open systems challenging proprietary long-context models. As efficiency and capability converge, the line between open and closed research tools keeps moving, and that is reshaping how advanced reasoning systems are adopted across academic, tech, and business settings.

Peter Smith
