● Jeff Huber recently welcomed new empirical research on embeddings in code retrieval, saying "finally someone did the empirical work on embeddings in code retrieval." He pointed out that with semantic retrieval, users "require fewer iterations to arrive at a correct solution," which shortens the back-and-forth in a coding workflow. He added that he is looking forward to seeing data on speed improvements next.

● The research comes from Cursor's team, who tested how semantic search affects the accuracy of AI models in software development. As Cursor explained, "semantic search improves our agent's accuracy across all frontier models, especially in large codebases where grep alone falls short." This matters because keyword tools such as grep match literal text, so they can miss relevant code that uses different names from the ones in the query.
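
To make the grep-versus-semantic distinction concrete, here is a minimal Python sketch. It is a toy illustration only, not Cursor's implementation: the `CONCEPTS` lexicon, the `SNIPPETS` files, and all function names are hypothetical, and the hand-written lexicon stands in for a learned embedding model.

```python
import math
import re

# Hypothetical toy lexicon mapping words to a coarse "concept".
# A real system would use a learned embedding model instead.
CONCEPTS = {
    "login": "auth", "verify": "auth", "credentials": "auth", "password": "auth",
    "draw": "graphics", "chart": "graphics", "plot": "graphics",
}
AXES = sorted(set(CONCEPTS.values()))  # one vector dimension per concept

# Hypothetical snippets standing in for files in a codebase.
SNIPPETS = {
    "auth.py": "def verify_credentials(user, password): ...",
    "render.py": "def draw_chart(points, axes): ...",
}


def tokenize(text):
    """Split text into lowercase alphabetic words (underscores act as separators)."""
    return re.findall(r"[a-z]+", text.lower())


def keyword_search(query):
    """grep-style search: a file matches only if a query word appears literally."""
    terms = tokenize(query)
    return [name for name, code in SNIPPETS.items()
            if any(term in code.lower() for term in terms)]


def embed(text):
    """Toy embedding: count how many words fall under each concept."""
    vec = [0.0] * len(AXES)
    for tok in tokenize(text):
        concept = CONCEPTS.get(tok)
        if concept is not None:
            vec[AXES.index(concept)] += 1.0
    return vec


def cosine(a, b):
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query):
    """Rank files by similarity to the query, dropping files with zero similarity."""
    q = embed(query)
    scored = [(cosine(q, embed(code)), name) for name, code in SNIPPETS.items()]
    return [name for score, name in sorted(scored, reverse=True) if score > 0]


query = "login check"
print(keyword_search(query))   # [] because neither word appears literally
print(semantic_search(query))  # ['auth.py'] because "login" shares a concept with the code
```

The query never mentions `verify_credentials`, so the literal match finds nothing, while the similarity-based ranking still surfaces the authentication code.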

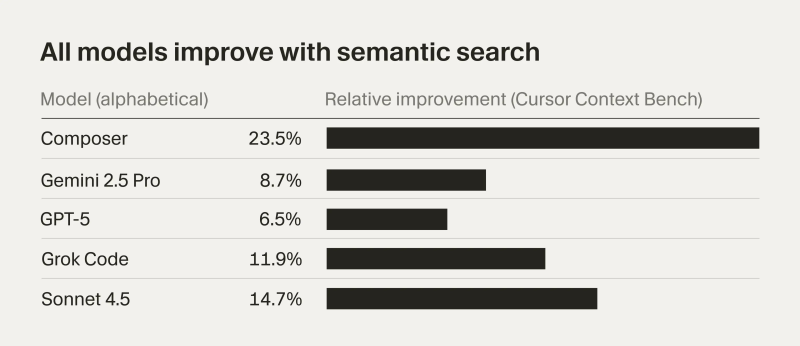

● The benchmark results show gains for every model tested: Composer saw the biggest boost at 23.5%, followed by Sonnet 4.5 at 14.7%, Grok Code at 11.9%, Gemini 2.5 Pro at 8.7%, and GPT-5 at 6.5%. The size of the gain varies by model, but every one of them improved with semantic search.

● For developers working in large, complex codebases, this means finding relevant functions faster, getting better context, and spending less time fixing mistakes. Both Jeff Huber's comments and Cursor's findings suggest that semantic search is becoming essential for AI-powered coding tools, with measured gains for every frontier model tested.

Saad Ullah
