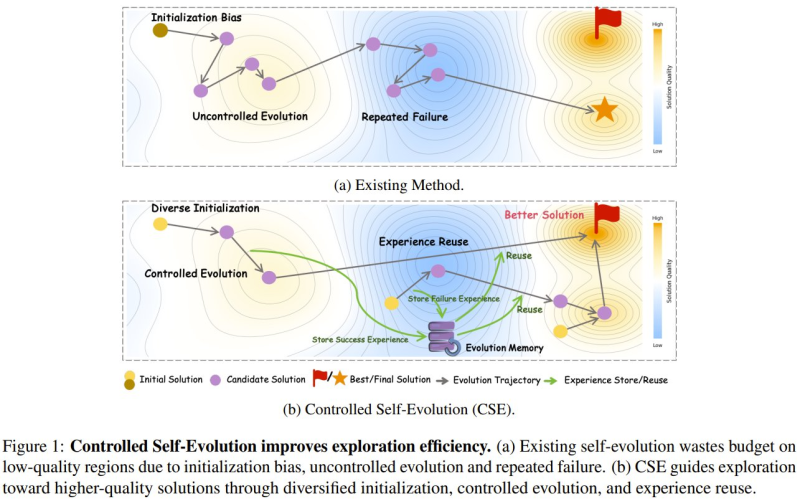

⬤ Researchers from Nanjing University, Peking University, Midea-AIRC, East China Normal University, Sun Yat-sen University, Renmin University of China, and QuantaAlpha have introduced a new approach to AI code optimization called Controlled Self-Evolution (CSE). It mirrors the way human engineers actually work: iterating, learning from mistakes, and improving over time. CSE rests on three core mechanics: generating diverse initial strategies, using feedback to guide mutations instead of making random changes, and banking both successes and failures from different coding challenges.
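To make those three mechanics concrete, here is a minimal toy sketch. It is not the researchers' implementation: a real system would have a language model rewrite programs and would measure them against tests, whereas this sketch stands in a tuple of integers for a candidate and a simple distance for its cost. Every name in it (`TARGET`, `cost`, `feedback`, `Memory`, `diverse_init`, `guided_mutation`, `evolve`) is hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass, field

# Toy stand-in for "how slow is this candidate program": lower is better.
# Assumption: a real system would execute generated code and profile it.
TARGET = (3, -1, 4)


def cost(candidate):
    return sum((c - t) ** 2 for c, t in zip(candidate, TARGET))


def feedback(candidate):
    """Profiler-like hint: the worst component and the direction that helps."""
    errors = [t - c for c, t in zip(candidate, TARGET)]
    idx = max(range(len(errors)), key=lambda i: abs(errors[i]))
    direction = 1 if errors[idx] > 0 else -1
    return idx, direction


@dataclass
class Memory:
    wins: list = field(default_factory=list)    # (parent, child) moves that helped
    losses: set = field(default_factory=set)    # (parent, child) moves that did not


def diverse_init(rng, n, dims=3, spread=10):
    """Mechanic 1: start from several different candidates, not one."""
    return [tuple(rng.randint(-spread, spread) for _ in range(dims))
            for _ in range(n)]


def guided_mutation(parent, memory):
    """Mechanic 2: change what the feedback points at, not a random component."""
    idx, direction = feedback(parent)
    child = list(parent)
    child[idx] += direction
    child = tuple(child)
    # Mechanic 3 (read side): skip a move already known to fail.
    return None if (parent, child) in memory.losses else child


def evolve(generations=40, population=4, seed=0):
    rng = random.Random(seed)
    memory = Memory()
    pop = diverse_init(rng, population)
    history = [min(cost(c) for c in pop)]
    for _ in range(generations):
        next_pop = []
        for parent in pop:
            child = guided_mutation(parent, memory)
            if child is not None and cost(child) < cost(parent):
                memory.wins.append((parent, child))   # bank the success
                next_pop.append(child)
            else:
                if child is not None:
                    memory.losses.add((parent, child))  # bank the failure
                next_pop.append(parent)
        pop = next_pop
        history.append(min(cost(c) for c in pop))
    return pop, history, memory


if __name__ == "__main__":
    final_pop, best_per_generation, mem = evolve()
    print("best cost per generation:", best_per_generation)
    print("banked wins:", len(mem.wins), "banked losses:", len(mem.losses))
```

The design choice worth noticing is that failures are stored as (parent, child) moves rather than as bare candidates, so a move that failed from one starting point does not block a different candidate from passing through the same state.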

⬤ The standout result is that CSE keeps getting better. In testing on the EffiBench-X benchmark, it outperformed all baseline models and continued to improve generation after generation without plateauing. That's a genuine step forward in algorithmic code optimization: AI that evolves its coding solutions systematically instead of relying on unguided trial and error.

⬤ What separates CSE from traditional AI methods is that it drops random mutation and unstructured trial and error. It uses strategic feedback to steer changes and learns from both successes and failures, which makes the whole optimization process cleaner and more effective. The code it generates isn't just faster to produce; it's higher quality too.

⬤ CSE could seriously reshape how AI handles software development. Faster code generation and optimization could mean shorter development cycles, fewer bugs, and leaner software systems. As AI keeps evolving, techniques like CSE will likely become standard tools in the kit, amplifying AI's impact across industries that depend on efficient code.

Peter Smith
