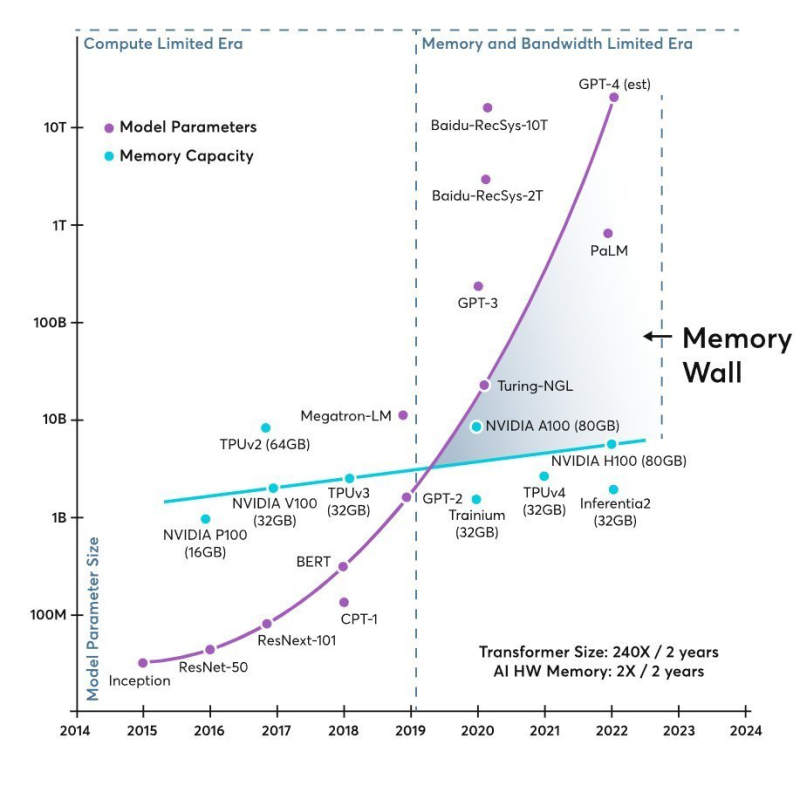

● A recent tweet from Rohan Paul has drawn attention to a fundamental challenge facing the AI industry: we're hitting the memory bandwidth wall. This hardware limitation is increasingly holding back progress in generative AI, and the numbers tell a striking story. Between 2018 and 2022, transformer model size grew roughly 410× every two years, while memory capacity per accelerator only doubled over each two-year span, so models outgrew the memory of a single chip by a wide and widening margin.
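
The size of that gap is easy to underestimate, so here is a minimal sketch that compounds the two growth rates. It assumes both trends follow fixed per-two-year multipliers (410× for model size, 2× for memory per accelerator, the figures quoted above), which is an idealized trend line rather than measured data for any particular model.

```python
# Minimal sketch: how fast model size outgrows per-accelerator memory
# if both follow fixed per-two-year multipliers (an idealized trend, not measured data).

MODEL_GROWTH_PER_PERIOD = 410.0   # model-size multiplier per two-year period (figure quoted above)
MEMORY_GROWTH_PER_PERIOD = 2.0    # memory-per-accelerator multiplier per two-year period


def compounded(rate_per_period: float, periods: int) -> float:
    """Total growth after `periods` periods at a constant per-period multiplier."""
    return rate_per_period ** periods


def capacity_gap(model_rate: float, memory_rate: float, periods: int) -> float:
    """Factor by which model size outgrows single-accelerator memory over `periods`."""
    return compounded(model_rate, periods) / compounded(memory_rate, periods)


if __name__ == "__main__":
    for periods in (1, 2):
        gap = capacity_gap(MODEL_GROWTH_PER_PERIOD, MEMORY_GROWTH_PER_PERIOD, periods)
        print(f"after {2 * periods} years, the gap widens by about {gap:,.0f}x")
```

Read literally, a model that fit on one accelerator at the start of a two-year period would, two years later, need the memory of roughly 205 newer-generation accelerators. The four-year figure (about 42,025×) only extrapolates the idealized trend and should not be read as an observation about any specific model.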

● Industry researchers are now suggesting that governments need to rethink their tax and regulatory approaches as this hardware constraint drives up AI development costs. Smaller companies are particularly vulnerable, facing potential bankruptcy as they struggle to afford cutting-edge accelerators with high-bandwidth memory. Meanwhile, top engineering talent is gravitating toward the few giants that can shoulder these expenses, accelerating both talent drain and market concentration.

● The financial stakes are high. Over roughly the last 20 years, peak compute has risen about 60,000×, while DRAM bandwidth has grown only about 100× and interconnect bandwidth about 30×. As a result, processors increasingly sit idle waiting for data, an inefficiency that is inflating data center costs. Without changes to profit tax incentives or investment rules for domestic chip production, governments could lose revenue as AI companies move their infrastructure spending to regions with more favorable terms.
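
Both halves of that claim can be checked with a little arithmetic. The sketch below assumes per-two-year growth multipliers of about 3.0× for peak compute, 1.6× for DRAM bandwidth and 1.4× for interconnect bandwidth. These are trend figures commonly cited in memory-wall analyses and are not stated in this article. It compounds them over ten two-year periods, then applies a simple roofline model to a hypothetical accelerator (100 TFLOP/s peak, 2 TB/s bandwidth) to show why memory-bound work leaves compute idle.

```python
# Sketch under stated assumptions: growth rates and hardware numbers are illustrative.

PERIODS = 10  # about 20 years, in two-year steps

# Assumed per-two-year multipliers (commonly cited trend figures, not taken from this article).
GROWTH_PER_PERIOD = {
    "peak compute": 3.0,
    "DRAM bandwidth": 1.6,
    "interconnect bandwidth": 1.4,
}


def total_growth(rate_per_period: float, periods: int = PERIODS) -> float:
    """Compound a per-period multiplier over `periods` periods."""
    return rate_per_period ** periods


def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float, intensity: float) -> float:
    """Roofline bound: throughput is capped either by compute or by memory traffic.

    `intensity` is arithmetic intensity: FLOPs performed per byte moved from memory.
    """
    return min(peak_flops, bandwidth_bytes_per_s * intensity)


# Hypothetical accelerator: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
PEAK_FLOPS = 100e12
BANDWIDTH = 2e12

if __name__ == "__main__":
    for name, rate in GROWTH_PER_PERIOD.items():
        print(f"{name}: ~{total_growth(rate):,.0f}x over {2 * PERIODS} years")

    # A 16-bit matrix-vector product does ~2 FLOPs (multiply + add) per 2-byte weight read,
    # i.e. about 1 FLOP per byte, far below the ridge point PEAK_FLOPS / BANDWIDTH = 50.
    achieved = attainable_flops(PEAK_FLOPS, BANDWIDTH, intensity=1.0)
    print(f"utilization: {achieved / PEAK_FLOPS:.0%}")
```

Under these assumptions the compounding lands close to the quoted figures (about 59,049×, 110× and 29×), and the hypothetical accelerator runs a memory-bound matrix-vector product at only about 2% of its peak. The roofline model is deliberately simple: it ignores caches, overlap of compute with data movement, and batching, which raises arithmetic intensity.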

● The bandwidth crisis also has broader economic implications. AI infrastructure is becoming more capital-intensive while requiring less human labor. Training a model typically needs 3–4 times more memory than its parameters alone occupy, and KV-cache operations account for a growing share of workload cost, so spending shifts toward hardware rather than staff, and AI companies can generate high profits with relatively small teams. This shift threatens traditional payroll-based tax systems and could reshape how governments collect revenue from the tech sector.
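
To make these memory claims concrete, here is a back-of-the-envelope estimator. It takes the 3–4× training multiplier from the text at face value and applies the standard KV-cache size formula (one key and one value vector per layer, per KV head, per token). The model configuration (7 billion parameters, 32 layers, 32 KV heads, head dimension 128, 16-bit values) is hypothetical and chosen only for round numbers; real training footprints depend heavily on optimizer state, precision and activation checkpointing.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical decoder-only transformer; all values are illustrative."""
    n_params: int
    n_layers: int
    n_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # 16-bit weights and cache entries


def weight_bytes(cfg: ModelConfig) -> int:
    """Memory occupied by the parameters alone."""
    return cfg.n_params * cfg.bytes_per_value


def training_memory_range(cfg: ModelConfig, low: float = 3.0, high: float = 4.0) -> tuple[float, float]:
    """Training footprint using the 3-4x rule of thumb quoted in the text."""
    w = weight_bytes(cfg)
    return low * w, high * w


def kv_cache_bytes(cfg: ModelConfig, seq_len: int, batch_size: int) -> int:
    """KV-cache size: a key and a value vector per layer, per KV head, per token."""
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * seq_len * batch_size * cfg.bytes_per_value


if __name__ == "__main__":
    cfg = ModelConfig(n_params=7_000_000_000, n_layers=32, n_kv_heads=32, head_dim=128)
    low, high = training_memory_range(cfg)
    print(f"weights: {weight_bytes(cfg) / 1e9:.0f} GB, training: {low / 1e9:.0f}-{high / 1e9:.0f} GB")
    for batch in (1, 8):
        print(f"KV cache, 4096 tokens, batch {batch}: {kv_cache_bytes(cfg, 4096, batch) / GIB:.0f} GiB")
```

Because the cache grows linearly with both sequence length and batch size, serving many long requests can push KV-cache memory past the size of the weights themselves (here, 16 GiB for a batch of 8 versus 14 GB of weights). Each decoding step also has to read that cache back from memory, which is why it weighs on bandwidth as well as capacity.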

Saad Ullah