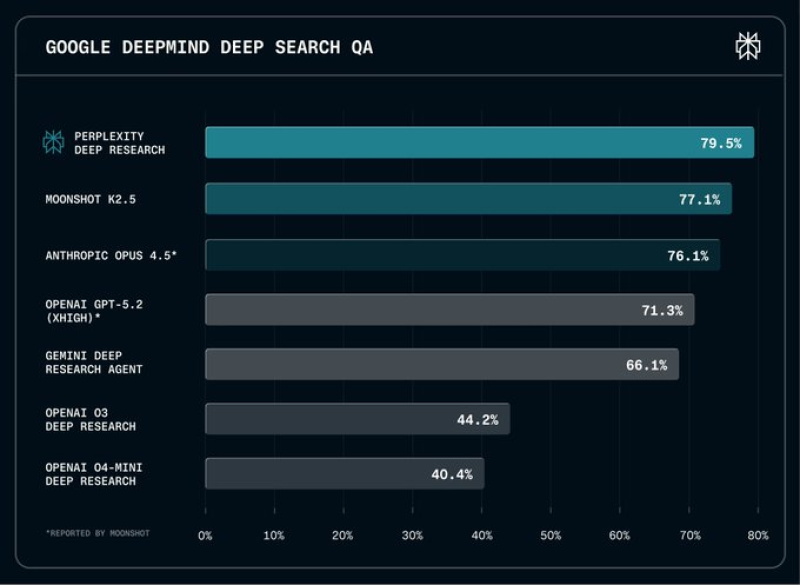

⬤ Perplexity just dropped an upgraded version of its Deep Research system and simultaneously introduced DRACO, a new benchmark designed to test how well AI handles actual research tasks. The advanced mode runs everything through Opus 4.5 and is rolling out to Max users first, with Pro users getting access within days. Right out of the gate, Perplexity Deep Research hit a 79.5 percent accuracy score on the benchmark.

⬤ DRACO evaluates three core capabilities: accuracy, completeness, and objectivity. The benchmark pulls from 100 carefully selected tasks covering academic research, finance, law, medicine, and technology. The competitive landscape became clear quickly. Moonshot K2.5 landed at 77.1 percent, while Anthropic's Opus 4.5 reached 76.1 percent. OpenAI's GPT 5.2 High scored 71.3 percent. Google's Gemini Deep Research Agent managed 66.1 percent, and OpenAI's O3 Deep Research and O4 Mini Deep Research trailed at 44.2 percent and 40.4 percent respectively.

⬤ The advanced research mode routes every query through Opus 4.5, which Perplexity says improves performance on complex reasoning problems. "We designed DRACO to measure real-world research capability across domains rather than testing narrow performance windows," the company noted in their release. Max subscribers get first access with higher usage caps, then availability expands to Pro users.

⬤ These results show the AI research race heating up fast, with major players from Google, OpenAI, and Anthropic all competing for dominance. The standardized testing reveals where each system stands on multi-domain reasoning as companies work to improve reliability and research accuracy across the board.

Marina Lyubimova

Marina Lyubimova

Marina Lyubimova

Marina Lyubimova