⬤ OpenAI's recent benchmark results have sparked conversation after performance data surfaced online showing a steep drop in the cost of AI reasoning. GPT-5.1 (Thinking High) now costs roughly 300× less per task than o3-preview (Low) while scoring only slightly lower on the ARC-AGI-1 reasoning test. Sam Altman responded to the findings, saying he has "consistently underestimated" how quickly price-per-intelligence drops and calling a 300× improvement in one year "nuts."

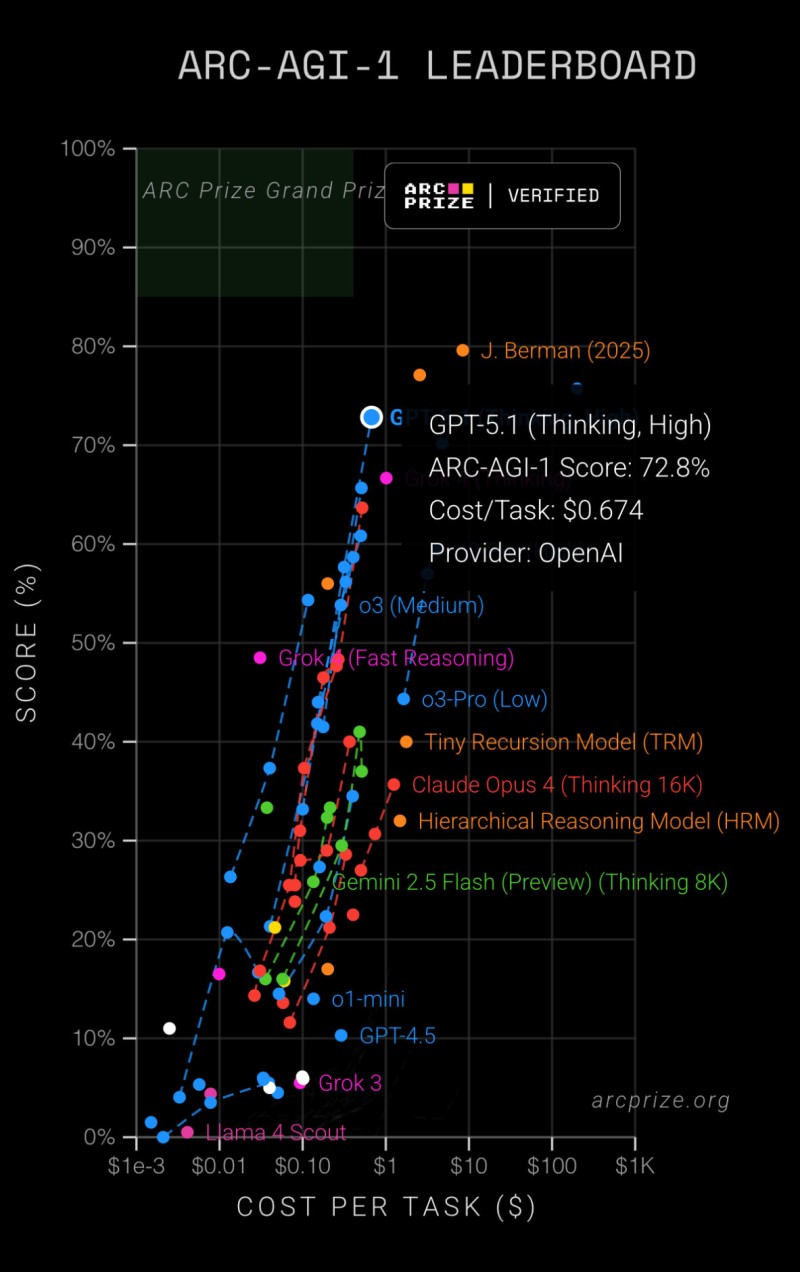

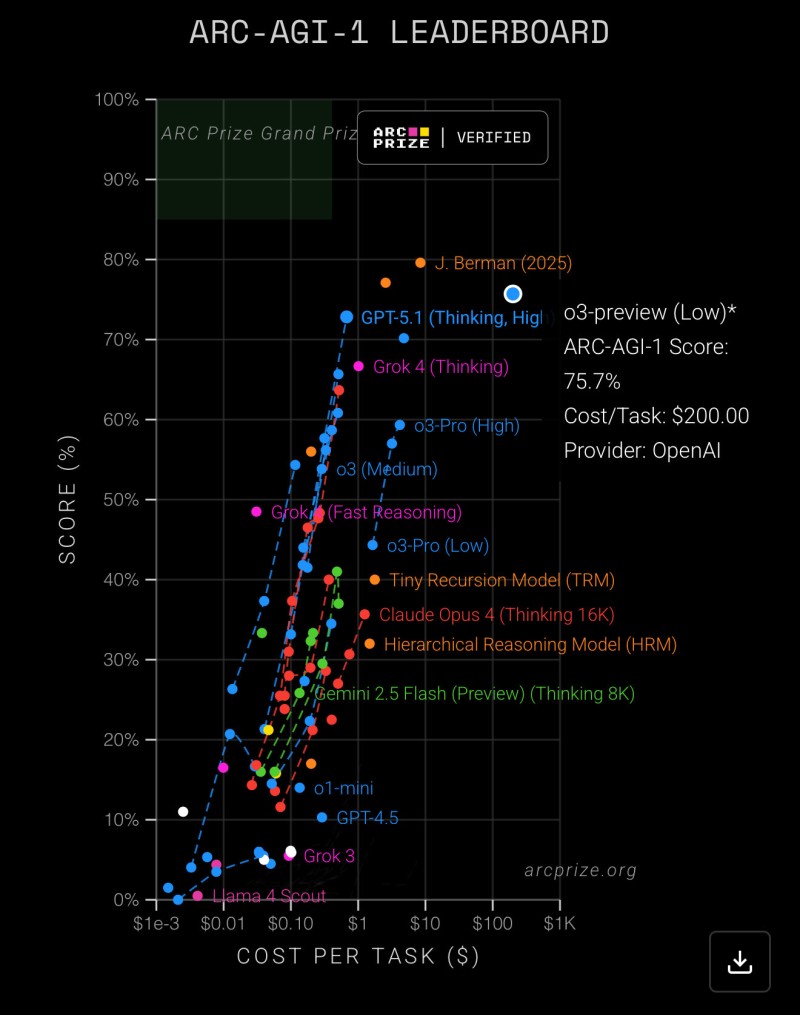

⬤ The ARC-AGI-1 leaderboard data backs this up. GPT-5.1 scored 72.8% at $0.674 per task, while o3-preview (Low) scored 75.7% at $200 per task. The performance gap is 2.9 percentage points, while the cost gap is roughly 300×. Critics who have complained about expensive models are missing how rapidly AI economics are shifting: at close to the same score, this level of reasoning became roughly 300× cheaper in twelve months.
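
For readers who want to check the arithmetic, here is a minimal Python sketch that recomputes the cost ratio and score gap from the two leaderboard figures quoted above. The dictionary layout and the function names `cost_ratio` and `score_gap` are illustrative choices, not part of any official ARC-AGI tooling.

```python
# Scores and per-task costs as quoted in this article from the ARC-AGI-1 leaderboard.
# The data structure and helper names below are illustrative assumptions.
results = {
    "GPT-5.1 (Thinking High)": {"score_pct": 72.8, "cost_per_task_usd": 0.674},
    "o3-preview (Low)": {"score_pct": 75.7, "cost_per_task_usd": 200.0},
}


def cost_ratio(expensive: dict, cheap: dict) -> float:
    """How many times more the expensive model costs per task."""
    return expensive["cost_per_task_usd"] / cheap["cost_per_task_usd"]


def score_gap(higher: dict, lower: dict) -> float:
    """Difference in benchmark score, in percentage points."""
    return higher["score_pct"] - lower["score_pct"]


new = results["GPT-5.1 (Thinking High)"]
old = results["o3-preview (Low)"]

ratio = cost_ratio(old, new)
gap = score_gap(old, new)

print(f"Cost ratio: {ratio:.0f}x")                # Cost ratio: 297x
print(f"Score gap: {gap:.1f} percentage points")  # Score gap: 2.9 percentage points
```

The exact ratio works out to just under 297×, which is where the rounded "300×" headline figure comes from.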

⬤ The benchmark chart positions both OpenAI models alongside other reasoning systems such as Grok, Claude Opus, Tiny Recursion Model, and Gemini 2.5 Flash, showing that efficiency gains are not limited to one lab. GPT-5.1 sits in the sweet spot of high accuracy and low cost, marking a steep jump in capability-per-dollar. Altman's reaction suggests that even people building these models didn't anticipate such a rapid cost drop in a single development cycle.

⬤ This sharp cost decline signals a fundamental shift in AI development economics. When reasoning performance becomes drastically cheaper to deploy, adoption can scale faster across automation, research, consumer tools, and complex decision systems. A 300× reduction could completely reshape how organizations budget for AI, plan deployments, and think about long-term strategy across the ecosystem.

Eseandre Mordi
