⬤ Researchers at Hefei University of Technology have tackled a major problem in AI reasoning by teaching models to rely on their own internal "sense" of truth while working through complex problems. The approach lets a model dynamically choose the most reliable path forward at each step of its reasoning, which the researchers present as a significant improvement over current methods.

⬤ The work targets one of AI's biggest weaknesses: losing accuracy, or going off track entirely, during multi-step reasoning. Traditional approaches such as Self-Consistency, which samples several complete reasoning paths and keeps the most common final answer, often struggle to stay precise across many reasoning stages. The new technique instead draws on signals from the model's own internal states to stay on course. In the researchers' tests, it consistently outperformed conventional methods on mathematical reasoning, symbolic logic, and everyday problem-solving tasks.
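For readers unfamiliar with the baseline, Self-Consistency samples several full reasoning paths and returns whichever final answer appears most often. The sketch below illustrates that baseline only, not the Hefei method. `sample_path` is a hypothetical stand-in for one sampled model generation, and the canned paths are toy data.

```python
from collections import Counter
import itertools
from typing import Callable, List, Tuple

# A reasoning path is modeled as (intermediate steps, final answer).
Path = Tuple[List[str], str]


def self_consistency(sample_path: Callable[[], Path], n_samples: int = 5) -> str:
    """Sample several complete reasoning paths and return the most common final answer.

    `sample_path` is a hypothetical stand-in for one sampled chain-of-thought
    generation from a language model (e.g. decoding at temperature > 0).
    """
    answers = [sample_path()[1] for _ in range(n_samples)]
    # Majority vote over final answers only: intermediate steps are never checked,
    # so a path with a wrong step still counts if its final answer matches.
    answer, _count = Counter(answers).most_common(1)[0]
    return answer


# Toy usage: a canned "sampler" that cycles through three pre-written paths.
canned = itertools.cycle([
    (["3 * 4 = 12", "12 + 5 = 17"], "17"),
    (["3 * 4 = 12", "12 + 5 = 16"], "16"),  # arithmetic slip in the last step
    (["3 * 4 = 12", "12 + 5 = 17"], "17"),
])
print(self_consistency(lambda: next(canned), n_samples=5))  # → 17
```

The vote happens only after every path is finished, so nothing in this baseline corrects a reasoning path while it is being generated.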

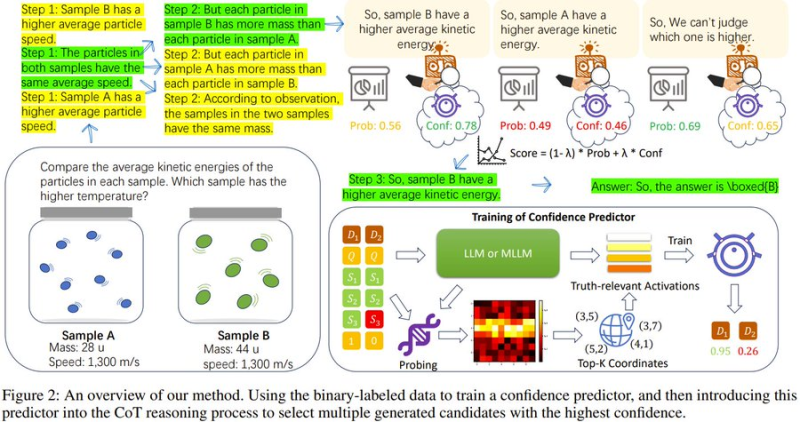

According to the researchers' findings, this model of dynamic decision-making, grounded in an internal sense of truth, allows AI to navigate complex decision trees more effectively.

⬤ What makes the approach particularly powerful is that it helps the model avoid the errors that accumulate when reasoning is poorly guided. The method works like an internal compass, keeping the model anchored to logical truth even in complicated, branching problems.
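The article does not spell out the researchers' algorithm, so the following is only a minimal sketch of the general idea of step-level, score-guided reasoning, under stated assumptions. At each step a hypothetical `propose_steps` function offers candidate next steps. A hypothetical `truth_score` function rates each candidate and stands in for whatever internal-state signal the real method uses (a probe over hidden activations is one possibility, but that is an assumption). The highest-rated step is then kept greedily. Unlike Self-Consistency, which votes once full paths are finished, this choice is made at every step. The toy scorer here simply checks arithmetic.

```python
from typing import Callable, List


def guided_reasoning(
    propose_steps: Callable[[List[str]], List[str]],
    truth_score: Callable[[List[str], str], float],
    max_steps: int = 10,
    is_final: Callable[[str], bool] = lambda s: s.startswith("ANSWER"),
) -> List[str]:
    """Build a reasoning path one step at a time, keeping the best-scored candidate.

    Both callables are hypothetical: `propose_steps` stands in for a model
    proposing next steps, `truth_score` for an internal "sense of truth".
    """
    path: List[str] = []
    for _ in range(max_steps):
        candidates = propose_steps(path)
        if not candidates:
            break
        # Greedy choice: keep the candidate the truth signal rates highest.
        best = max(candidates, key=lambda step: truth_score(path, step))
        path.append(best)
        if is_final(best):
            break
    return path


# Toy candidates per step; each step offers one correct and one wrong option.
CANDIDATES = [
    ["3 * 4 = 12", "3 * 4 = 7"],
    ["12 + 5 = 16", "12 + 5 = 17"],
    ["ANSWER 16", "ANSWER 17"],
]


def toy_propose_steps(path: List[str]) -> List[str]:
    return CANDIDATES[len(path)] if len(path) < len(CANDIDATES) else []


def toy_truth_score(path: List[str], step: str) -> float:
    # Stand-in scorer: 1.0 if the step is arithmetically consistent, else 0.0.
    if step.startswith("ANSWER"):
        return 1.0 if path and step.split()[-1] == path[-1].split()[-1] else 0.0
    lhs, rhs = step.split("=")
    a, op, b = lhs.split()
    value = int(a) * int(b) if op == "*" else int(a) + int(b)
    return 1.0 if value == int(rhs) else 0.0


print(guided_reasoning(toy_propose_steps, toy_truth_score))
# → ['3 * 4 = 12', '12 + 5 = 17', 'ANSWER 17']
```

Greedy selection is the simplest option. A beam or tree search that keeps several high-scoring partial paths is an equally plausible variant, and nothing here should be read as the published implementation.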

⬤ Beyond academic labs, this could change how industries use AI for critical decisions. Financial analysis, medical diagnostics, and other fields that demand precise logic could gain more reliable AI-driven tools. As AI reasoning becomes more trustworthy and adaptable, these models may not only perform well but also stay within the bounds of logic and truth while doing so.

Saad Ullah