⬤ A set of AI techniques is drawing attention as essential tooling for advanced models heading into 2025. They span numerical precision, quantization, attention mechanics, and multimodal design, and all point toward smarter resource management rather than simply bigger models.

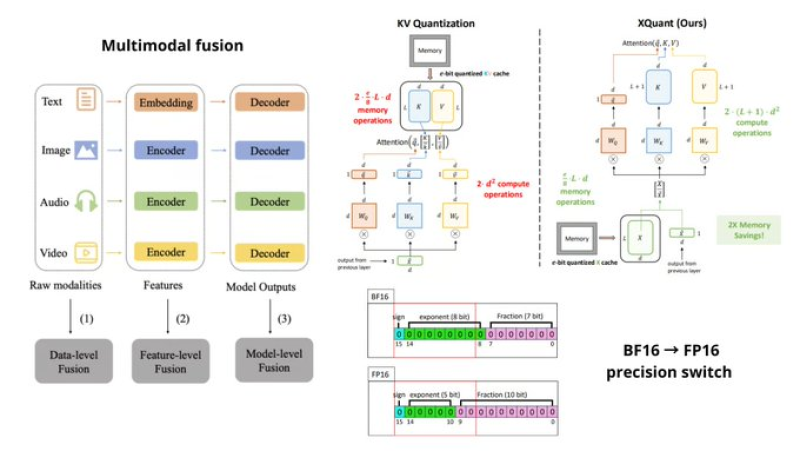

⬤ Precision management sits at the heart of this shift. Switching between BF16 and FP16 lets engineers balance stability with speed depending on whether they're training or running inference: BF16 keeps the wide exponent range of FP32 but has fewer mantissa bits, while FP16 resolves finer differences over a much narrower range. Quantization methods like XQuant and XQuant-CL tackle attention key-value storage head-on, cutting memory use while keeping model behavior largely intact. KV quantization stores the cached keys and values at lower bit widths, shrinking cache requirements, which is crucial as context windows and model sizes keep climbing.
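
To make the two trade-offs above concrete, here is a minimal sketch using only the Python standard library. The first half round-trips numbers through FP16 and an emulated BF16 to show range versus precision. The second half applies plain symmetric int8 quantization to a single key vector. All function names are hypothetical, and the quantizer is a generic illustration, not the XQuant or XQuant-CL algorithm, whose details are not described here.

```python
import struct


def to_fp16(x):
    """Round-trip x through IEEE 754 half precision (5 exponent, 10 fraction bits)."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:  # struct refuses values beyond the FP16 range
        return float("inf") if x > 0 else float("-inf")


def to_bf16(x):
    """Round-trip x through an emulated bfloat16 (8 exponent, 7 fraction bits).

    Keeps the top 16 bits of the float32 encoding with round-to-nearest-even.
    Simplification: NaN and values near the float32 limit are not handled.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)   # round to nearest, ties to even
    bits = (bits >> 16) << 16             # drop the low 16 bits
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]


def quantize_int8(vec):
    """Symmetric per-vector int8 quantization: returns (integer codes, scale)."""
    scale = max(abs(v) for v in vec) / 127 or 1.0  # fall back to 1.0 for all-zero input
    return [round(v / scale) for v in vec], scale


def dequantize_int8(codes, scale):
    """Approximate reconstruction of the original values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    # Range: 70000 overflows FP16 but survives in BF16 (coarsely).
    print(to_fp16(70000.0), to_bf16(70000.0))   # inf 70144.0
    # Precision: FP16 keeps a small offset from 1.0 that BF16 rounds away.
    print(to_fp16(1.001), to_bf16(1.001))       # 1.0009765625 1.0

    key = [0.5, -1.27, 0.03, 1.0]               # toy cached key vector
    codes, scale = quantize_int8(key)
    print(codes, dequantize_int8(codes, scale))
```

Each int8 code takes one byte instead of the two bytes of an FP16 value, so the cache for this vector roughly halves, at the cost of a reconstruction error of at most half the scale per element. Real KV quantizers typically use finer-grained scales and lower bit widths than this sketch.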

⬤ Structural innovation drives the other major trend. Multimodal fusion is moving past basic feature stacking toward modular frameworks. Mixture of States lets models blend text, image, audio, and video representations flexibly. Mixture-of-Recursions reuses computational paths across layers for better efficiency, while Causal Attention with Lookahead Keys adjusts attention flow to draw on limited future context without breaking causal rules.
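
The idea of reusing a computational path can be sketched with a toy example: one block, with one set of parameters, is applied a different number of times to each token. This is only an illustration of the weight-sharing principle. The names `shared_block` and `recursive_forward` are hypothetical, the block is a trivial affine map rather than a transformer layer, and the per-token depths are hand-picked here, whereas an actual system would presumably decide them with a learned router.

```python
def shared_block(h, weight=0.5, bias=1.0):
    """One set of parameters reused at every recursion step (toy affine map)."""
    return weight * h + bias


def recursive_forward(hidden, depths):
    """Apply the same shared block depths[i] times to hidden[i].

    hidden: one scalar "hidden state" per token (toy stand-in for a vector).
    depths: recursion depth per token; hand-picked here, an assumption.
    """
    if len(hidden) != len(depths):
        raise ValueError("hidden and depths must have the same length")
    outputs = []
    for h, depth in zip(hidden, depths):
        for _ in range(depth):
            h = shared_block(h)  # same weights on every pass
        outputs.append(h)
    return outputs


if __name__ == "__main__":
    # Three tokens start from the same state but recurse 1, 2, and 3 times.
    print(recursive_forward([4.0, 4.0, 4.0], [1, 2, 3]))  # [3.0, 2.5, 2.25]
```

In this sketch the three tokens cost six block applications in total instead of the nine needed if every token ran to the maximum depth, and the parameter count stays that of a single block.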

⬤ This matters because AI engineering priorities are shifting. Instead of only scaling up, researchers are optimizing precision handling, memory footprint, and structural composition. As multimodal systems and long-context models become standard, techniques such as precision switching, advanced quantization, and modular fusion are shaping the next generation of AI through better scalability, efficiency, and real-world deployability.

Eseandre Mordi
