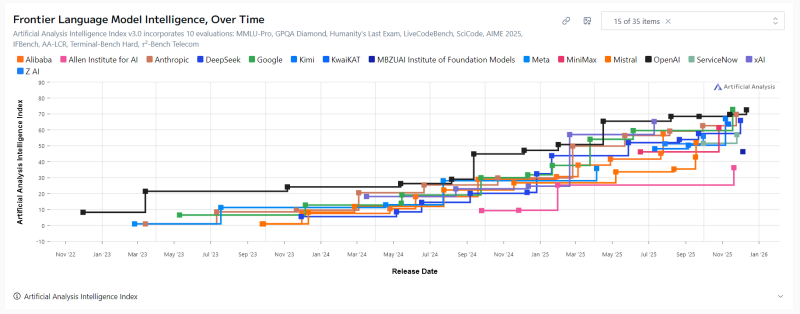

⬤ OpenAI has solidified its position at the top of frontier language model intelligence in 2025, according to new data from Artificial Analysis, which tracks performance across ten advanced benchmarks, including MMLU-Pro, GPQA Diamond, LiveCodeBench, and Humanity's Last Exam. The analysis indicates that OpenAI's hold on the lead has actually tightened this year, even as models from other labs catch up in basic pre-training capabilities.

⬤ The data shows steady improvement across the AI ecosystem from late 2023 through 2025, with major players such as Google, Anthropic, Meta, and xAI all posting higher aggregate scores. OpenAI's models, however, have consistently stayed ahead of the pack, especially from mid-2024 onward. By late 2025, OpenAI models occupy the top range of the intelligence index, with stronger combined results in reasoning, coding, and scientific problem-solving. The gap between the leading models has narrowed considerably, but OpenAI still maintains its edge.

⬤ This convergence is happening because the top labs now use similar pre-training approaches. The real competitive advantage has shifted to post-training techniques, particularly reinforcement learning. GPT-5.2 reportedly has strengths that don't show up in typical demos but become clear in deeper evaluations. The steady, incremental gains in OpenAI's benchmark performance suggest progress driven more by training strategy than by simply building bigger models.

⬤ The industry is entering a new phase in which longer-horizon, agent-like reasoning matters most. Future AI leadership will likely depend less on how many parameters a model has and more on how well it plans, reasons, and acts over complex, extended tasks. In other words, the next wave of AI advancement will be shaped by training methodology and behavioral capabilities rather than raw computational scale.

Usman Salis