⬤ HSBC Global Investment Research just dropped some eye-opening numbers on what it will actually cost OpenAI to keep scaling its AI operations. The bank models OpenAI as basically a massive compute utility that needs to rent around 36 gigawatts of cloud computing capacity from Microsoft and Amazon. Even if everything goes right (subscription growth, ad revenue, the whole nine yards), there's still a funding gap of roughly $207 billion that has to be filled to keep the infrastructure running.

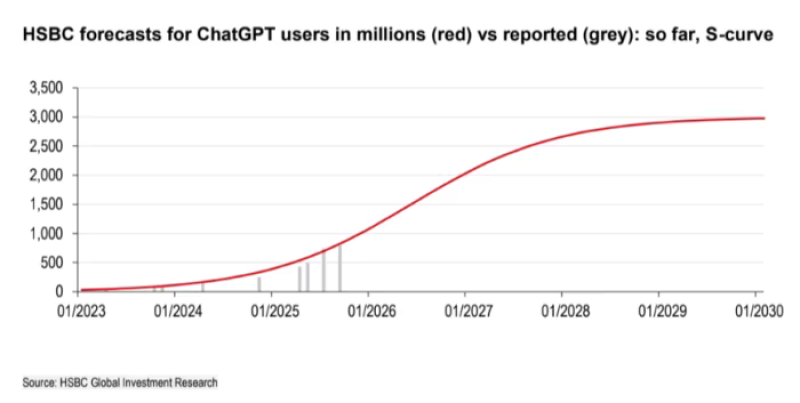

⬤ The really staggering part? Total data-center commitments could hit $1.8 trillion down the road. That's what you need when you're training and running massive AI models at scale. HSBC's chart shows ChatGPT usage following a sharp S-curve, potentially reaching 3 billion users by 2030. But here's the catch: even with that kind of adoption, the economics are brutal. You're looking at enormous upfront costs for compute power that dwarf anything we see in traditional software.

⬤ Competition is making things worse too. Every major AI company is racing to expand capacity, which means OpenAI's spending profile looks more like a utility company's than a tech startup's. Despite these massive numbers, HSBC is actually bullish on AI overall. Their thinking? If AI delivers even small productivity gains across the global economy, today's "unreasonable" spending levels might make sense in the long run.

⬤ What this really shows is the growing disconnect between how fast people are adopting AI and what it costs to support that adoption. The $207 billion gap isn't just OpenAI's problem; it's a signal of what's coming for the entire AI industry. How companies tackle these infrastructure costs will likely shape everything from cloud pricing to chip demand over the next decade.

Saad Ullah