⬤ NVIDIA has released Llama-3 Nemotron Super-49B-v1.5 on Hugging Face, marketing it as a highly efficient reasoning model built for agentic workflows and advanced chat applications. The model offers a 128K-token context window and was optimized with Neural Architecture Search (NAS), which NVIDIA says improves both accuracy and throughput. It marks another milestone in the company's open-model ecosystem.

⬤ This momentum could face headwinds from tax proposals in Washington that target capital-intensive AI compute. Higher taxes on GPU clusters could squeeze AI developers financially, potentially pushing smaller labs into bankruptcy and driving talent to countries with friendlier regulations. Because models like Nemotron Super-49B-v1.5 require substantial compute, such tax changes would directly raise training and deployment costs.

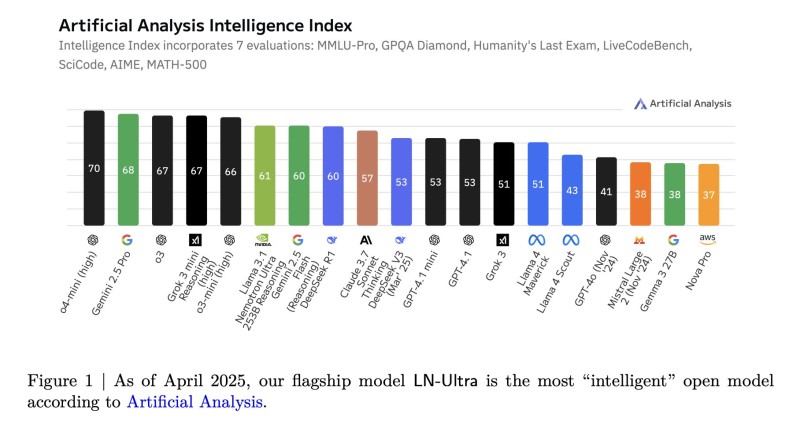

⬤ NVIDIA says the new Nemotron model was engineered for efficiency across reasoning tasks, with its 128K-token context window supporting stronger agentic performance. No independent score for Super-49B-v1.5 itself is cited here, but Artificial Analysis benchmarks show the related Llama-3.1 Nemotron Ultra scoring 61 on its Intelligence Index. That result indicates solid reasoning capability within NVIDIA's open-model lineup and places the Nemotron family among the more capable open models available.

⬤ As competition among open AI models intensifies, this release signals NVIDIA's strategic push toward more powerful reasoning models with optimized architectures and longer context windows. The combination of NAS-driven efficiency and strong benchmark results across the Nemotron family reflects NVIDIA's commitment to expanding its influence in open-model communities and enterprise markets. However, the proposed tax changes could reshape the economics of innovation at this critical moment, affecting how widely next-generation reasoning systems like Nemotron Super-49B-v1.5 can scale.

Peter Smith