The AI landscape is experiencing a notable shift in market dynamics. Recent data from Vercel's AI Gateway shows that Anthropic's Claude models account for the majority of token volume routed through the gateway, a significant moment in the evolution of large language model adoption. This milestone reflects changing priorities among developers and enterprises, who increasingly favor efficiency, reliability, and practical performance over raw model size. Because the data comes from actual production traffic, it shows which models teams trust when building customer-facing applications and mission-critical systems.

Claude Takes the Lead

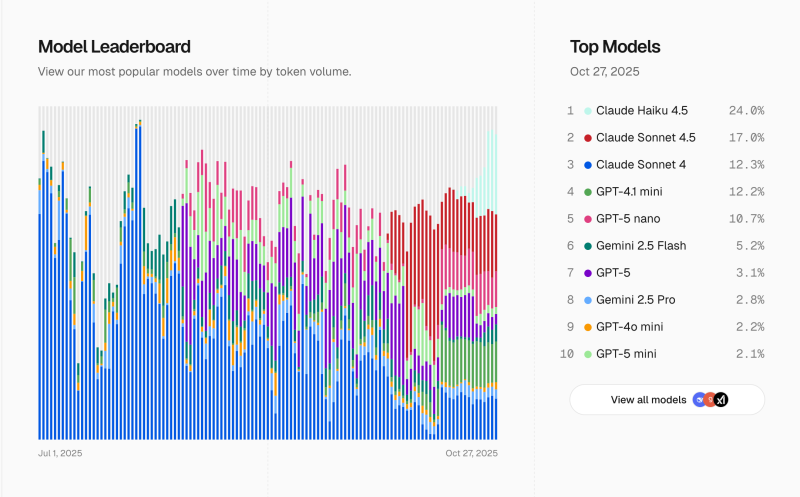

According to the latest leaderboard shared by Vercel CEO Guillermo Rauch, real-world usage data shows which AI models teams are actually running in production environments.

As of late October 2025, the AI model usage breakdown shows:

- Claude Haiku 4.5 leads with 24% of total token volume

- Claude Sonnet 4.5 captures 17%

- Claude Sonnet 4 accounts for 12.3%

- OpenAI's GPT-4.1 mini holds 12.2%

- GPT-5 nano represents 10.7%

- Google's Gemini 2.5 Flash sits at 5.2%

- OpenAI's flagship GPT-5 model has just 3.1%

- Google's Gemini 2.5 Pro trails at 2.8%

Combined, the three Claude models account for 53.3% of the token volume tracked through Vercel's infrastructure, more than half of the total. This dominance is particularly pronounced among agent developers, startups, and enterprise teams building production-ready AI systems. The data suggests that users are gravitating toward smaller, faster models that deliver strong performance at lower operational costs rather than flagship releases.
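The combined figure can be checked by summing the leaderboard entries listed above. The following is a minimal sketch of that arithmetic; the provider grouping and the `share_by_provider` helper are illustrative assumptions and not part of Vercel's published data.

```python
# Shares of total token volume (percent), copied from the list above.
# The provider labels are our own grouping, added for illustration.
SHARES = {
    "Claude Haiku 4.5": ("Anthropic", 24.0),
    "Claude Sonnet 4.5": ("Anthropic", 17.0),
    "Claude Sonnet 4": ("Anthropic", 12.3),
    "GPT-4.1 mini": ("OpenAI", 12.2),
    "GPT-5 nano": ("OpenAI", 10.7),
    "Gemini 2.5 Flash": ("Google", 5.2),
    "GPT-5": ("OpenAI", 3.1),
    "Gemini 2.5 Pro": ("Google", 2.8),
}


def share_by_provider(shares):
    """Sum the listed percentages per provider, rounded to one decimal."""
    totals = {}
    for provider, pct in shares.values():
        totals[provider] = totals.get(provider, 0.0) + pct
    return {provider: round(total, 1) for provider, total in totals.items()}


totals = share_by_provider(SHARES)
listed = round(sum(totals.values()), 1)   # share covered by the eight listed models
unlisted = round(100.0 - listed, 1)       # remainder not broken out in the list

for provider, pct in sorted(totals.items(), key=lambda kv: -kv[1]):
    print(f"{provider}: {pct}%")
print(f"Listed models: {listed}%, other models: {unlisted}%")
```

Under these figures the three Claude entries sum to 53.3%, and the eight listed models together cover 87.3% of token volume, so the remaining share belongs to models the list does not name.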

What's especially striking is how the smaller Claude Haiku model has emerged as the clear leader, indicating that developers are finding exceptional value in lightweight, efficient models that can handle high-volume workloads without the overhead of larger alternatives. This pattern represents a fundamental shift from the "bigger is better" mentality that dominated AI development in previous years.

What's Driving Claude's Adoption

Anthropic's surge reflects a broader shift in what developers value most. Instead of chasing the biggest models, teams are prioritizing speed, extended context windows, and consistent outputs. Claude's models excel in these areas, offering strong reasoning capabilities alongside longer context lengths and robust safety alignment protocols.

These features make Claude especially attractive for businesses deploying large-scale AI agents and workflow automation systems where reliability matters as much as intelligence. The usage data captures real production environments beyond coding tools, showing how LLMs perform when integrated into customer-facing applications and backend systems where low latency and stable behavior are critical.

The Evolving AI Ecosystem

Claude's rise highlights an important evolution in the market. Enterprises are no longer betting everything on a single AI provider. Instead, they're building multi-model architectures that blend Claude, GPT, and Gemini based on specific use cases and requirements. OpenAI maintains solid performance with its lightweight GPT variants, which remain popular for certain applications. Google's Gemini series continues to find its place in multimodal scenarios that combine text, vision, and code. However, Anthropic appears to have hit the sweet spot between intelligence, dependability, and operational efficiency, giving the company a clear competitive advantage in production deployments.
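A multi-model setup of the kind described above often comes down to a routing table keyed by use case. The sketch below is purely illustrative: the use-case names, the `Route` type, and the `pick_route` helper are hypothetical, and the mapping only loosely mirrors the strengths the article attributes to each provider family. It makes no network calls and is not tied to any provider SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    provider: str  # model family named in the article
    reason: str    # why this family is chosen for the use case


# Hypothetical use-case labels; a real system would define its own.
ROUTES = {
    "agent_workflow": Route("Claude", "reliability for agents and workflow automation"),
    "lightweight_task": Route("GPT", "lightweight variants for simple requests"),
    "multimodal": Route("Gemini", "text, vision, and code in one request"),
}

# Assumed fallback when a use case has no explicit entry.
DEFAULT_ROUTE = ROUTES["agent_workflow"]


def pick_route(use_case: str) -> Route:
    """Return the configured route for a use case, or the default."""
    return ROUTES.get(use_case, DEFAULT_ROUTE)


if __name__ == "__main__":
    for case in ("multimodal", "agent_workflow", "unknown_case"):
        route = pick_route(case)
        print(f"{case} -> {route.provider} ({route.reason})")
```

Keeping the mapping in plain data rather than in branching logic makes it easy to swap providers per use case as pricing, latency, or quality changes.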

Eseandre Mordi
