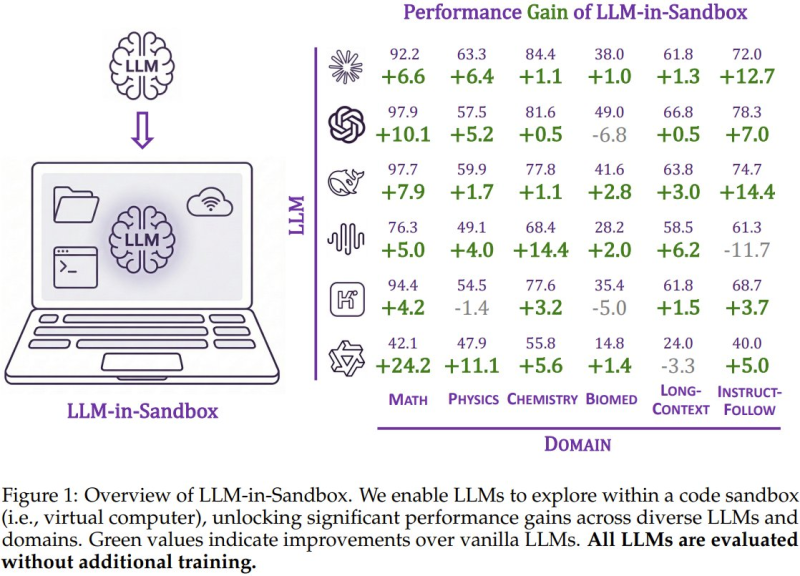

⬤ Microsoft teamed up with Renmin University and Tsinghua University to create LLM-in-Sandbox, a framework that gives language models their own virtual computer to work in. Inside this sandbox, a model can browse files, run code, and use tools much as a person would on an ordinary computer. That lets it tackle problems it was never specifically trained to handle.

⬤ In testing, the approach delivered solid gains across math, physics, chemistry, biomedical reasoning, long-text understanding, and instruction following. Math scores rose by roughly 6.6 to 10.1 points depending on the model, and instruction-following scores by about 12.7 points. Notably, the gains held across different model architectures rather than appearing in just one model.

⬤ Here's the kicker: these improvements required no additional training. Instead of being fine-tuned, the models use coding tools inside their virtual machine to test candidate solutions and refine their answers through trial and error. The research team sees this as a step toward what they call general agentic intelligence, in which the AI plans and carries out a sequence of actions instead of only generating text.

⬤ This work signals a real shift in how AI systems operate. By letting models interact directly with computational environments, LLM-in-Sandbox expands what they can accomplish and opens the door to broader use in automation and software-assisted problem-solving.

Saad Ullah