⬤ ByteDance and Zhejiang University just dropped OpenVE-3M, a large-scale dataset built specifically to train AI systems to edit videos using plain English instructions. The dataset helps AI models grasp and execute detailed editing requests—everything from style adjustments to adding objects—while keeping the original video's structure intact. The team also unveiled OpenVE-Edit, a 5-billion-parameter model that uses this dataset to achieve record-breaking performance in instruction-guided video editing.

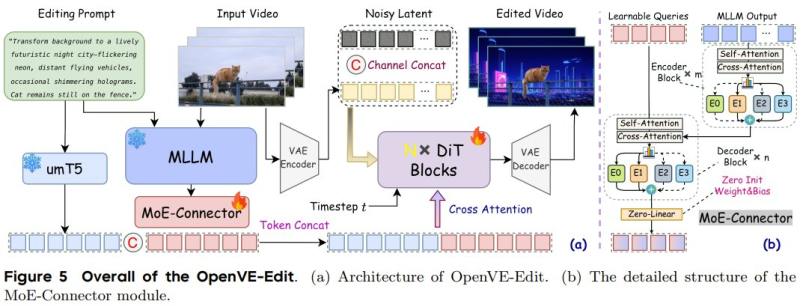

⬤ OpenVE-3M lets models modify videos based entirely on written descriptions. Someone could ask for a futuristic cityscape backdrop while leaving the main subject untouched, and the AI delivers. OpenVE-Edit pulls this off using a mixture-of-experts connector, multimodal language processing, and diffusion-based video generation. The system blends video frames, user instructions, and latent representations to keep edits smooth and consistent throughout every frame.

⬤ The research shows OpenVE-Edit beat previous models on a new human-aligned benchmark that measures instruction following, visual quality, and frame-to-frame stability. This benchmark reflects what people actually expect from AI video editing tools rather than relying on artificial test scores. The work also brings more standardized evaluation methods to instruction-guided video editing, an area that's been exploding but lacking consistent testing frameworks.

⬤ The release of OpenVE-3M and OpenVE-Edit marks another leap forward in multimodal AI capabilities. As models get better at understanding and acting on natural-language prompts, AI's role in creative work keeps expanding. This development points toward AI editing tools becoming dramatically more powerful and user-friendly, which could completely transform how content gets made across entertainment, media, and digital production.

Eseandre Mordi
