Anthropic believes it could achieve "powerful AI" — systems that can independently handle complex engineering tasks — by early 2027. The projection, based on METR data, tracks how quickly AI systems have improved since GPT-3.5 and extends that trend forward.

A recent analysis suggests this timeline might be too ambitious, arguing that true engineering autonomy involves challenges beyond just scaling computing power or improving benchmarks.

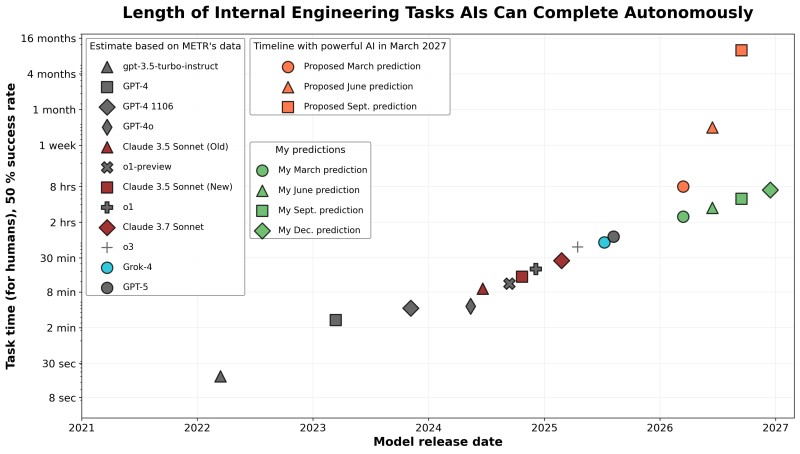

Chart Breakdown: How Fast AI Is Closing the Human Gap

The visualization, shared by AI researcher Ryan Greenblatt, measures AI performance by task length: how long a task takes a human to complete, for tasks an AI system can finish with a 50% success rate.

The trajectory is dramatic:

- GPT-3.5-Turbo-Instruct (2022) — tasks taking humans seconds

- GPT-4 and GPT-4-1106 (2023–2024) — tasks requiring minutes

- Claude 3.5, Claude 3.7, OpenAI's o1 and o3, xAI's Grok-4 — by mid-2025, work equivalent to hours

- GPT-5 (2025) — multi-hour complex tasks, suggesting real autonomy

Anthropic's forecasts go further: by March 2027, tasks requiring a week of human work; by mid-2027, tasks taking humans months. Greenblatt's predictions push these milestones back six to twelve months.
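The arithmetic behind this kind of extrapolation can be sketched in a few lines. This is a minimal illustration, not Anthropic's or METR's actual method: it assumes the time horizon doubles at a fixed interval, and the starting horizon, the 40-hour work week, and the doubling times below are hypothetical values chosen only to show the calculation.

```python
import math


def doublings_needed(current_hours: float, target_hours: float) -> float:
    """Number of doublings required to grow from one time horizon to another."""
    if current_hours <= 0 or target_hours <= 0:
        raise ValueError("time horizons must be positive")
    return math.log2(target_hours / current_hours)


def months_to_reach(current_hours: float, target_hours: float,
                    doubling_months: float) -> float:
    """Months until the target horizon, assuming a constant doubling time."""
    return doublings_needed(current_hours, target_hours) * doubling_months


if __name__ == "__main__":
    # All values below are assumptions for illustration, not figures from the chart.
    start_hours = 2.0        # hypothetical current horizon
    work_week_hours = 40.0   # hypothetical definition of "a week of human work"
    for doubling_months in (4.0, 7.0):  # hypothetical doubling times
        months = months_to_reach(start_hours, work_week_hours, doubling_months)
        print(f"doubling every {doubling_months:.0f} months: "
              f"about {months:.1f} months to a one-week horizon")
```

Because the result scales linearly with the assumed doubling time, small disagreements about that one parameter translate directly into the kind of multi-month gap that separates the two forecasts.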

The Meaning Behind "Powerful AI" in This Context

When Anthropic talks about "powerful AI," it is not claiming it will achieve AGI. Instead, it means models that can perform autonomous internal engineering (writing code, debugging, and maintaining codebases) without human supervision. This represents a shift from today's AI assistants to autonomous agents operating as independent members of an engineering team.

If realized, this capability could create a self-reinforcing loop in which AI systems improve themselves, accelerating development exponentially. That is what makes Greenblatt cautious: recursive self-improvement is unpredictable and difficult to control.

Why Experts Doubt the 2027 Timeline

Greenblatt's skepticism is grounded in practical challenges. While scaling laws suggest steady progress, real bottlenecks exist. Current models struggle with consistency in open-ended scenarios. Long-term projects require persistent memory and state tracking — capabilities still in early development. Training constraints are growing as labs face GPU shortages and infrastructure limits. High-quality training data is running out, forcing reliance on synthetic alternatives with their own complications.

Greenblatt argues that Anthropic's projections assume a smooth exponential curve that doesn't account for the practical friction emerging when pushing beyond current designs.
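The difference between a smooth exponential and one with friction can be illustrated with a toy model. This is only a sketch under stated assumptions, not a model taken from Greenblatt's analysis: the `slowdown` factor, which makes each successive doubling take longer than the previous one, is a hypothetical parameter, as are the function names and sample values.

```python
def months_smooth(n_doublings: int, doubling_months: float) -> float:
    """Total months for n doublings when every doubling takes the same time."""
    if n_doublings < 0:
        raise ValueError("n_doublings must be non-negative")
    return n_doublings * doubling_months


def months_with_friction(n_doublings: int, first_doubling_months: float,
                         slowdown: float) -> float:
    """Total months when each doubling takes `slowdown` times longer than the last.

    slowdown == 1.0 reproduces the smooth exponential; values above 1.0
    stand in for bottlenecks such as compute or data limits (an assumption).
    """
    if n_doublings < 0:
        raise ValueError("n_doublings must be non-negative")
    return sum(first_doubling_months * slowdown ** k for k in range(n_doublings))


if __name__ == "__main__":
    # Hypothetical inputs: 5 doublings, the first taking 4 months.
    n, first = 5, 4.0
    print("smooth:  ", months_smooth(n, first), "months")
    for slowdown in (1.1, 1.25):
        total = months_with_friction(n, first, slowdown)
        print(f"slowdown {slowdown}: {total:.1f} months")
```

Even a modest per-doubling slowdown compounds, so the two curves can agree over the recent past and still diverge noticeably over the horizon the forecast covers.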

Implications: The 2027 "AGI Window"

If Anthropic's forecast holds, the next two years would see AI evolve from assistants into near-autonomous engineers. This would reshape the relationship between AI research, software development, and human labor.

Even optimists acknowledge that governance and safety measures must advance in parallel. Without control frameworks, powerful self-directing AI could create unforeseen coordination and ethical challenges.

Eseandre Mordi
