⬤ Xiaomi just dropped HyperVL, a fresh multimodal model designed to make edge AI work better on phones and other mobile devices. The HyperAI team built it specifically to tackle the two biggest headaches with vision-language models: they eat up too much memory and run too slowly on actual hardware. HyperVL aims to fix both problems while staying competitive on multimodal tasks.

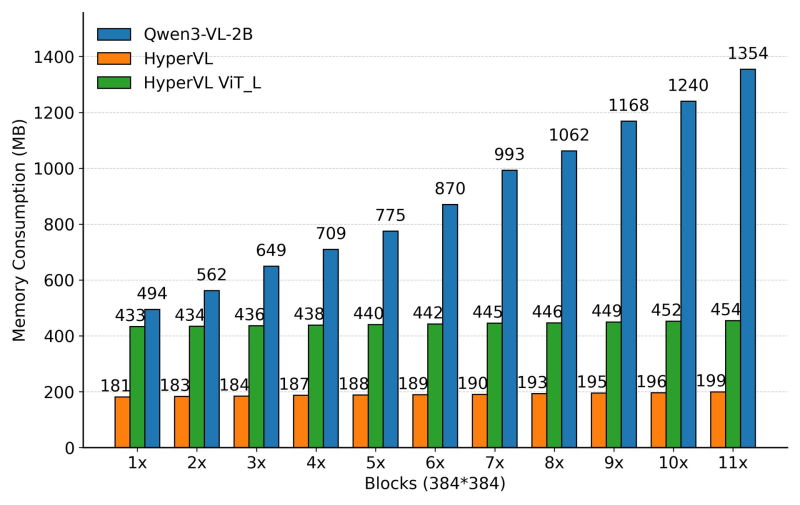

⬤ The data shows HyperVL well ahead of Qwen3-VL-2B on memory efficiency. While the baseline model's memory usage climbs steeply as the number of visual blocks grows, HyperVL stays remarkably steady, never breaking 200 MB even at higher resolutions. In the best-case scenarios, HyperVL reduces peak memory consumption by up to 6.8x, which is a massive improvement for devices with limited resources.

⬤ How does it work? Two key tricks: adaptive visual resolution and dynamic encoder switching. Instead of processing every image at the same resolution regardless of what the task actually needs, HyperVL adjusts the input resolution on the fly. Dynamic encoder switching then lets the system find the sweet spot between accuracy and speed in real time. Together, these tweaks let HyperVL deliver near state-of-the-art results while staying practical for on-device use.
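
To make the idea concrete, here is a minimal Python sketch of how a runtime might pick a resolution and an encoder under a memory budget. It is an illustration only, not Xiaomi's implementation: the encoder profiles, the per-block memory costs, the `needs_fine_detail` flag, and the `choose_config` function are all hypothetical names and numbers invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncoderProfile:
    """Hypothetical memory profile of a visual encoder (not from HyperVL)."""
    name: str
    fixed_mb: float       # assumed constant overhead
    mb_per_block: float   # assumed cost per visual block


# Made-up numbers, chosen only to make the trade-off visible.
LIGHT = EncoderProfile("light", fixed_mb=40.0, mb_per_block=2.0)
HEAVY = EncoderProfile("heavy", fixed_mb=80.0, mb_per_block=6.0)


def estimate_peak_mb(encoder: EncoderProfile, num_blocks: int) -> float:
    """Linear memory estimate: fixed overhead plus a per-block cost."""
    return encoder.fixed_mb + encoder.mb_per_block * num_blocks


def choose_config(needs_fine_detail: bool, budget_mb: float,
                  max_blocks: int = 16) -> tuple[str, int]:
    """Pick (encoder name, number of visual blocks) that fits the budget.

    Adaptive resolution: coarse tasks start from a quarter of the blocks.
    Encoder switching: detailed tasks try the heavy encoder first and
    fall back to the light one before giving up resolution.
    """
    target_blocks = max_blocks if needs_fine_detail else max(1, max_blocks // 4)
    candidates = [HEAVY, LIGHT] if needs_fine_detail else [LIGHT]
    for blocks in range(target_blocks, 0, -1):
        for encoder in candidates:
            if estimate_peak_mb(encoder, blocks) <= budget_mb:
                return encoder.name, blocks
    raise ValueError("memory budget too small for any configuration")


if __name__ == "__main__":
    print(choose_config(needs_fine_detail=False, budget_mb=200.0))
    print(choose_config(needs_fine_detail=True, budget_mb=200.0))
    print(choose_config(needs_fine_detail=True, budget_mb=100.0))
```

In this toy policy, a tight budget pushes a detail-heavy request onto the lighter encoder before any resolution is sacrificed. A real system could just as reasonably make the opposite choice, and would likely decide from the image content rather than a hand-set flag.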

⬤ This launch fits into the bigger picture of companies pushing AI processing onto devices instead of relying on cloud servers. For Xiaomi (1810.HK), getting edge AI right means it can build smarter features into phones, wearables, and IoT devices without the lag or privacy concerns of cloud processing. As memory and speed become make-or-break factors for AI on consumer hardware, models like HyperVL could define who wins in the next generation of smart devices.

Eseandre Mordi
