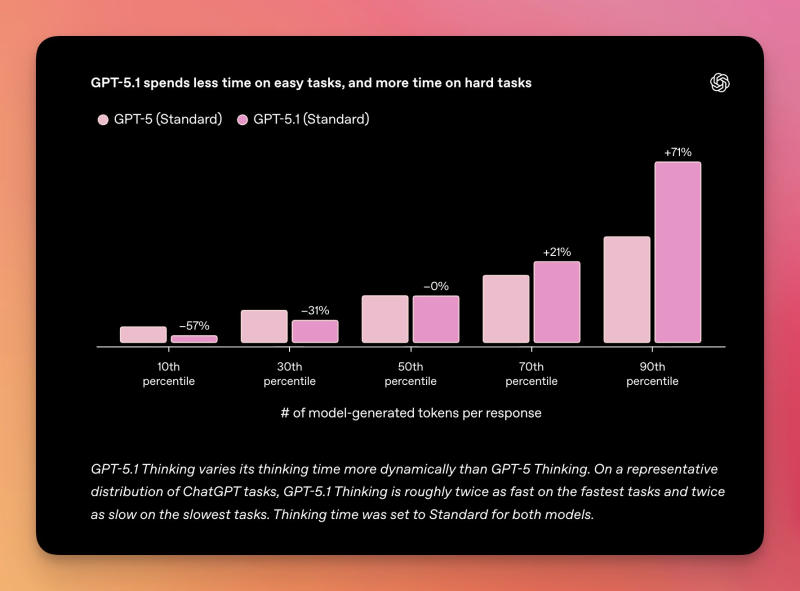

⬤ OpenAI has just rolled out GPT-5.1, and it marks a genuine step forward in how language models handle problems of different difficulty. Unlike older systems that treated every question the same way, this one decides whether a task calls for hard thinking or a quick answer. OpenAI's data shows GPT-5.1 spending 57% less thinking time than GPT-5 on the easiest tasks and 71% more on the toughest ones.

⬤ The benchmark results paint a clear picture of how the model redistributes its effort. On moderately easy tasks at the 30th percentile of difficulty, thinking time drops by 31%. Around the middle of the difficulty range, thinking time stays roughly the same as GPT-5's. On harder problems at the 70th percentile, GPT-5.1 spends 21% more time thinking. OpenAI calls this adaptive reasoning: the model judges how much thought a problem actually needs before diving in.
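Because these figures are relative changes, they are easiest to compare as multipliers on GPT-5's thinking time. The short Python sketch below does that arithmetic. The percentages come from the figures above; the per-tier GPT-5 baseline times are invented placeholders, not OpenAI data, and treating the median tier as exactly 0% is an assumption, since it is only described as "roughly the same".

```python
"""Turn the reported GPT-5.1 vs GPT-5 thinking-time changes into multipliers.

The percentages come from the article; the per-tier GPT-5 baseline times
below are made-up placeholders used only to illustrate the arithmetic.
"""

# Fractional change in thinking time reported for GPT-5.1 relative to GPT-5.
# The median tier is described only as "roughly the same", so 0.0 is an assumption.
REPORTED_CHANGE = {
    "easiest": -0.57,
    "30th percentile": -0.31,
    "median": 0.0,
    "70th percentile": 0.21,
    "hardest": 0.71,
}

# Hypothetical GPT-5 thinking times in seconds (NOT reported figures).
HYPOTHETICAL_GPT5_SECONDS = {
    "easiest": 2.0,
    "30th percentile": 5.0,
    "median": 10.0,
    "70th percentile": 20.0,
    "hardest": 60.0,
}


def multiplier(change: float) -> float:
    """Convert a fractional change (e.g. -0.57 for '57% less') into a time multiplier."""
    return 1.0 + change


def gpt51_seconds(tier: str) -> float:
    """Estimate GPT-5.1 thinking time for a tier by scaling the hypothetical baseline."""
    return HYPOTHETICAL_GPT5_SECONDS[tier] * multiplier(REPORTED_CHANGE[tier])


if __name__ == "__main__":
    for tier, change in REPORTED_CHANGE.items():
        print(
            f"{tier:>16}: x{multiplier(change):.2f}  "
            f"{HYPOTHETICAL_GPT5_SECONDS[tier]:5.1f}s -> {gpt51_seconds(tier):5.1f}s"
        )
    # Ratio of the hardest-tier multiplier to the easiest-tier multiplier.
    spread = multiplier(REPORTED_CHANGE["hardest"]) / multiplier(REPORTED_CHANGE["easiest"])
    print(f"hardest/easiest multiplier ratio: {spread:.2f}")
```

The ratio at the end does not depend on the placeholder baselines: the hardest tier's multiplier (1.71) is roughly four times the easiest tier's (0.43), which is one way to read "redistributes its effort". Relative to GPT-5, time moves from easy problems toward hard ones.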

⬤ This represents a real shift from how earlier models worked. Previous generations mostly reacted to a prompt by predicting what came next, with little regard for how hard the question was. GPT-5.1 can pause, assess a problem's complexity, and adjust its approach accordingly. The numbers back this up: simple requests get handled faster, while complicated ones get the deeper analysis they require.

⬤ Adaptive reasoning changes what we can expect from advanced AI systems. By speeding up routine responses and going deeper on challenging work, GPT-5.1 raises the bar for both efficiency and capability. As AI takes on more demanding applications across industries, models that manage their own reasoning effort could reshape how we think about performance and scalability.

Usman Salis