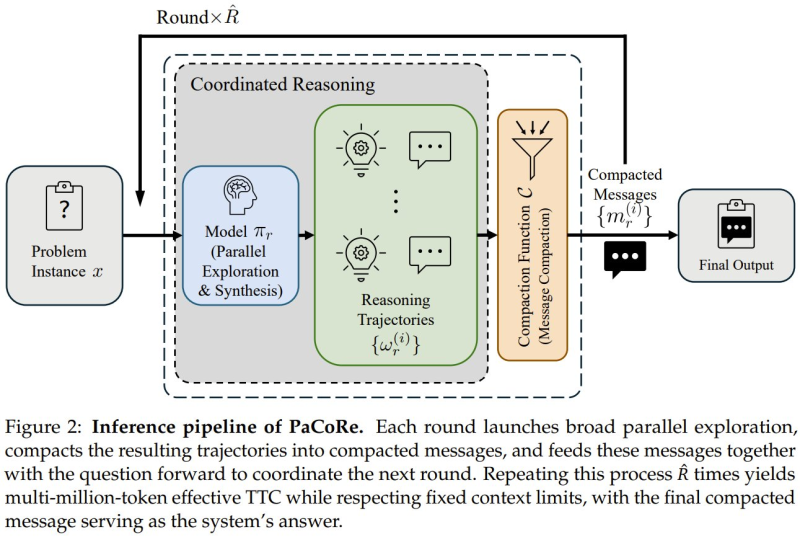

⬤ A new inference framework called PaCoRe (Parallel Coordinated Reasoning) takes a different approach to how AI models tackle complex problems. Rather than working through a problem along a single sequential chain of thought, PaCoRe launches hundreds of parallel reasoning paths in each round, distills their key insights into compact messages, and uses these summaries to steer the next round. This cycle of coordinated exploration and message compression repeats until the model reaches a final answer.
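The round structure can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not PaCoRe's actual implementation: `generate` and `compress` are hypothetical stand-ins for model calls, the toy versions only simulate paths converging on an answer, and the final majority vote is an assumed way of picking the answer.

```python
from collections import Counter
from typing import Callable, List

# Hypothetical interfaces: a real system would back these with LLM calls.
GenerateFn = Callable[[str, List[str], int], str]  # (problem, messages, seed) -> reasoning trace
CompressFn = Callable[[str], str]                  # reasoning trace -> compact message


def parallel_coordinated_loop(problem: str, generate: GenerateFn, compress: CompressFn,
                              num_paths: int, num_rounds: int) -> str:
    """Run rounds of parallel reasoning, passing only compressed messages forward."""
    messages: List[str] = []
    for _ in range(num_rounds):
        # Each path sees the problem plus the previous round's messages, never full traces.
        traces = [generate(problem, messages, seed) for seed in range(num_paths)]
        # Distill every trace into a short message that steers the next round.
        messages = [compress(trace) for trace in traces]
    # Assumed final step (hypothetical): majority vote over the last round's messages.
    return Counter(messages).most_common(1)[0][0]


# --- Toy stand-ins so the sketch runs without a model (purely illustrative) ---
def toy_generate(problem: str, messages: List[str], seed: int) -> str:
    if messages:
        # Guided round: adopt the answer that most messages agree on.
        answer = Counter(m.split("=", 1)[1] for m in messages).most_common(1)[0][0]
    else:
        # First round: unguided paths disagree.
        answer = str(seed % 3)
    # Simulate a long trace of working followed by the answer.
    return "step ... " * 500 + f"answer={answer}"


def toy_compress(trace: str) -> str:
    # Keep only the final answer, discarding the long working.
    return trace[trace.rfind("answer="):]


if __name__ == "__main__":
    print(parallel_coordinated_loop("toy problem", toy_generate, toy_compress,
                                    num_paths=5, num_rounds=3))
```

The key design point the sketch tries to capture is that `generate` only ever receives the compressed `messages`, so the input to each call stays short no matter how long the individual traces are.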

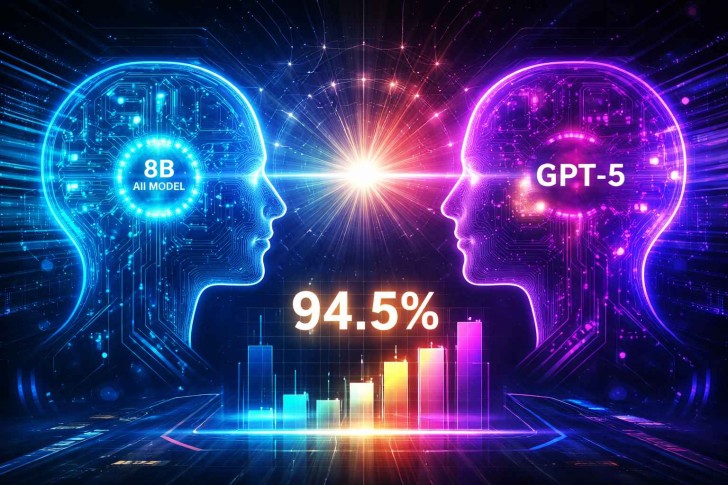

⬤ PaCoRe lets models scale their reasoning to millions of tokens at test time without hitting context window limits. In practice, this gives smaller models access to far more test-time compute than a single sequential chain of thought could fit in one context window. The results are striking: an 8B-parameter model running PaCoRe scored 94.5% on the HMMT 2025 mathematics competition, edging past GPT-5's 93.2% on the same test. The result suggests that how a model reasons can matter as much as its size.

⬤ The key is that PaCoRe compresses each round of parallel reasoning into short guiding messages, avoiding the context bloat that plagues long sequential chains of thought. Because the next round works from these messages rather than from the full reasoning traces, the system can run multiple reasoning cycles without exceeding its context limit, and this yields strong performance on difficult mathematical problems where conventional approaches often struggle.
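A back-of-the-envelope sketch makes the budgeting concrete. All numbers below are hypothetical, chosen for illustration rather than taken from the PaCoRe paper, and the sketch assumes each call in a round sees the problem, every message from the previous round, and its own trace.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    # All values are hypothetical illustration parameters.
    paths_per_round: int   # K: parallel trajectories per round
    rounds: int            # R: number of rounds
    trace_tokens: int      # T: tokens generated by one trajectory
    message_tokens: int    # M: tokens in one compressed message
    problem_tokens: int    # P: tokens in the problem statement


def total_generated(b: Budget) -> int:
    # Every trajectory in every round counts toward test-time compute.
    return b.rounds * b.paths_per_round * b.trace_tokens


def peak_context_per_call(b: Budget) -> int:
    # Assumed: one call holds the problem, all K messages from the previous
    # round, and its own trace, but never the full traces of other paths.
    return b.problem_tokens + b.paths_per_round * b.message_tokens + b.trace_tokens


def sequential_context(b: Budget) -> int:
    # A single chain producing the same number of tokens must keep all of it in context.
    return b.problem_tokens + total_generated(b)


if __name__ == "__main__":
    b = Budget(paths_per_round=128, rounds=4, trace_tokens=16_000,
               message_tokens=200, problem_tokens=200)
    print(total_generated(b))        # 8192000
    print(peak_context_per_call(b))  # 41800
    print(sequential_context(b))     # 8192200
```

Under these assumptions the system generates about 8.2 million tokens in total, yet no single call needs more than about 42,000 tokens of context, whereas one sequential chain producing the same volume would need all 8.2 million tokens in a single window.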

⬤ This matters because it opens a path for advancing AI reasoning that doesn't depend solely on building bigger models or expanding context windows. Scaling test-time compute intelligently could reshape how future models are designed and deployed, especially for tasks that demand precision. As attention shifts toward optimizing what happens at inference time, PaCoRe shows how clever algorithms can unlock unexpected capabilities in smaller, leaner models.

Eseandre Mordi