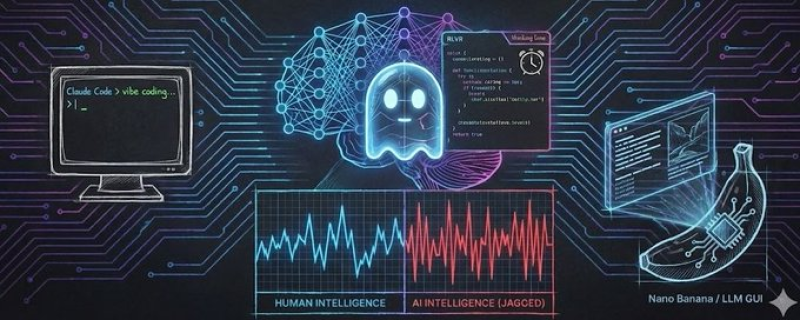

⬤ A 2025 analysis is capturing widespread attention for offering a clearer picture of how large language models actually work. Instead of viewing AI systems as moving toward human-like thinking, the framework describes them as "ghosts" shaped by completely different forces. These aren't biological minds—they're tools optimized through training that rewards pattern matching, problem solving, and measurable performance.

⬤ The core insight centers on what drives capability. Human intelligence developed under survival and social pressures. Large language models train on text prediction, structured problem solving, and benchmark performance. This gap explains why models ace professional exams, generate sophisticated analysis, or crack competition-level math problems, then turn around and hallucinate facts or stumble on straightforward reasoning. Systems like GPT-5, Claude, and Gemini show razor-sharp abilities in verifiable areas while remaining fragile everywhere else.

These systems are not biological minds but artifacts optimized through training objectives that reward imitation, problem solving, and verifiable success.

⬤ This perspective matters for anyone building with AI. Teams that assume uniform intelligence hit walls when deploying models into actual workflows. The better question: does your task live near a verifiable domain where reinforcement learning concentrates its power? If yes, AI can match or beat human performance. If not, you need guardrails and human oversight. Tools like Cursor and Claude Code already design around these uneven boundaries rather than treating AI as one-size-fits-all intelligence.

⬤ The real shift is in how we think about AI progress. Models aren't getting universally smarter across every task. Intelligence here is jagged and domain-specific. That explains the confusion around what AI can and can't do, and gives us a sharper lens for understanding why breakthroughs look dramatic in some spaces and limited in others.

Marina Lyubimova

Marina Lyubimova

Marina Lyubimova

Marina Lyubimova