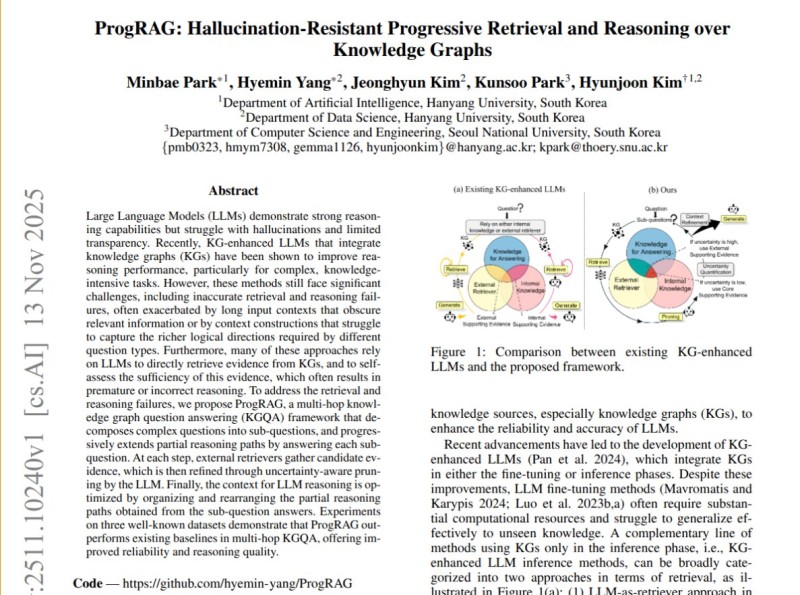

⬤ South Korean researchers have unveiled ProgRAG, a framework that makes large language models more trustworthy when tackling knowledge-graph questions requiring multiple reasoning steps. The system cuts down on hallucinations by building evidence piece by piece, helping the model trace connected facts across several hops. The research paper, titled "ProgRAG: Hallucination-Resistant Progressive Retrieval and Reasoning over Knowledge Graphs," includes diagrams showing how this method stacks up against existing approaches.

⬤ ProgRAG works by breaking complex questions into smaller sub-questions tied to key entities. At each step, it retrieves the relations around the current entity and filters them using relation cues taken directly from the original question. The framework targets a common problem: many knowledge-graph-enhanced LLMs still suffer from inaccurate retrieval and reasoning failures, especially when dealing with lengthy or noisy contexts. ProgRAG addresses this by ranking candidate triples with both text similarity and a graph neural network, keeping only the most relevant evidence.
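To make the filtering step concrete, here is a minimal sketch of ranking candidate triples against the question. It is not ProgRAG's implementation: it uses plain token-overlap (Jaccard) similarity as a stand-in for the text-similarity scorer and leaves out the graph neural network entirely. The function names, the example triples, and the `top_k` parameter are all assumptions made for illustration.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase and split on non-alphanumerics, so 'film.directed_by' -> {film, directed, by}."""
    return {tok for tok in re.split(r"[^a-z0-9]+", text.lower()) if tok}


def jaccard(a: set[str], b: set[str]) -> float:
    """Token-overlap similarity in [0, 1]; 0.0 if either set is empty."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank_triples(question: str, triples: list[tuple[str, str, str]], top_k: int = 2):
    """Score each (head, relation, tail) by how well its relation name matches the question.

    Hypothetical stand-in scorer: a real system could combine an embedding model
    with a GNN score here instead of Jaccard overlap.
    """
    q_tokens = tokenize(question)
    scored = [(jaccard(q_tokens, tokenize(rel)), (head, rel, tail))
              for head, rel, tail in triples]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # highest similarity first
    return [triple for _, triple in scored[:top_k]]


# Illustrative data only; not taken from the paper's datasets.
question = "Who directed the film Inception?"
candidates = [
    ("Inception", "award.nominated_for", "Best Picture"),
    ("Inception", "film.directed_by", "Christopher Nolan"),
    ("Inception", "film.release_year", "2010"),
]
best = rank_triples(question, candidates, top_k=1)
```

With these made-up candidates, the relation `film.directed_by` shares the most tokens with the question, so it is the triple that survives the cut.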

⬤ Before committing to a retrieved hop, ProgRAG checks token-level uncertainty. When confidence drops, it widens its exploration rather than locking into an early path that might be wrong. After mapping out the reasoning paths, the system enumerates their partial prefixes and reorganizes them into an ordered context in which the LLM can verify logical constraints. This keeps long, noisy inputs from accumulating errors and prevents early mistakes from snowballing into incorrect final answers.
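The uncertainty check can be sketched as a simple gate. The article does not say which uncertainty measure ProgRAG uses, so the example below assumes mean Shannon entropy over per-token probability distributions; the threshold and the two beam widths are hypothetical values chosen only to show the control flow.

```python
import math


def token_entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of one token's probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mean_uncertainty(step_distributions: list[list[float]]) -> float:
    """Average entropy across all generated tokens for one hop decision."""
    if not step_distributions:
        return 0.0
    return sum(token_entropy(d) for d in step_distributions) / len(step_distributions)


def choose_beam_width(step_distributions: list[list[float]],
                      base_width: int = 1,
                      expanded_width: int = 3,
                      threshold: float = 0.5) -> int:
    """Keep a narrow search when the model is confident; widen it when it is not.

    All three numeric defaults are assumptions for this sketch.
    """
    if mean_uncertainty(step_distributions) > threshold:
        return expanded_width  # low confidence: explore more candidate hops
    return base_width          # high confidence: commit to the top hop


# Illustrative distributions over three candidate tokens.
confident = [[0.98, 0.01, 0.01]]
uncertain = [[0.40, 0.30, 0.30]]
```

A peaked distribution such as `confident` stays under the assumed threshold and keeps the narrow width, while a flatter one such as `uncertain` triggers the wider search.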

⬤ In tests on three datasets, ProgRAG improved accuracy, including a 10.9% gain on CR-LT. The framework performed especially well on difficult question types such as conjunctions and superlatives. It also runs on smaller models without any fine-tuning. The work is part of ongoing efforts to make LLM reasoning more dependable when structured, multi-hop evidence chains are needed.

Eseandre Mordi
