Artificial intelligence has taken a notable step toward reading the human mind. A new study describes an AI system that translates brain activity into clear, natural-language descriptions, not only of images and videos a person is watching but also of scenes they are simply imagining. The work marks a significant advance for both neuroscience and AI research, extending what machines can infer about human thought.

A study published on November 5, 2025, in Science Advances introduces "mind captioning," a technique that decodes fMRI brain signals into structured text describing what a person is perceiving or imagining. The research, led by Tomoyasu Horikawa at NTT Communication Science Laboratories in Japan, shows that AI can generate surprisingly accurate sentences from neural activity alone.

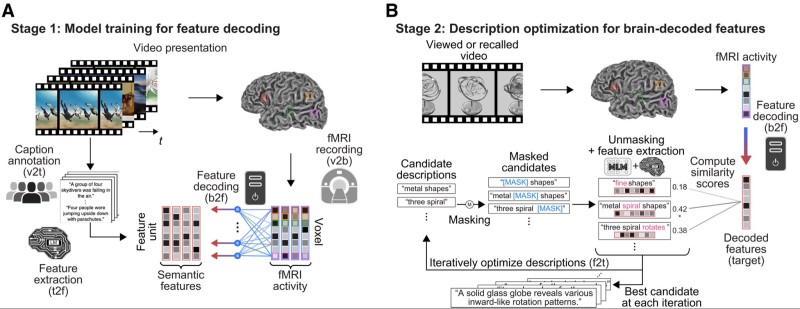

2-Stage Decoding: How the System Works

The system can produce sentences such as "A bird eats a snake in the grass" while someone watches a video, and even when they are only recalling it from memory. This goes well beyond earlier neural-decoding work, which mostly identified individual objects and struggled to capture actions and the relationships between them.

The decoding happens in two stages. First, linear models map fMRI patterns to semantic features derived from DeBERTa-large, a large pretrained language model. These features capture more than the objects in a scene; they also encode how those objects relate to one another. Second, a masked language model (RoBERTa-large) iteratively refines a caption that starts out blank. Over 100 cycles, the system masks words, predicts replacements, scores each candidate by how closely its semantic features match the brain-decoded features, and keeps the better-scoring text. In this way, rough early attempts such as "metal shapes" evolve into coherent descriptions such as "A blacksmith hammers hot metal on an anvil."
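The second-stage refinement is essentially a search that edits a caption until its semantic features match a target vector. The Python sketch below illustrates that idea under heavy simplifying assumptions: a hypothetical unigram-plus-bigram count vector (`features`) stands in for DeBERTa-large features, exhaustive insert/replace/delete proposals over a tiny fixed vocabulary stand in for RoBERTa-large's masked-word predictions, and the `target` vector plays the role of the brain-decoded features from the first stage. It is a toy hill-climbing illustration, not the study's implementation.

```python
import math
from collections import Counter


def features(words):
    # Hypothetical stand-in for DeBERTa-large features: counts of unigrams and
    # bigrams, so word order (and thus "who does what") affects the vector.
    feats = Counter((w,) for w in words)
    feats.update(zip(words, words[1:]))
    return feats


def cosine(a, b):
    # Cosine similarity between sparse count vectors (Counter returns 0 for missing keys).
    dot = sum(v * b[k] for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def evolve_caption(target, vocab, iterations=100):
    """Greedily edit a caption, starting from blank, to maximize similarity to `target`."""
    caption = []
    best = cosine(features(caption), target)
    for _ in range(iterations):
        candidates = []
        for pos in range(len(caption) + 1):  # insert a word at every position
            candidates += [caption[:pos] + [w] + caption[pos:] for w in vocab]
        for pos in range(len(caption)):
            # Replace the word at `pos` (a crude "mask and refill" step) ...
            candidates += [caption[:pos] + [w] + caption[pos + 1:] for w in vocab]
            # ... or delete it.
            candidates.append(caption[:pos] + caption[pos + 1:])
        # Score every candidate against the target and keep the best one.
        cand = max(candidates, key=lambda c: cosine(features(c), target))
        score = cosine(features(cand), target)
        if score <= best:  # no strict improvement: stop early
            break
        caption, best = cand, score
    return " ".join(caption), best


target = features("bird eats snake".split())  # pretend these were decoded from fMRI
caption, score = evolve_caption(target, ["snake", "bird", "grass", "eats"])
print(caption)  # bird eats snake
```

Starting from a blank caption, the loop grows the text one word at a time and recovers "bird eats snake". The reversed "snake eats bird" contains the same words but scores lower because its bigrams differ, a crude echo of the point that relational meaning matters. Features decoded from real fMRI data would be noisy, so an exact match should not be expected there.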

50% Accuracy: Beyond Database Retrieval

What sets this system apart is that it generates entirely new text directly from brain signals, rather than retrieving captions from a database or relying on image-processing models. Using only the generated descriptions to pick out the correct video from 100 candidates, the system was right about 50% of the time, against a chance level of 1%. The model also worked across perception and imagination, reaching up to 40% accuracy when participants recalled scenes from memory.
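A 100-way score like this is an identification accuracy: each generated description is compared with every candidate, and a trial counts as correct only if the true candidate scores highest. The Python sketch below shows that bookkeeping under assumed details: a hypothetical word-overlap (Jaccard) similarity stands in for whatever feature-based comparison the study used, ties count as misses, and chance level for N candidates is 1/N.

```python
def jaccard(a, b):
    # Hypothetical similarity: overlap of lowercase word sets (not the study's metric).
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def identification_accuracy(generated, references, similarity=jaccard):
    """Fraction of generated[i] whose true match references[i] beats every distractor."""
    correct = 0
    for i, text in enumerate(generated):
        scores = [similarity(text, ref) for ref in references]
        # Must be strictly higher than all other candidates; ties count as a miss.
        if all(scores[i] > s for j, s in enumerate(scores) if j != i):
            correct += 1
    return correct / len(generated)


def chance_level(n_candidates):
    # Expected accuracy when picking one of n candidates uniformly at random.
    return 1 / n_candidates


generated = ["a bird eats a snake", "a man hammers metal", "a dog runs in the grass"]
references = [
    "a bird eats a snake in the grass",
    "a blacksmith hammers hot metal on an anvil",
    "a cat sleeps on a sofa",
]
print(identification_accuracy(generated, references))  # 0.6666666666666666
print(chance_level(100))  # 0.01
```

In this toy run the third description is deliberately poor: it overlaps more with the bird-and-snake reference than with its own, so it is misidentified and accuracy is 2 of 3. The comparison that matters for the study is its roughly 50% against the 1% expected from guessing among 100 candidates.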

The research builds on decades of work on Broca's and Wernicke's areas, brain regions associated with producing and understanding language, including inner speech and the narratives we construct. Potential applications include communication tools for people who cannot speak, neuro-adaptive creative tools, clinical uses for cognitive disorders, and deeper insight into how the brain organizes thoughts. At the same time, the technology raises important questions about brain-data privacy, consent, and ethical safeguards.

Peter Smith
