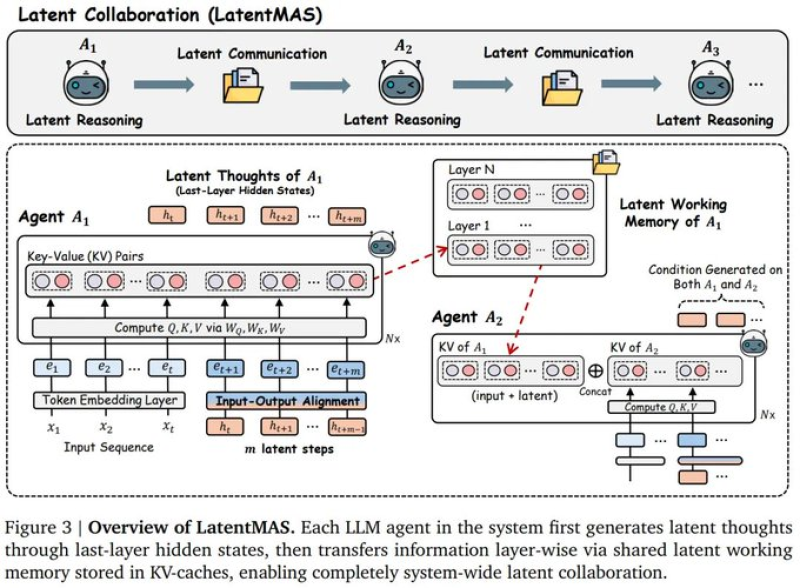

⬤ A new research framework called LatentMAS is changing how AI agents communicate: they coordinate without exchanging any text at all. Instead of passing messages back and forth in text form, the agents share their internal "thoughts" through latent representations. Think of it as agents reading each other's minds rather than sending emails. The system lets them exchange hidden states directly, so coordination happens at the representation level rather than through token-based communication.

⬤ The reported numbers are striking. LatentMAS cuts token usage by up to 83% while improving task accuracy by up to 14.6% compared with conventional text-based approaches. More notable still, the researchers achieved these gains without any additional model training. The framework relies on an architecture that reuses existing components, such as key-value caches and latent activations, which models already generate during normal operation.

As one researcher noted in the study: "By bypassing repeated token generation and decoding, LatentMAS reduces computational overhead while preserving semantic information that would otherwise be lost or compressed through text-based exchanges."

⬤ The technical setup works through a shared working memory where each agent deposits its latent reasoning states. Other agents can then tap into this collective knowledge pool, pulling relevant information directly into their own thinking process. It's fundamentally different from traditional systems where agents must constantly translate their thoughts into words, send them, and then have receiving agents translate those words back into understanding.
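To make the shared-memory idea concrete, here is a minimal toy sketch in plain Python. It is not the LatentMAS implementation: the names `LatentWorkingMemory`, `deposit`, `read`, and `toy_agent_step` are hypothetical, latent states are modeled as short lists of floats, and a simple average stands in for the model's forward pass. A real system would store hidden states or key-value caches produced by a language model.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Vector = List[float]  # stand-in for a latent state (hypothetical representation)


@dataclass
class LatentWorkingMemory:
    """Toy shared store mapping an agent name to the latent states it deposited."""
    slots: Dict[str, List[Vector]] = field(default_factory=dict)

    def deposit(self, agent: str, states: List[Vector]) -> None:
        # Append the agent's new latent states to its slot.
        self.slots.setdefault(agent, []).extend(states)

    def read(self, exclude: str) -> List[Vector]:
        # Return all states deposited by agents other than `exclude`,
        # in the order the agents first wrote to memory.
        collected: List[Vector] = []
        for agent, states in self.slots.items():
            if agent != exclude:
                collected.extend(states)
        return collected


def toy_agent_step(name: str, own_input: Vector, memory: LatentWorkingMemory) -> Vector:
    """One reasoning step: read peers' latents, combine with own input, deposit result.

    Averaging is an assumption made for illustration; it replaces the
    transformer forward pass a real agent would run over the shared states.
    """
    context = memory.read(exclude=name) + [own_input]
    dim = len(own_input)
    new_state = [sum(vec[i] for vec in context) / len(context) for i in range(dim)]
    memory.deposit(name, [new_state])
    return new_state


memory = LatentWorkingMemory()
planner_state = toy_agent_step("planner", [1.0, 0.0], memory)
solver_state = toy_agent_step("solver", [0.0, 1.0], memory)
print(planner_state)  # [1.0, 0.0]
print(solver_state)   # [0.5, 0.5]
```

The second agent never sees text from the first. It reads the first agent's vector directly from memory, which is the contrast the paragraph above draws with translating thoughts into words and back.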

⬤ This development signals where multi-agent AI is heading. As these systems grow larger and more collaborative, finding ways to cut communication overhead without sacrificing performance becomes critical. LatentMAS shows that letting agents collaborate at the representation level—rather than forcing everything through language—can deliver both efficiency and quality improvements. For researchers and companies building AI systems that need multiple agents working together, this approach opens up practical new pathways for scaling cooperation without burning through compute budgets.

Usman Salis
