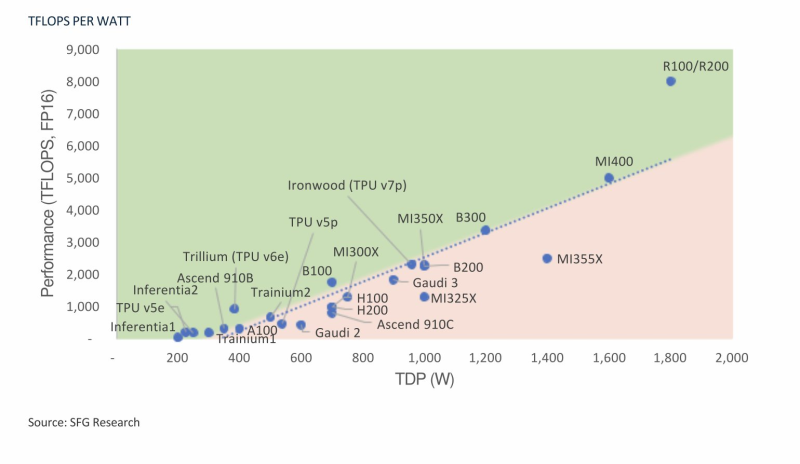

● NVIDIA's new Rubin architecture is turning heads in the AI hardware world. According to a recent post by OmerCheema, these chips have "broken the teraflops-per-watt trend in a very positive way." Fresh data from SFG Research backs this up, showing that the Rubin R100 and R200 GPUs blow past every previous efficiency record in AI hardware.

● Here's what makes this interesting: while competitors like AMD's MI400 series, Intel's Gaudi 3, and Google's TPU v7p still follow the traditional power-to-performance curve, NVIDIA's Rubin sits well above it. Simply put, Rubin delivers more teraflops for every watt it draws, which means lower operating costs for data centers already struggling with energy bills.

● The timing couldn't be better. Data centers across the U.S. are now paying over $0.20 per kilowatt-hour in major tech hubs, while China is heavily subsidizing power for its AI infrastructure. Energy efficiency has become just as important as raw performance. By pushing past expected scaling limits, NVIDIA is essentially rewriting the rules for AI hardware, making both training and inference faster and more cost-effective for large language models.
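To make the link between efficiency and the power bill concrete, here is a minimal sketch of the arithmetic. The only figure taken from the article is the $0.20 per kilowatt-hour price; the function names, the 1,000 W draw, and the efficiency values in the usage lines are hypothetical placeholders, not published Rubin specifications.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years
JOULES_PER_KWH = 3.6e6     # 1 kWh = 3.6 million joules


def annual_energy_cost(power_watts, price_per_kwh, utilization=1.0):
    """Yearly electricity cost (USD) for hardware drawing power_watts."""
    kwh = power_watts / 1000 * HOURS_PER_YEAR * utilization
    return kwh * price_per_kwh


def energy_cost_for_workload(total_tflop, tflops_per_watt, price_per_kwh):
    """Electricity cost (USD) to execute a fixed amount of compute.

    One TFLOPS per watt equals one TFLOP per joule, so the energy
    needed is total_tflop / tflops_per_watt joules.
    """
    joules = total_tflop / tflops_per_watt
    return joules / JOULES_PER_KWH * price_per_kwh


if __name__ == "__main__":
    price = 0.20  # USD per kWh, the figure quoted in the article

    # Hypothetical accelerator drawing 1,000 W around the clock.
    print(f"Annual cost at 1 kW: ${annual_energy_cost(1000, price):,.2f}")

    # Hypothetical workload and efficiency values, for illustration only.
    workload = 1e12  # total TFLOP to execute
    baseline = energy_cost_for_workload(workload, 1.0, price)
    doubled = energy_cost_for_workload(workload, 2.0, price)
    print(f"Baseline efficiency: ${baseline:,.2f}")
    print(f"Doubled efficiency:  ${doubled:,.2f}")
```

For a fixed workload, cost scales inversely with teraflops per watt, so doubling efficiency halves the energy bill at the same electricity price. This sketch covers chip power only and leaves out cooling and other facility overhead.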

● The financial impact could be massive. Cloud giants like Microsoft Azure, Google Cloud, and Amazon Web Services could expand their AI capacity without their energy costs rising in proportion. Industry analysts believe Rubin's efficiency gains could cement NVIDIA's dominance in the data center GPU market, even as AMD and Google ramp up competition.

● As OmerCheema noted in his post, Rubin isn't just another upgrade: it signals a fundamental shift in how efficiently GPUs can operate. If real-world benchmarks confirm these numbers, we might be entering an era where AI compute can grow faster than energy costs, something both investors and hyperscalers have been waiting for.

Usman Salis
