● User Ara recently pointed out something interesting: interleaved thinking is what really makes models like MiniMax M2 and Claude perform so well. The idea is simple but powerful. Instead of wiping the model's memory clean after each tool call, you let it keep its reasoning traces: its plans, hunches, and intermediate thoughts.

● This preserved reasoning state is what makes these models reliable, especially when they're handling long chains of tasks or going through multiple rounds of testing and fixing code.

● Here's where things often go wrong: many developers don't send the model's reasoning traces back to it during agentic workflows. Without that context, the model effectively starts from scratch after every tool call and loses the thread of what it was thinking. Its picture of the overall task fragments, and results on multi-step work become inconsistent.
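To make the difference concrete, here is a minimal sketch of how an agent loop might rebuild its message history between tool calls, once with the reasoning traces kept and once with them dropped. The `AssistantTurn` class, the `reasoning` field, the message dictionaries, and the tool names are all hypothetical. Real providers expose reasoning traces in their own formats (for example, as separate thinking blocks or as tagged text inside the assistant message), so check your provider's documentation for the exact field you need to pass back.

```python
from dataclasses import dataclass


@dataclass
class AssistantTurn:
    """One model response: its reasoning trace plus the visible tool call.

    Hypothetical structure for illustration; real APIs name and nest these differently.
    """
    reasoning: str
    content: str


def build_history(turns, tool_results, keep_reasoning=True):
    """Rebuild the message list that is sent back to the model on the next call.

    tool_results[i] is the output of the tool call requested in turns[i].
    With keep_reasoning=False the traces are dropped, which is the failure
    mode described above.
    """
    history = []
    for turn, result in zip(turns, tool_results):
        message = {"role": "assistant", "content": turn.content}
        if keep_reasoning:
            # Interleaved thinking: the trace stays attached to the turn that produced it.
            message["reasoning"] = turn.reasoning
        history.append(message)
        history.append({"role": "tool", "content": result})
    return history


# Two steps of a hypothetical test-and-fix loop.
turns = [
    AssistantTurn(
        reasoning="Tests fail on empty input; inspect parse() before touching the CLI.",
        content="run_tests()",
    ),
    AssistantTurn(
        reasoning="parse() returns None for ''; fix that, then rerun only test_parse.",
        content="apply_patch('parse.py')",
    ),
]
results = ["1 failed: test_parse_empty", "patch applied"]

with_traces = build_history(turns, results)
without_traces = build_history(turns, results, keep_reasoning=False)
print(with_traces[2]["reasoning"])
print("reasoning" in without_traces[2])  # False
```

The only difference between the two histories is whether each assistant turn carries its trace. With `keep_reasoning=False`, the model can see what it did on the previous step but not why it did it, which is exactly the context Ara says gets lost.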

● Ara argues this matters more than throwing more parameters at the problem: you need to maintain reasoning continuity to keep performance solid over long sessions. The biggest gains show up on multi-step, knowledge-heavy benchmarks, exactly where continuous reasoning matters most.

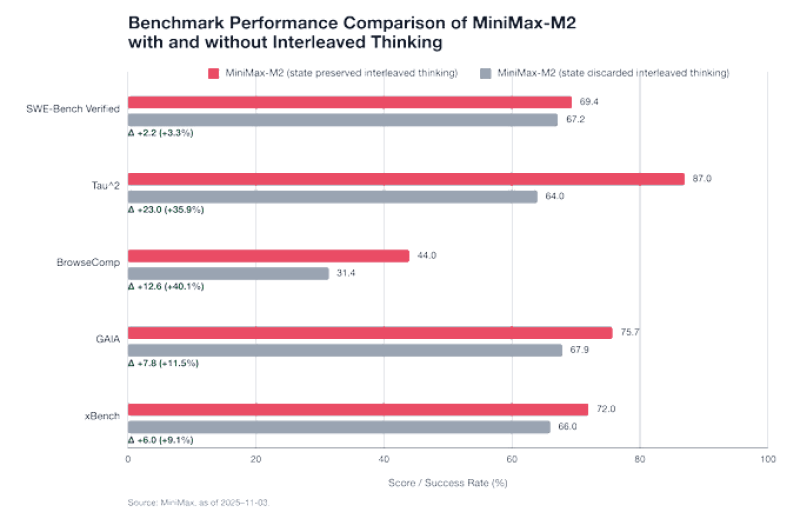

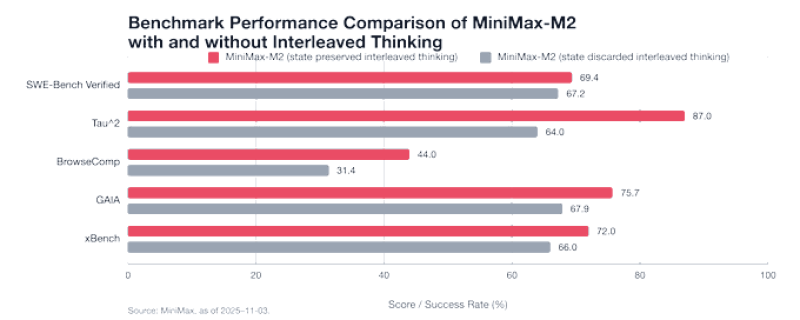

If you are already using M2 and are happy with the results, switch to interleaved thinking to get even better performance. MiniMax released benchmarks on November 3, 2025, and the results are striking. With interleaved thinking enabled, M2 shows significant jumps:

- BrowseComp: +12.6 points (+40.1%)
- Tau²: +23 points (+35.9%)
- GAIA: +7.8 points (+11.5%)
- xBench: +6 points (+9.1%)
- SWE-Bench Verified: +2.2 points (+3.3%)
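Each benchmark above is reported two ways: an absolute gain in points and a relative gain over the score without interleaved thinking. Because relative gain = absolute gain / baseline, the implied baseline can be backed out from the two numbers. The short sketch below does that arithmetic; the scores it prints are derived estimates, not figures MiniMax published, and they are only approximate because the reported percentages are rounded.

```python
# Reported gains from enabling interleaved thinking: (absolute points, relative %).
GAINS = {
    "BrowseComp": (12.6, 40.1),
    "Tau²": (23.0, 35.9),
    "GAIA": (7.8, 11.5),
    "xBench": (6.0, 9.1),
    "SWE-Bench Verified": (2.2, 3.3),
}


def implied_scores(points, percent):
    """Back out (baseline, score_with_interleaved) from an absolute and a relative gain.

    relative = points / baseline, so baseline = points / (percent / 100).
    Approximate only: the published percentages are rounded.
    """
    baseline = points / (percent / 100)
    return baseline, baseline + points


for name, (points, percent) in GAINS.items():
    before, after = implied_scores(points, percent)
    print(f"{name:20s} ~{before:5.1f} -> ~{after:5.1f}")
```

Read this way, the spread in percentages makes sense: BrowseComp has by far the lowest implied baseline (about 31), so its 12.6-point gain becomes the largest relative improvement even though Tau² gains more points.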

● Ara's point is part of a bigger shift in AI thinking: the future isn't just about bigger models, it's about smarter memory management. This could change how we deploy reasoning models going forward.

Usman Salis
