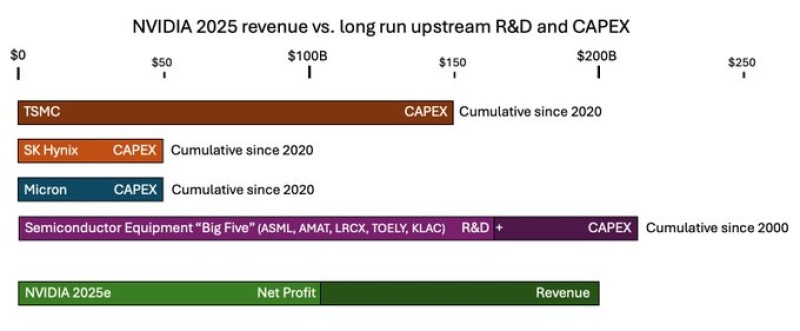

A new analysis of AI economics reveals something startling: Nvidia's revenue in 2025 alone could nearly match what the world's top semiconductor equipment makers spent on R&D and capital investment over the past 25 years combined. The analysis also explores how this wave of spending, and the physical infrastructure needed to support it, might reshape power grids and supply chains and shift the global balance of power.

How Nvidia's Profits Are Rewriting Industrial Economics

The rise of artificial intelligence isn't just about smarter algorithms. It's triggering an industrial shift on a scale we haven't seen in decades. Dwarkesh Patel, host of the Dwarkesh Podcast (formerly The Lunar Society) and a sharp observer of AI economics, recently shared findings from a detailed analysis he co-wrote with @romeovdean that breaks down what's really happening beneath the surface of the AI boom.

The scale of what's happening is hard to grasp. The authors point out that with just one year of 2025 earnings, Nvidia could cover TSMC's entire capital spending from the last three years. That's not a typo — it's a sign of how radically the economics of AI have tilted.

Nvidia is projected to pull in around $200 billion in revenue in 2025, built on roughly $6 billion worth of depreciated chip-making capacity at TSMC, a ratio of about 33 to 1. Zoom out further, and Nvidia's annual revenue nearly equals a quarter-century of combined R&D and capital investment from semiconductor equipment giants like ASML, Applied Materials, and Tokyo Electron. If AI demand holds, this kind of momentum could fund an entirely new generation of chip fabrication plants and cement AI hardware as the backbone of industrial growth for decades.

Boom or Bust: Two Paths Forward

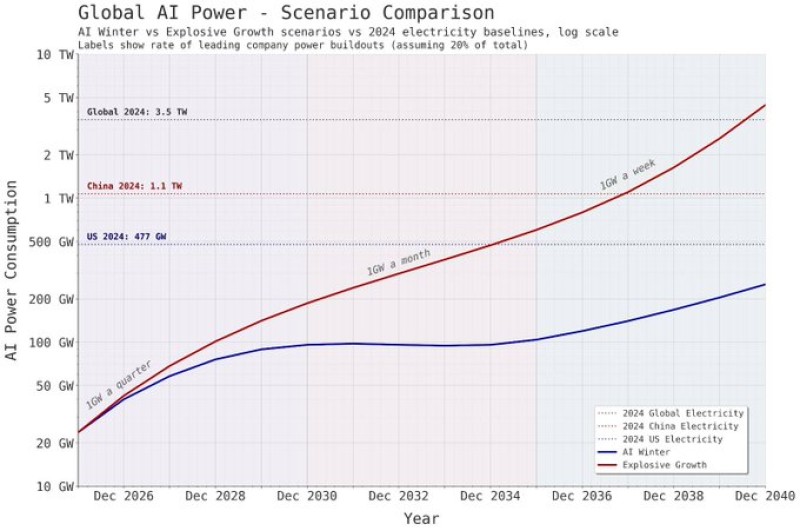

To stress-test where this could lead, the researchers modeled two very different futures running through 2040: one where growth explodes, and another where things cool off like past AI winters.

In the high-growth scenario, annual AI capital spending could hit $2 trillion by 2030, and the leading AI company would reach Sam Altman's goal of deploying one gigawatt of computing power every week by 2036. At that pace, AI's global electricity use would be more than double what the entire United States consumes today. In the slower scenario, spending levels off and follows more traditional tech cycles. But even in that conservative case, AI infrastructure still becomes a dominant force, on par with energy and transportation in terms of capital intensity.

Either way, the conclusion is the same: AI growth is bumping up against real-world limits like energy availability, raw materials, and construction timelines.
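To put the one-gigawatt-per-week figure in perspective, here is a rough back-of-the-envelope sketch in Python. It is not the authors' model. It assumes every gigawatt runs at full load all year, and it uses a round 4,000 TWh per year for current US electricity consumption, which is an outside approximation rather than a number from the analysis. The function names are illustrative.

```python
# Back-of-the-envelope sketch: energy implied by adding 1 GW of AI compute per week.
# Assumptions (not from the analysis): every gigawatt runs at full load all year,
# and US electricity consumption is roughly 4,000 TWh per year.

HOURS_PER_YEAR = 8760
WEEKS_PER_YEAR = 52
US_ANNUAL_TWH = 4000.0  # assumed round figure for current US consumption


def annual_energy_twh(capacity_gw: float) -> float:
    """Energy in TWh drawn by `capacity_gw` running continuously for one year."""
    return capacity_gw * HOURS_PER_YEAR / 1000.0  # GWh -> TWh


def years_to_match_us(gw_per_week: float = 1.0) -> float:
    """Years of build-out at `gw_per_week` until the installed fleet draws
    as much energy per year as the assumed US total."""
    added_twh_per_year = annual_energy_twh(gw_per_week * WEEKS_PER_YEAR)
    return US_ANNUAL_TWH / added_twh_per_year


if __name__ == "__main__":
    yearly_gw = 1.0 * WEEKS_PER_YEAR
    print(f"Capacity added per year: {yearly_gw:.0f} GW")
    print(f"Energy per year of additions: {annual_energy_twh(yearly_gw):.0f} TWh")
    print(f"Years of build-out to match US demand: {years_to_match_us():.1f}")
```

Under these assumptions, a single company building at that pace adds about 52 GW a year, or roughly 456 TWh of annual demand, and would match the assumed US total after a little under nine years of sustained build-out. Real utilization below 100% would stretch that timeline.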

The Overlooked Chokepoints in the Supply Chain

Beyond chips and data centers, there's a less glamorous but critical part of the story: the heavy industrial inputs that power everything, including copper wiring, gas turbines, and transformers. The industries that make them run on 10- to 30-year depreciation schedules and operate on thin margins, so they move far slower than AI's typical three-year hardware refresh cycle. That mismatch could become a serious bottleneck.

One straightforward fix the authors suggest is for hyperscalers like Google, Microsoft, and Amazon to pay heavy equipment manufacturers higher margins so they ramp up production faster. Since chips already eat up more than 60% of data center costs, even a small shift in spending toward everything else could unlock significant manufacturing capacity. As economist Tyler Cowen likes to say, we shouldn't underestimate how flexible supply can be when the incentives are right.

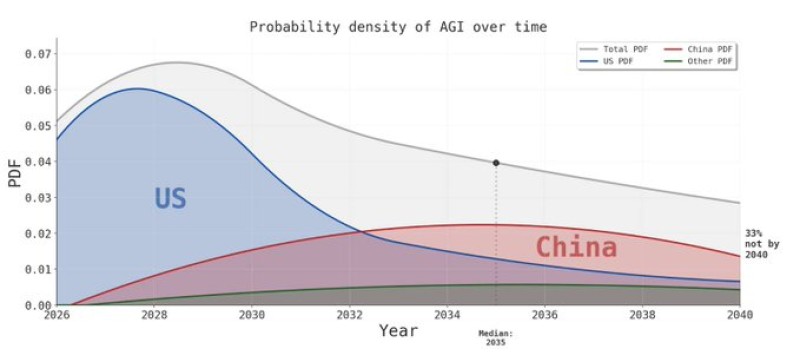

China's Position in the Long Race

The report also tackles geopolitics head-on, suggesting China might end up winning the AI race in the long run, especially if there's no major breakthrough before 2028. Since AI chips depreciate over roughly three years, the competitive landscape essentially resets with each new generation of hardware. Once China catches up in chip manufacturing, the game shifts to something more like a marathon: a never-ending industrial contest fought across fabrication plants, supply chains, and energy production. China's integrated manufacturing base and willingness to back projects with state funding could give it a lasting advantage in this kind of slow-burn, high-scale competition, where coordination and infrastructure matter more than early innovation alone.

Energy: The Real Limiting Factor

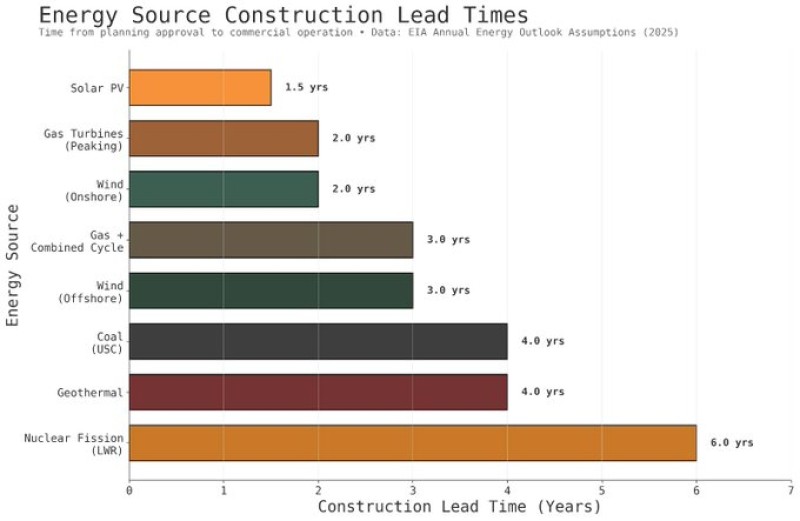

Maybe the most pressing insight from the analysis is about energy lead times: how long it takes to bring new power online for AI data centers. Every month a facility sits waiting for power is a month that its expensive chips, which make up most of the investment, sit idle. That makes natural gas the practical choice for now: it's fast to deploy, scales well, and delivers reliable power.

Nuclear energy offers clean power with low operating costs, but construction timelines are long and the upfront investment is huge. Solar and wind can be built relatively quickly, but they need massive amounts of land and labor. The report notes that powering a single one-gigawatt AI data center might require a solar farm the size of Manhattan.
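The cost of waiting for power can be roughed out with a small Python sketch. The 60% chip share and the three-year chip life come from the figures quoted in this piece (the 60% is a lower bound, since chips are said to be more than 60% of costs). The $50 billion total build cost is a purely hypothetical placeholder, not a number from the analysis, and straight-line depreciation is a simplifying assumption.

```python
# Sketch of the cost of idle chips while a data center waits for power.
# The 60% chip share and three-year life are taken from the article;
# the total_capex value used below is a hypothetical placeholder.

def monthly_idle_cost(total_capex: float,
                      chip_share: float = 0.60,
                      chip_life_years: float = 3.0) -> float:
    """Straight-line depreciation on the chips for each month of delay."""
    if not 0.0 <= chip_share <= 1.0:
        raise ValueError("chip_share must be between 0 and 1")
    if chip_life_years <= 0:
        raise ValueError("chip_life_years must be positive")
    chip_capex = total_capex * chip_share
    return chip_capex / (chip_life_years * 12)


if __name__ == "__main__":
    hypothetical_capex = 50e9  # assumed total build cost in dollars
    cost = monthly_idle_cost(hypothetical_capex)
    print(f"Chip value lost per idle month: ${cost / 1e9:.2f} billion")
```

With these inputs, each month of delay burns through roughly $0.83 billion of chip value, which helps explain why the speed of bringing power online can outweigh the cost of the power itself.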

Peter Smith
