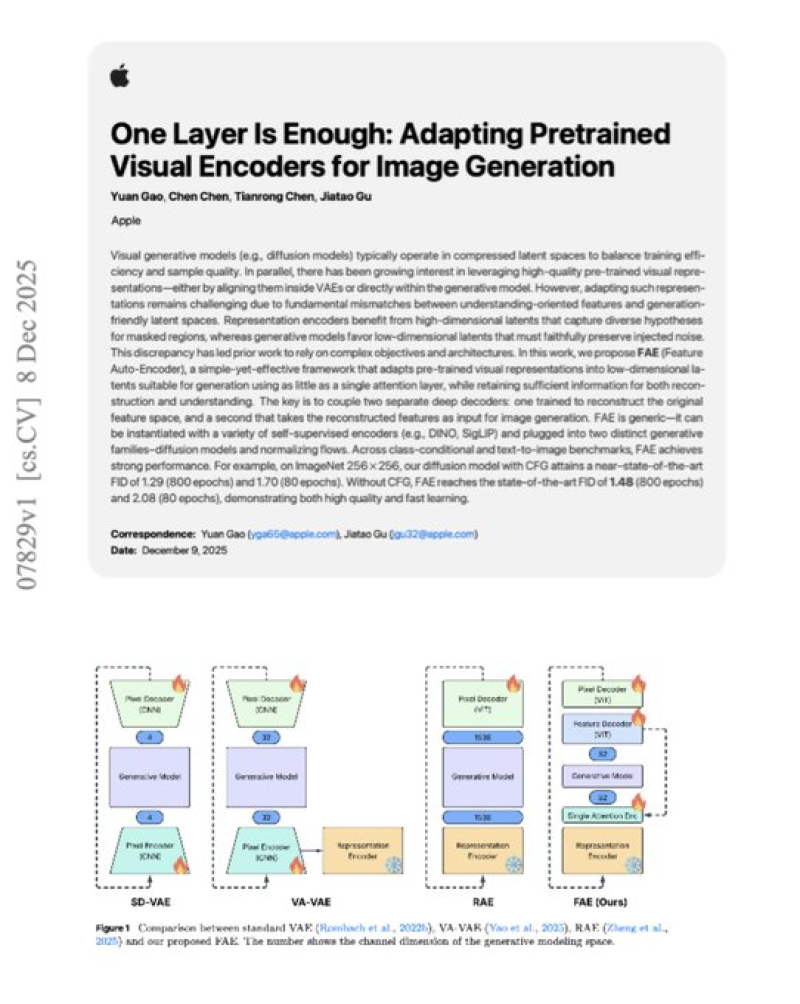

⬤ Apple researchers published a study that shows a single attention layer turns frozen vision features into strong image generators. Their paper of 8 December 2025 presents a Feature Auto-Encoder that re uses existing visual encoders instead of training new ones.

⬤ The method addresses a core problem in generative modelling - the gap between understanding pictures and creating them. Standard diffusion models rely on deep, specialised networks that raise both training effort and compute cost.

⬤ FAE splits the task in two. A frozen encoder keeps its weights - a light decoder plus one attention layer handle reconstruction plus generation. The encoder structure stays intact - yet it now outputs images.

⬤ The team evaluated FAE on CIFAR-10 LSUN ImageNet and text-to-image tasks. With fewer training steps it matched or surpassed standard diffusion results indicating that future systems can recycle existing representations instead of growing ever larger.

Peter Smith

Peter Smith

Peter Smith

Peter Smith