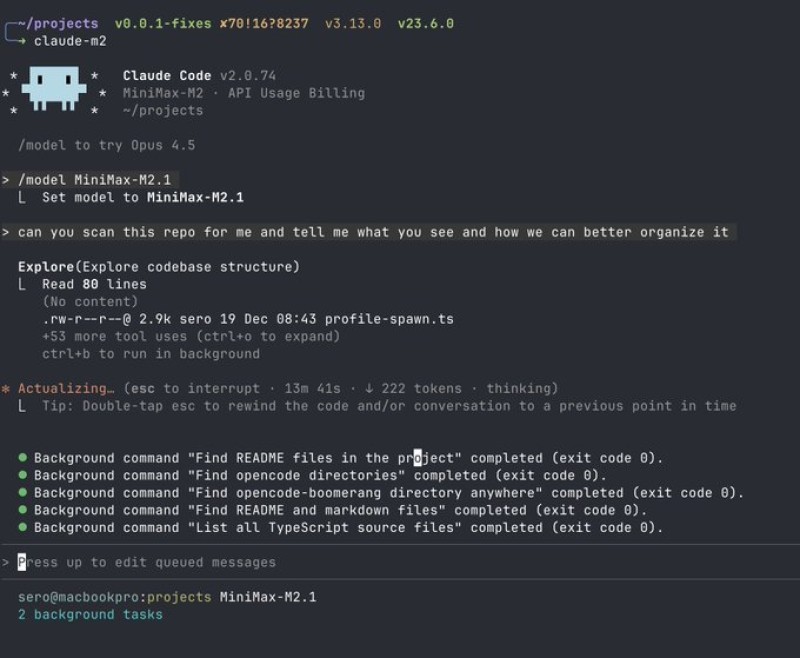

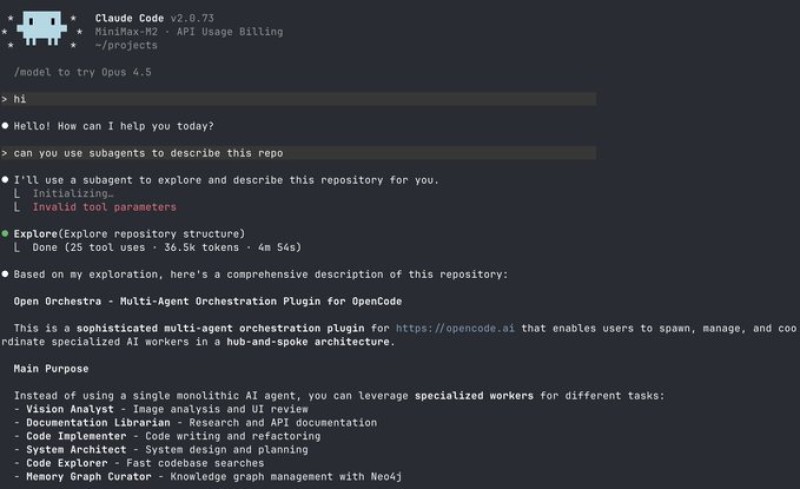

⬤ MiniMax-M2.1 is turning heads after a side-by-side comparison showed major improvements in how it handles subagents within Claude Code CLI. The test ran MiniMax-M2.1 and MiniMax-M2 through identical repository exploration tasks on the same day. Screenshots from the demonstration show MiniMax-M2.1 smoothly coordinating multiple subagents in parallel, while M2 failed during tool execution after launching just one subagent.

⬤ The first screenshot shows MiniMax-M2.1 exploring a code repository inside Claude Code CLI. Terminal output confirms that several background commands completed without issues: scanning README files, pulling documentation, and listing TypeScript source files. What stands out is that these tasks ran simultaneously, with five subagents launched and managed in parallel, each handling its own part of the exploration.

⬤ The second screenshot tells a different story. MiniMax-M2 attempted the same subagent-based exploration but hit an "Invalid tool parameters" error almost immediately. The failure occurred before additional subagents could be deployed, blocking task decomposition entirely. This side-by-side comparison indicates that MiniMax-M2.1 handles tool calls and multi-agent coordination far more reliably, at least in this scenario.

⬤ Why does this matter? As AI coding tools shift toward autonomous, multi-task workflows, dependable subagent orchestration becomes critical. When you're analyzing, refactoring, or documenting large codebases, the ability to delegate work across several agents directly impacts efficiency. Demonstrations like this suggest that incremental improvements in models like MiniMax-M2.1 could make multi-agent systems genuinely practical for real-world software development.

Usman Salis