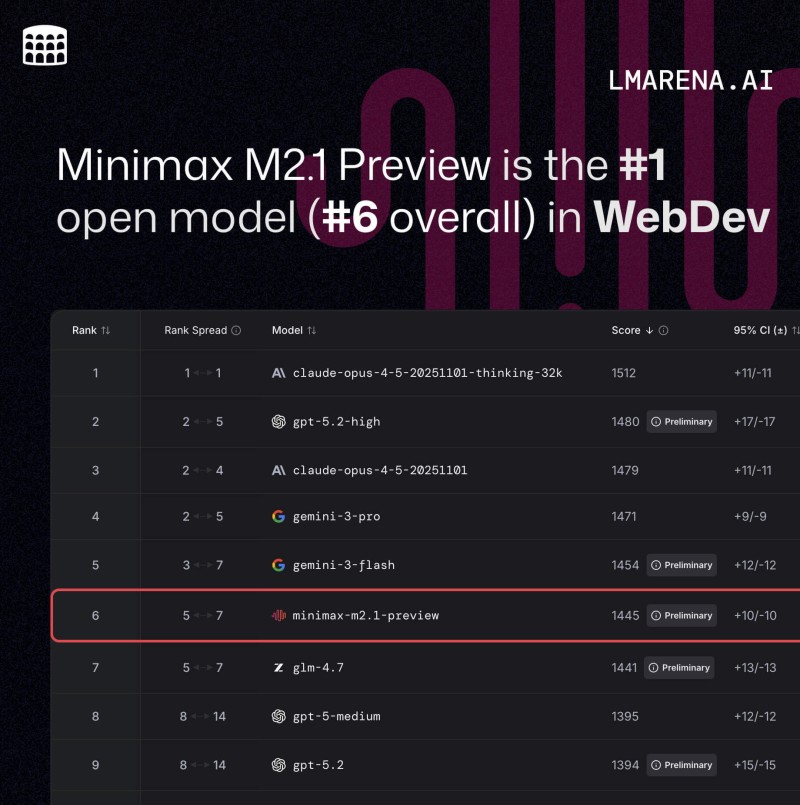

⬤ MiniMax AI is making waves after lmarena.ai announced that the company's M2.1 Preview model topped the open-model category for WebDev on Code Arena's leaderboard. The model scored 1445 points, placing #6 in the overall rankings, right next to GLM-4.7. What makes Code Arena interesting is that it doesn't rely on synthetic tests. Instead, it evaluates models by having them build actual websites, apps, and games from a single natural-language prompt, giving us a clearer picture of real-world coding chops.

⬤ Right now, Claude-Opus-4.5-Thinking leads the pack at 1512, with GPT-5.2-High at 1480 and Claude-Opus-4.5 at 1479 close behind. Google's Gemini-3-Pro sits at #4 with 1471, and Gemini-3-Flash holds #5 at 1454. MiniMax M2.1 Preview landed just after these heavyweights, making it the strongest open WebDev model in the competition. The score carries a preliminary confidence interval, which is standard for Code Arena's reporting. What's really noteworthy here is how quickly open models are catching up to the top proprietary systems in practical coding tasks.

⬤ You can now test MiniMax M2.1 directly inside Code Arena and compare it head-to-head with other leading models. Battle Mode voting is still running, so the community can weigh in on rankings, with updated results coming soon. Since the competition focuses on building working software from one prompt, the WebDev leaderboard has become a go-to metric for measuring real-world AI development skills.

⬤ This matters because competitive benchmarks shape how we see AI capabilities and influence which technologies gain traction. MiniMax M2.1 Preview's strong showing suggests that open-access models keep getting better, driving broader innovation and heating up competition across the entire AI space.

Saad Ullah
