⬤ Liquid AI just dropped something interesting with LFM2-2.6B-Exp, a compact 3B-class model that punches way above its weight. What makes it different? It's an experimental checkpoint built on top of the pre-trained LFM2-2.6B foundation and then trained further with reinforcement learning, combined with what the company calls "dynamic hybrid reasoning." The model stays small enough for edge devices while getting smarter at specific tasks through RL with verifiable rewards, meaning rewards that a program can check automatically.
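To make "verifiable rewards" a bit more concrete, here is a minimal sketch of what an automatically checkable reward could look like for instruction-following prompts. This is not Liquid AI's actual reward code; the function names (`word_limit_reward`, `keyword_reward`, `combined_reward`) and the scoring scheme are assumptions made purely for illustration.

```python
from typing import Sequence


def word_limit_reward(response: str, max_words: int) -> float:
    """Return 1.0 if the response stays within the word limit, else 0.0."""
    return 1.0 if len(response.split()) <= max_words else 0.0


def keyword_reward(response: str, required: Sequence[str]) -> float:
    """Return the fraction of required keywords found (case-insensitive)."""
    if not required:
        return 1.0  # nothing to check, so the constraint is trivially met
    text = response.lower()
    hits = sum(1 for keyword in required if keyword.lower() in text)
    return hits / len(required)


def combined_reward(response: str, max_words: int, required: Sequence[str]) -> float:
    """Hypothetical combination: both constraints must hold to earn full reward."""
    return word_limit_reward(response, max_words) * keyword_reward(response, required)


if __name__ == "__main__":
    answer = "LFM2 runs on edge devices."
    print(combined_reward(answer, max_words=10, required=["edge", "LFM2"]))
```

The point is that no human or judge model is needed: a script can confirm whether each instruction was satisfied, which is what lets the reward signal scale during RL training.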

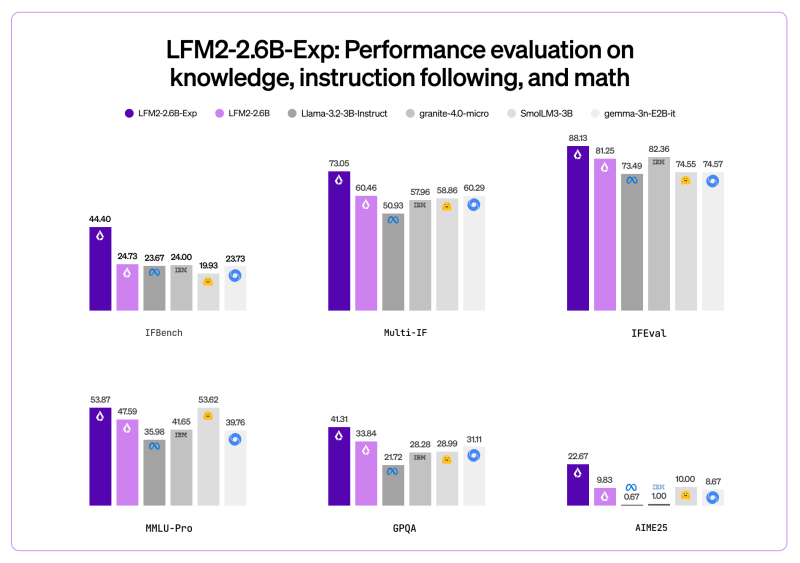

⬤ The technical setup is pretty slick. LFM2-2.6B-Exp pairs short-range LIV convolutions with grouped query attention, a combination meant to keep inference efficient. It handles a 32,768-token context window and was trained on 10 trillion tokens. Here's where it gets interesting: on IFBench, an instruction-following benchmark, this little 3B-class model beats DeepSeek R1-0528 and other much larger competitors. That performance makes it surprisingly useful for agentic systems and structured data extraction, tasks you'd normally need bigger models for.
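For readers wondering why grouped query attention matters on small devices, the sketch below shows the general idea: several query heads share one key/value head, which shrinks the key/value cache that has to sit in memory for a long context. Every configuration number here (layer count, head counts, head size) is hypothetical and chosen only to make the arithmetic easy to follow; they are not LFM2-2.6B-Exp's published settings. Only the 32,768-token context length comes from the article.

```python
def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Map a query head index to the key/value head it shares under GQA."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into key/value heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes(
    n_attn_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_value: int = 2,  # 2 bytes assumes 16-bit values
) -> int:
    """Estimate cache size: keys plus values for every layer, head, and token."""
    return 2 * n_attn_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


if __name__ == "__main__":
    # Hypothetical configuration, for illustration only.
    layers, q_heads, kv_heads, head_dim = 8, 32, 8, 64
    context = 32_768  # context window quoted in the article

    gqa = kv_cache_bytes(layers, kv_heads, head_dim, context)
    mha = kv_cache_bytes(layers, q_heads, head_dim, context)
    print(f"GQA cache: {gqa / 2**20:.0f} MiB")
    print(f"Full multi-head cache: {mha / 2**20:.0f} MiB")
```

Under these made-up numbers, sharing each key/value head across four query heads cuts the cache to a quarter of what standard multi-head attention would need, which is the kind of saving that matters on a phone.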

⬤ What really matters is where this thing can actually work. LFM2-2.6B-Exp is designed to run on-device, meaning it could power smart assistants on your phone or tablet without needing cloud processing. It's good at following instructions and pulling out specific information while staying efficient enough for consumer hardware. That's the real story here—we're seeing smaller models that can actually do useful work locally instead of depending on massive data centers.

⬤ This release shows where AI development is heading. Instead of just building bigger and bigger models, companies like Liquid AI are figuring out how to make smaller ones smarter through techniques like reinforcement learning. If this trend continues, we'll see more capable AI running directly on mobile devices, smart home systems, and autonomous platforms without the latency and privacy concerns of cloud-based processing.

Alex Dudov
