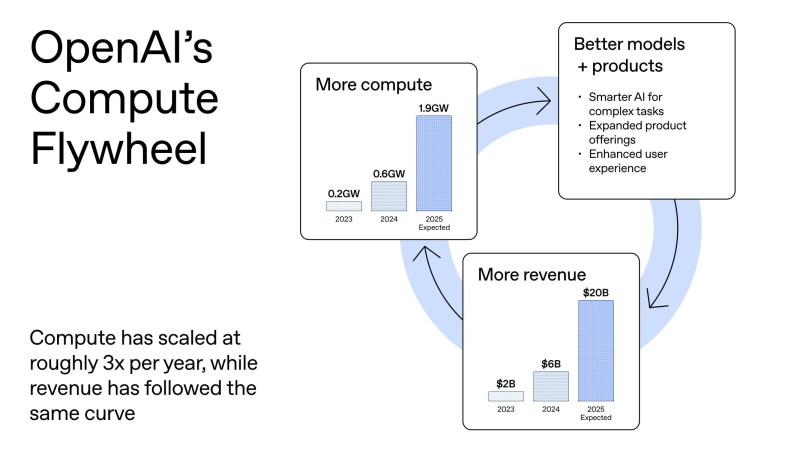

⬤ OpenAI has laid out its compute-driven growth engine, showing how capital flows into infrastructure that powers better AI models, which then generate more revenue to fuel the cycle. The company's compute capacity jumped from 0.2 gigawatts in 2023 to 0.6 gigawatts in 2024, with projections hitting 1.9 gigawatts in 2025. This isn't gradual scaling: it's exponential growth, with capacity roughly tripling each year, designed to keep infrastructure ahead of product ambitions and make compute the foundation of everything OpenAI builds.

⬤ Revenue numbers track closely with compute expansion. OpenAI went from $2 billion in revenue in 2023 to $6 billion in 2024, with $20 billion projected for 2025. That's roughly a 3x annual multiplier on both compute and revenue, which suggests infrastructure investment converts directly into commercial results. The more tightly these curves align, the clearer it becomes that more compute means better products, and better products mean higher revenue.
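To make the "roughly 3x" claim concrete, the short Python sketch below recomputes the year-over-year multiples from the figures quoted above. The variable and function names (`compute_gw`, `revenue_usd_bn`, `yoy_multiples`) are illustrative and not from OpenAI, the 2025 values are projections, and revenue per gigawatt is an assumed back-of-the-envelope ratio rather than a metric the company reports.

```python
# Figures quoted in the article; the 2025 values are projections.
compute_gw = {2023: 0.2, 2024: 0.6, 2025: 1.9}        # gigawatts of compute
revenue_usd_bn = {2023: 2.0, 2024: 6.0, 2025: 20.0}   # revenue, billions of USD


def yoy_multiples(series):
    """Return {year: value / previous year's value} for consecutive years."""
    years = sorted(series)
    return {year: series[year] / series[prev] for prev, year in zip(years, years[1:])}


compute_growth = yoy_multiples(compute_gw)
revenue_growth = yoy_multiples(revenue_usd_bn)

# Assumed illustrative ratio: billions of USD of revenue per gigawatt of compute.
revenue_per_gw = {year: revenue_usd_bn[year] / compute_gw[year] for year in compute_gw}

for year in sorted(compute_growth):
    print(f"{year}: compute x{compute_growth[year]:.2f}, "
          f"revenue x{revenue_growth[year]:.2f}")

for year in sorted(revenue_per_gw):
    print(f"{year}: ${revenue_per_gw[year]:.1f}B per GW")
```

On these numbers, compute grows about 3.0x and then about 3.2x, while revenue grows 3.0x and then about 3.3x, so the two curves move together without matching exactly. Revenue per gigawatt stays near $10 billion in each year, which is the near-constant ratio behind the claim that the curves align.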

⬤ OpenAI's leadership is making it clear: demand isn't the problem. The constraint is infrastructure—specifically how fast they can build data centers, secure power, and deploy hardware. The flywheel model suggests the company has plenty of ideas and market appetite, but growth now depends on winning the race for compute capacity. Product launches are getting delayed not because features aren't ready, but because the infrastructure to support them isn't live yet.

⬤ This shift reflects industry-wide competition for compute resources. With AI companies facing a projected global compute shortage in 2025, access to power and hardware has become a strategic chokepoint. OpenAI is signaling that future progress hinges less on algorithmic breakthroughs and more on securing the physical infrastructure needed to run increasingly demanding systems at scale.

Alex Dudov
