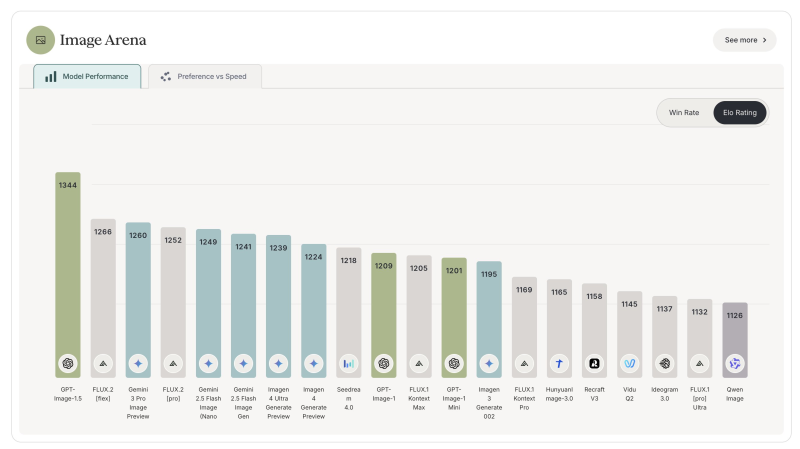

⬤ OpenAI's GPT-Image-1.5 has taken over the top spot on Image Arena, a platform where AI image models compete based on what users actually prefer. The new model climbed nine places compared to GPT-Image-1, a substantial jump relative to other image generators. Image Arena ranks these tools using Elo ratings (basically the same scoring system chess players use), so the numbers reflect real head-to-head matchups where users pick which images they like better.
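For readers unfamiliar with Elo, here is a minimal sketch of how a single head-to-head vote could move two ratings under the classic chess formula. The function names and the K-factor of 32 are illustrative assumptions; Image Arena's actual rating procedure is not described here and may differ.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the classic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return both ratings after one vote (k=32 is an assumed K-factor)."""
    exp_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    delta = k * (score_a - exp_a)  # the winner gains what the loser gives up
    return rating_a + delta, rating_b - delta


# Two equally rated models: the preferred one gains 16 points, the other loses 16.
new_a, new_b = update(1200.0, 1200.0, a_won=True)
```

The key property is that an upset (a lower-rated model winning) moves the ratings more than an expected result does.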

⬤ Right now GPT-Image-1.5 sits at the top with an Elo score around 1344. Flux.2 comes in second at roughly 1266, and Nano Banana Pro holds third place. Google's lineup—including Gemini 3 Pro Image Preview and various Imagen models—clusters just below the podium. It's worth noting these rankings capture overall user preferences rather than measuring specific capabilities like prompt accuracy or style consistency.
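To put the gap between first and second place in perspective, the sketch below converts an Elo difference into an implied head-to-head preference rate. It assumes the standard chess-style logistic formula with a 400-point scale; whether Image Arena uses exactly this scale is an assumption, and `win_probability` is a hypothetical helper name.

```python
def win_probability(rating_gap: float) -> float:
    """Implied chance the higher-rated model is preferred, given the Elo gap.

    Assumes the conventional 400-point logistic scale used in chess Elo.
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


# Approximate scores quoted in the article: 1344 for first place, 1266 for second.
gap = 1344 - 1266
p = win_probability(gap)
print(f"Elo gap of {gap} points -> preferred in about {p:.0%} of matchups")
```

Under these assumptions, a 78-point lead works out to the top model being preferred in roughly 61% of direct comparisons, which means the runner-up would still win a sizable share of votes.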

⬤ The new rankings sparked plenty of debate in AI circles. Some observers questioned whether the gap between Flux.2 and Nano Banana Pro really reflects the quality difference most people see in practice. The pushback points to lingering doubts about preference-based benchmarks—especially when tiny Elo differences can shuffle the entire leaderboard and create outsized reputational impact for the models involved.

⬤ This latest shakeup shows just how competitive AI image generation has become, with new models constantly challenging whoever's on top. Public leaderboards like Image Arena are increasingly driving where developers focus their energy and shaping market buzz around these platforms. At the same time, the mixed reactions reveal ongoing uncertainty about whether current evaluation methods truly capture what makes one image generator better than another in real-world use.

Eseandre Mordi
