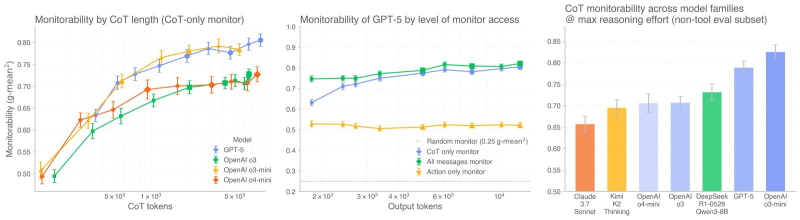

⬤ OpenAI has published research titled "Monitoring Monitorability" that challenges a common assumption. Rather than making oversight harder, longer AI reasoning chains make it easier to track what a model is doing under the hood. That matters for anyone worried about keeping tabs on increasingly sophisticated AI systems, especially as transparency keeps dominating industry conversations.

⬤ The data tells a fairly clear story: as chain-of-thought token length increases, monitorability scores climb with it across GPT-5, OpenAI o3, OpenAI o3-mini, and OpenAI o4-mini. Equally interesting, heavy reinforcement learning doesn't seem to hurt transparency. In other words, making models smarter through advanced optimization doesn't automatically make them more opaque, which should ease some concerns about AI becoming a black box.

⬤ In head-to-head comparisons, GPT-5 and OpenAI o3-mini scored particularly high on monitorability when running at full reasoning capacity, outperforming several competing AI families in the evaluation. Bottom line: longer reasoning chains don't hide what AI is doing. They give us better visibility into how these systems reach their conclusions.

Alex Dudov
