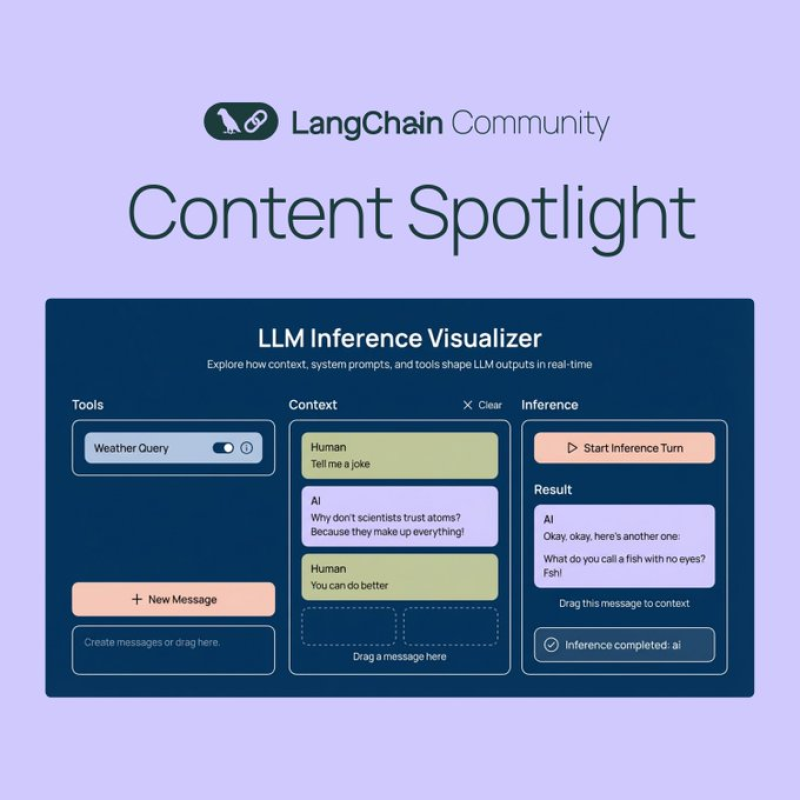

⬤ LangChain, one of the go-to frameworks for building LLM-powered applications, just launched something pretty cool: the LLM Inference Visualizer. It's an interactive playground where you can watch how context, system prompts, and tool workflows shape what large language models actually produce.

⬤ Here's where it gets interesting: you can drag and drop messages around and see how the output changes. It's like having a control panel for AI behavior. The tool uses LangChain's message types to break down how the pieces of a conversation fit together, so developers and researchers can see what's going on under the hood instead of guessing.

⬤ It gives developers a much sharper way to tweak and optimize their LLM projects. Whether you're building content generators, chatbots, or more complex AI systems, being able to watch in real time how each input changes the model's output means you can spot problems faster and cut down on trial and error.
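
To make the drag-and-drop idea concrete, here is a rough sketch of what reordering messages means at the data level. It is not the Visualizer's code and does not use LangChain itself: the `Message` class and the `move` and `render` helpers are hypothetical stand-ins, and the roles simply mirror LangChain's system, human, AI, and tool message types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """Hypothetical stand-in for a chat message (not a LangChain class)."""
    role: str      # "system", "human", "ai", or "tool"
    content: str


def move(messages, src, dst):
    """Return a new list with the message at index src moved to index dst."""
    reordered = list(messages)          # copy so the original stays untouched
    reordered.insert(dst, reordered.pop(src))
    return reordered


def render(messages):
    """Show the sequence in the order a model would receive it."""
    return "\n".join(f"[{m.role}] {m.content}" for m in messages)


conversation = [
    Message("system", "Answer in one sentence."),
    Message("human", "What is the weather in Paris?"),
    Message("ai", "Calling the weather tool."),
    Message("tool", "18 C, cloudy"),
]

# "Drag" the system prompt from the top of the conversation to the bottom.
reordered = move(conversation, 0, 3)

print(render(conversation))
print("---")
print(render(reordered))
```

The content is identical in both versions; only the order differs. That ordering is part of the input a model sees, which is why moving a single message can change the response.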

Usman Salis
