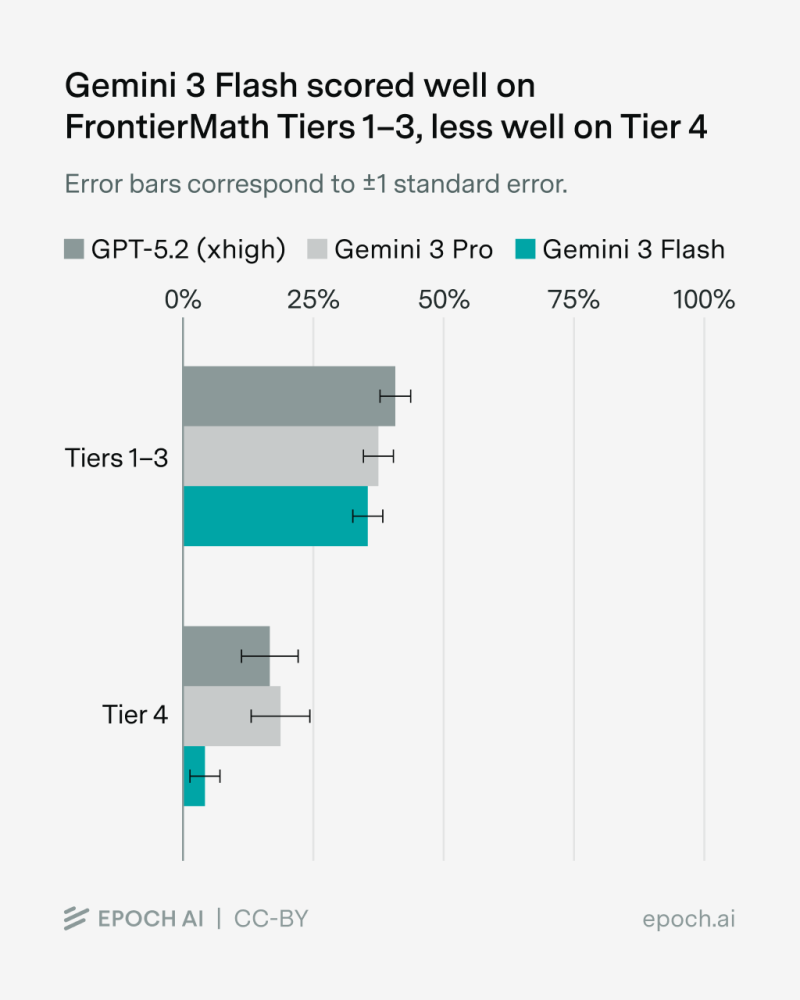

⬤ Fresh benchmark data from Epoch AI reveals how Google's Gemini 3 Flash handles advanced math problems. The model scored 36% on FrontierMath Tiers 1–3, roughly level with leading AI systems on these tiers. However, it fell well behind on Tier 4, the benchmark's hardest category. The results, shared on X by @EpochAIResearch, include direct comparisons with Gemini 3 Pro and GPT-5.2 (xhigh).

⬤ The data shows Gemini 3 Flash landing in the mid-30% range for Tiers 1–3, putting it right alongside other top performers. GPT-5.2 (xhigh) edges ahead on these tiers, with Gemini 3 Pro sitting just below, while Gemini 3 Flash stays competitive within the margin of error. Epoch AI included error bars representing plus or minus one standard error, showing the models are fairly close on these intermediate challenges.
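To make "plus or minus one standard error" concrete, here is a minimal Python sketch of how such an error bar could be computed for a pass rate. It assumes a simple binomial model, which may not be the method Epoch AI actually uses, and the problem count of 100 is a hypothetical placeholder rather than the real size of the benchmark. The function names are illustrative only.

```python
import math


def pass_rate_standard_error(pass_rate: float, n_problems: int) -> float:
    """Standard error of a pass rate under a binomial model (an assumption)."""
    if n_problems <= 0:
        raise ValueError("n_problems must be positive")
    if not 0.0 <= pass_rate <= 1.0:
        raise ValueError("pass_rate must be between 0 and 1")
    return math.sqrt(pass_rate * (1.0 - pass_rate) / n_problems)


def one_se_interval(pass_rate: float, n_problems: int) -> tuple[float, float]:
    """Return (low, high) for pass_rate +/- one standard error, clipped to [0, 1]."""
    se = pass_rate_standard_error(pass_rate, n_problems)
    return (max(0.0, pass_rate - se), min(1.0, pass_rate + se))


def intervals_overlap(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """True when two (low, high) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


# 0.36 is the reported Tiers 1-3 score; 100 problems is a hypothetical count.
HYPOTHETICAL_N = 100
flash_low, flash_high = one_se_interval(0.36, HYPOTHETICAL_N)
print(f"36% +/- 1 SE with n={HYPOTHETICAL_N}: {flash_low:.3f} to {flash_high:.3f}")
```

Under these assumptions, a larger problem set shrinks the bar and a smaller one widens it, which is why scores a few points apart can still be statistically hard to separate.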

⬤ The gap widens dramatically on Tier 4. Here, Gemini 3 Flash scores noticeably lower than both GPT-5.2 (xhigh) and Gemini 3 Pro, landing near the bottom of the chart for the toughest math problems. This is consistent with what Epoch AI highlighted: the model handles the Tier 1–3 problems well but struggles when pushed to the absolute frontier of mathematical reasoning.

⬤ These findings show just how much performance can shift across difficulty levels in cutting-edge AI benchmarks. Gemini 3 Flash proves it can tackle a broad range of math challenges, but the Tier 4 results make clear there's still work to do at the highest complexity levels. For Alphabet, it's a mixed picture: solid progress within the Gemini lineup, but a persistent gap at the top as competition among leading models increasingly centers on advanced reasoning capabilities.

Sergey Diakov
