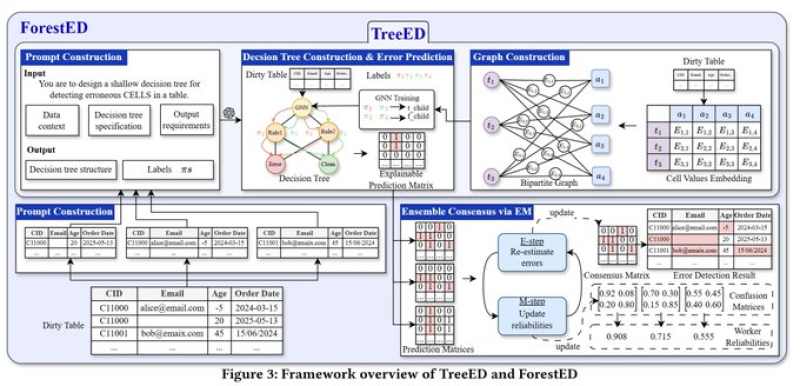

⬤ An academic paper introduces ForestED, a data-cleaning framework that changes how large language models (LLMs) are used to detect errors in datasets. Instead of letting LLMs label errors directly, which produces unpredictable results and drives up costs, ForestED puts them to work as system architects. The framework uses decision trees, graph modeling, and ensemble methods to replace black-box prompting with transparent, traceable logic.

⬤ The core innovation tackles a major LLM weakness: when models inspect data cells directly, their outputs vary from run to run and are hard to audit. ForestED flips this approach by having the LLM first design decision trees from sampled data. The nodes of these trees combine rule-based checks, graph neural networks, and specialized classifiers, and the messy tables themselves are modeled as structured graphs. Every detected error can be traced back through a clear decision path, making the whole process auditable.
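To make the idea of a traceable decision path concrete, here is a minimal Python sketch. It is not the paper's implementation: the `Node`, `Leaf`, and `classify` names, the `age` column, and the two rules are all hypothetical, and the nodes here hold only simple rule-based checks rather than graph neural networks or learned classifiers. It only illustrates how a tree can return its verdict together with the checks that led to it.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    is_error: bool  # final verdict for the row


@dataclass
class Node:
    name: str                      # human-readable label recorded in the audit trail
    check: Callable[[dict], bool]  # hypothetical rule applied to one row
    if_true: Union["Node", Leaf]
    if_false: Union["Node", Leaf]


def classify(tree, row):
    """Walk the tree and return (is_error, path of (check name, outcome))."""
    path = []
    node = tree
    while isinstance(node, Node):
        outcome = node.check(row)
        path.append((node.name, outcome))
        node = node.if_true if outcome else node.if_false
    return node.is_error, path


# Illustrative tree: flag rows whose "age" is missing or implausible.
tree = Node(
    name="age_is_missing",
    check=lambda row: row.get("age") is None,
    if_true=Leaf(is_error=True),
    if_false=Node(
        name="age_in_plausible_range",
        check=lambda row: 0 <= row["age"] <= 120,
        if_true=Leaf(is_error=False),
        if_false=Leaf(is_error=True),
    ),
)

print(classify(tree, {"age": 240}))
# (True, [('age_is_missing', False), ('age_in_plausible_range', False)])
```

Because the path is returned alongside the verdict, a reviewer can see exactly which checks fired for any flagged row.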

⬤ Stability comes from ensemble thinking. Rather than trusting a single LLM-generated tree, ForestED builds multiple trees using uncertainty-based sampling, then runs an Expectation-Maximization (EM) process to find consensus. Each tree is weighted by its estimated reliability, so trees with flawed logic contribute less to the final decision. The system also cuts token costs by limiting LLM involvement to the design phase rather than execution.
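The reliability-weighted consensus can be sketched with a small EM loop. This is an assumption-laden illustration, not ForestED's actual procedure: it uses a simple "one accuracy per tree" model in the style of Dawid-Skene label aggregation, and the `em_consensus` function and the vote matrix below are made up for the example.

```python
import math


def _clamp(x, eps=1e-3):
    # Keep probabilities away from 0 and 1 so the logarithms stay finite.
    return min(max(x, eps), 1 - eps)


def em_consensus(votes, iterations=20):
    """votes[t][i] is 1 if tree t flags cell i as an error, else 0.

    Returns (posterior, accuracy): the estimated probability that each cell
    is an error, and the estimated reliability of each tree.
    """
    n_trees, n_cells = len(votes), len(votes[0])
    # Start from the plain (unweighted) share of trees flagging each cell.
    posterior = [sum(v[i] for v in votes) / n_trees for i in range(n_cells)]
    accuracy = [0.5] * n_trees
    for _ in range(iterations):
        # M-step: reliability = expected agreement with the current consensus.
        for t in range(n_trees):
            agree = sum(p if votes[t][i] else 1 - p
                        for i, p in enumerate(posterior))
            accuracy[t] = _clamp(agree / n_cells)
        prior = _clamp(sum(posterior) / n_cells)
        # E-step: recompute each cell's error probability from weighted votes.
        for i in range(n_cells):
            log_odds = math.log(prior) - math.log(1 - prior)
            for t in range(n_trees):
                weight = math.log(accuracy[t]) - math.log(1 - accuracy[t])
                log_odds += weight if votes[t][i] else -weight
            # Numerically stable sigmoid.
            if log_odds >= 0:
                posterior[i] = 1 / (1 + math.exp(-log_odds))
            else:
                e = math.exp(log_odds)
                posterior[i] = e / (1 + e)
    return posterior, accuracy


# Four hypothetical trees voting on six cells; the last tree is noisy.
votes = [
    [1, 0, 0, 1, 0, 0],
    [1, 0, 0, 1, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 1, 0, 1, 1, 0],
]
posterior, accuracy = em_consensus(votes)
flagged = [i for i, p in enumerate(posterior) if p > 0.5]
print(flagged, [round(a, 2) for a in accuracy])
```

In this toy run the noisy fourth tree ends up with a lower reliability estimate than the first two, so its disagreeing votes carry little weight in the final flags.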

⬤ ForestED points toward a bigger shift in AI architecture. By moving from "LLM-as-Operator" to "LLM-as-Engineer," it makes the case that combining symbolic logic, neural networks, and probabilistic consensus yields more dependable systems. For enterprises dealing with large, error-filled datasets, this framework offers a practical path to both accuracy and transparency.

Marina Lyubimova
