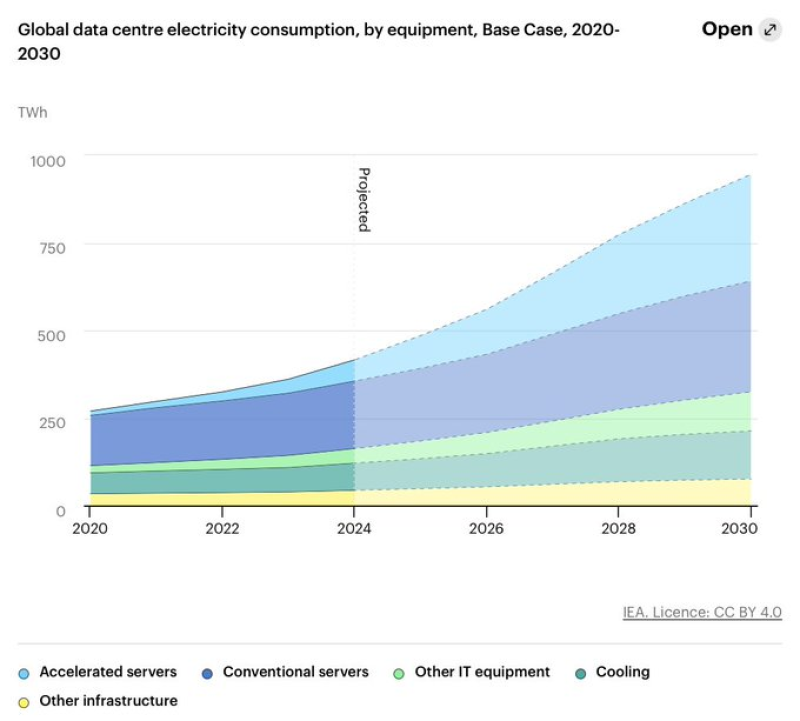

⬤ Data centers worldwide are heading toward a massive power crunch. The International Energy Agency's latest projections show their electricity consumption rising from around 300 terawatt-hours (TWh) in 2020 to nearly 945 TWh by 2030. AI workloads are the main driver of this spike, with demand accelerating sharply after 2024.

⬤ Looking at what's actually consuming all this electricity, accelerated servers running AI workloads far outstrip every other category. Traditional servers, cooling systems, and other IT equipment can't match the power draw of AI training and inference. Cloud computing and high-density computing setups keep pushing those numbers higher, and all of them need electricity flowing 24/7 without interruption.

Until fusion energy becomes commercially viable, the projected growth in data center electricity demand points to a significant energy bottleneck, one likely to shape infrastructure investment and global competitiveness in advanced computing.

⬤ Here's where things get tricky: data centers can't afford downtime, so they need constant power. Solar and wind sound great in theory, but their output is intermittent by nature. Without battery storage deployed at a scale that doesn't exist yet, renewables alone can't cover that demand. That's pushing nuclear power back into the spotlight as one of the few low-carbon sources that can deliver steady electricity around the clock.

⬤ Nuclear isn't perfect: new plants take many years to build, fuel supply depends on uranium mining, and long-term waste storage remains largely unsolved. But for countries serious about staying competitive in the AI race, it's the best option available right now. This energy bottleneck isn't going away anytime soon, and it will force some hard decisions about infrastructure spending and energy strategy in the coming years.

Saad Ullah
