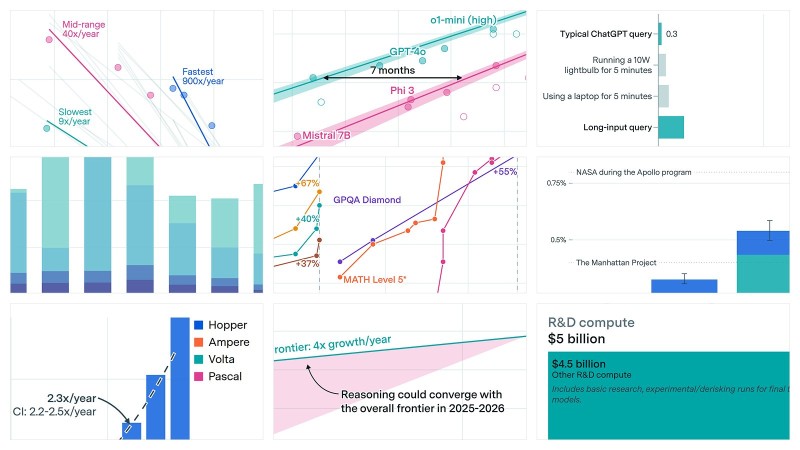

⬤ Epoch AI published a retrospective review of its most-read research from 2025, showing how dramatically large language models evolved over the year. The accompanying charts depict rapid, exponential development: performance leaps that previously took years now happen within months. The analysis draws on 36 Data Insights posts and 37 Gradient Updates newsletter issues published during 2025.

⬤ Readers gravitated toward posts tracking model capability growth, reasoning performance, and scaling trends, and the data behind those posts points in one direction. Charts show steep upward curves across multiple benchmarks, particularly in advanced reasoning and mathematical problem-solving. What makes these visuals remarkable is the compression of progress: successive model generations are improving fast enough to reshape development timelines and industry expectations.

⬤ Beyond raw capability, efficiency gains stand out as a major theme. Energy consumption per query has dropped significantly compared to earlier model generations, even as performance climbs higher. Hardware and compute scaling charts reveal steady improvements driven by better accelerators and smarter optimization techniques. The data suggests recent progress stems not just from building bigger models, but from training and running them far more efficiently.

⬤ This 2025 snapshot matters because it quantifies how rapidly the AI frontier is shifting. Exponential gains in performance, reasoning, and resource efficiency are changing both what LLMs can accomplish and how quickly they evolve. Epoch AI's retrospective offers concrete evidence that innovation is accelerating and that the acceleration has been sustained, providing useful context for understanding research priorities, infrastructure needs, and competitive dynamics as large language models continue to advance into 2026.

Usman Salis