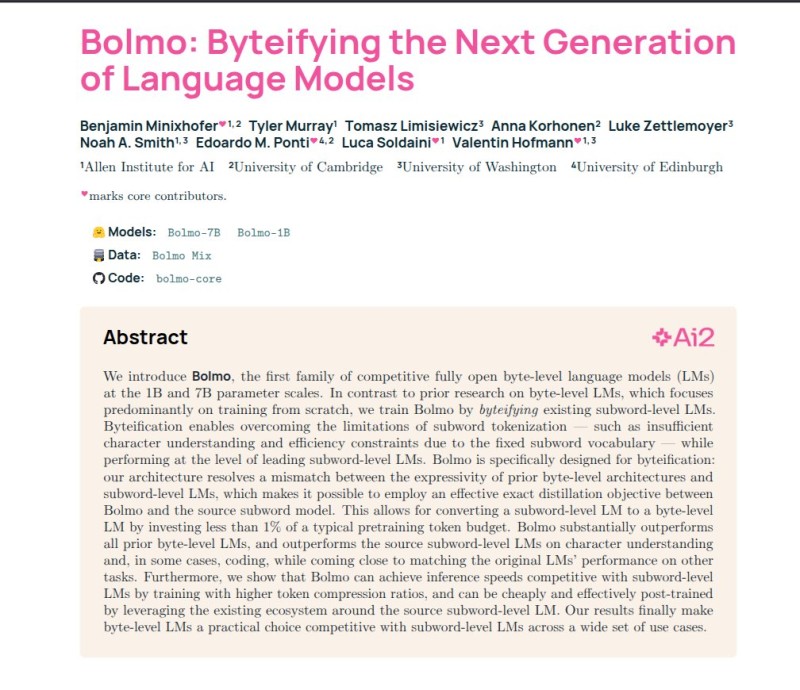

⬤ A research team from the Allen Institute for AI, the University of Cambridge, and partner institutions has released Bolmo, a new family of byte-level language models available in 1B and 7B parameter versions. Instead of training from scratch, Bolmo "byteifies" existing subword-based models through an efficient conversion process. The approach transforms token-based systems into byte-level models with minimal additional training, as detailed in their newly published research paper.

⬤ Traditional language models depend on fixed subword vocabularies, which make exact spelling difficult, handle uncommon or multilingual text poorly, and behave unpredictably at word boundaries. Bolmo sidesteps these issues by working directly with UTF-8 bytes, the raw numeric encoding of text. Because byte sequences are much longer than token sequences, the model learns to merge bytes into compact patches so that sequence lengths stay manageable. A specialized boundary module lets the system look one byte ahead during processing, which helps align patch boundaries with actual linguistic units such as words, symbols, and punctuation marks.
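
To make the idea of bytes and patches concrete, here is a minimal Python sketch. It is not Bolmo's method: Bolmo learns its patch boundaries, whereas this toy uses a hand-written rule, and the names `byte_class` and `toy_patches` are hypothetical. It only illustrates how looking one byte ahead can decide where a patch ends.

```python
def byte_class(b: int) -> str:
    """Coarse class of a single byte (illustrative rule, not from the paper)."""
    # Bytes >= 0x80 belong to multi-byte UTF-8 characters; keep them with words
    # so a character such as "é" is never split across two patches.
    if b >= 0x80 or chr(b).isalnum() or b == ord("_"):
        return "word"
    if chr(b).isspace():
        return "space"
    return "other"  # punctuation and symbols


def toy_patches(data: bytes) -> list[bytes]:
    """Group bytes into patches using one byte of lookahead."""
    patches = []
    start = 0
    for i, cur in enumerate(data):
        nxt = data[i + 1] if i + 1 < len(data) else None
        ends_here = (
            nxt is None                              # end of input
            or byte_class(cur) == "other"            # punctuation stands alone
            or byte_class(cur) != byte_class(nxt)    # class changes at next byte
        )
        if ends_here:
            patches.append(data[start:i + 1])
            start = i + 1
    return patches


raw = "Héllo, world!".encode("utf-8")
print(list(raw))         # the integer byte values the model would see
print(toy_patches(raw))  # far fewer patches than bytes
```

In this example the 13-character string becomes 14 bytes, because "é" takes two bytes in UTF-8, and the toy rule groups those bytes into five patches.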

⬤ Training happens in two stages and sharply reduces computational cost. First, the Transformer core from the original subword model stays frozen while the new byte-level components are trained through distillation to reproduce the source model's behavior. Second, the entire model is fine-tuned together. The whole process uses less than 1% of the tokens needed for standard pretraining. According to the paper, Bolmo outperforms previous byte-level models on character-level tasks and coding benchmarks while staying competitive with the original subword models across other evaluations.
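
The two-stage schedule can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the paper's implementation: the parameter group names are hypothetical, and a KL divergence between teacher and student output distributions is used here as one common distillation objective, since the article does not specify the exact loss Bolmo uses.

```python
import math

# Hypothetical parameter groups; the real model's module names may differ.
GROUPS = ("byte_encoder", "boundary_module", "transformer_core", "byte_decoder")


def trainable_groups(stage: int) -> set[str]:
    """Which groups receive gradient updates in each stage."""
    if stage == 1:
        # Stage 1: the Transformer core is frozen, only byte-level parts learn.
        return {g for g in GROUPS if g != "transformer_core"}
    if stage == 2:
        # Stage 2: everything is fine-tuned together.
        return set(GROUPS)
    raise ValueError("stage must be 1 or 2")


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(teacher_logits: list[float], student_logits: list[float]) -> float:
    """KL(teacher || student): zero when the student matches the teacher."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [2.0, 0.5, -1.0]
print(sorted(trainable_groups(1)))                # core is absent in stage 1
print(kl_divergence(teacher, [0.0, 0.0, 0.0]))    # positive: student differs
print(kl_divergence(teacher, teacher))            # 0.0: student matches
```

Minimizing a loss of this kind pushes the student's predictions toward the teacher's, which is the sense in which the byte-level components copy the source model during the first stage.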

⬤ Bolmo shows that byte-level language models can be both practical and competitive without sacrificing openness. By combining efficient distillation, a specialized byte-processing architecture, and open licensing, the project positions byte-level modeling as a credible alternative to traditional subword methods. The findings suggest that more language models may adopt byte-level representations for better robustness, multilingual capability, and accessibility in open research.

Saad Ullah
